The experiments were run on a PC with 64-bit Windows 11, an Intel Core i5-12500H 2.40 GHz CPU, and 24 GB of RAM. Spyder was used as the development environment. The UAVID and VAID datasets are the two benchmark datasets used to assess the performance of the proposed architecture. Both datasets undergo k-fold cross-validation to evaluate the reliability of the proposed system. This section describes the datasets, explains the experiments, and compares the system with other state-of-the-art methods.
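The k-fold protocol mentioned above can be sketched as follows. This is a minimal standard-library illustration, not the authors' code: the paper does not report the number of folds or the shuffling seed, so `k=5`, `seed=0`, and the helper name `k_fold_indices` are assumptions.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) index lists for k-fold cross-validation.

    k and seed are illustrative assumptions; the paper does not state them.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread samples as evenly as possible: the first (n_samples % k)
    # folds receive one extra sample.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


# Usage: every sample is used for validation exactly once across the folds.
for fold, (train_idx, val_idx) in enumerate(k_fold_indices(10, k=5)):
    print(f"fold {fold}: train={len(train_idx)} val={len(val_idx)}")
```

The reported score would then typically be the mean of the per-fold scores, although the paper does not say how the folds were aggregated.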

4.2 Dataset description

This subsection describes each dataset used in our study, including its distinctive characteristics, data sources, and collection methods.

4.2.1 UAVID dataset

The UAVID dataset offers a high-resolution view of urban environments for semantic segmentation tasks. It comprises 30 video sequences of 4K images (3,840 × 2,160 pixels) captured from oblique angles. Each frame is densely labeled with 8 object categories: buildings, roads, static cars, trees, low vegetation, humans, moving cars, and background clutter. The dataset provides 300 labeled images for training and validation, with the remaining video frames serving as the unlabeled test set. This allows researchers to train their models on diverse urban scenes and evaluate their performance on unseen data (Yang et al., 2021) (Figure 10).

Figure 10. Sample image frames from the UAVID dataset.

4.2.2 VAID dataset

The VAID collection features six vehicle categories: minibus, truck, sedan, bus, van, and car (Lin et al., 2020). The images were captured by a drone under different illumination conditions, with the drone flying between 90 and 95 meters above the ground. The footage was recorded at 23.98 frames per second with a resolution of 2,720 × 1,530 pixels. The dataset covers road and traffic conditions at 10 sites in southern Taiwan, spanning an urban setting, an educational campus, and a suburban town (Figure 11).

Figure 11. Sample image frames from the VAID dataset.

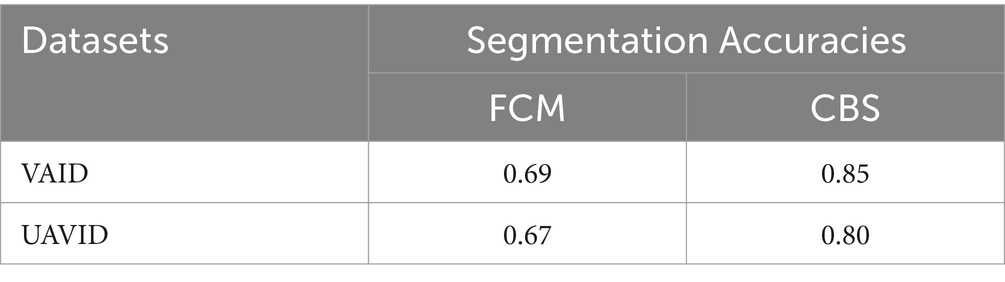

5 Results and analysis

5.1 Experiment I: semantic segmentation accuracy

The CBS and FCM algorithms were compared in terms of segmentation accuracy and computational time. FCM requires training on a bespoke dataset, which increases its computational cost compared with CBS. Furthermore, CBS produced better segmentation results than FCM, so we used the CBS output in the subsequent experiments. Table 2 shows the accuracy of both segmentation strategies.

Table 2. Accuracy comparison of FCM and CBS segmentation.

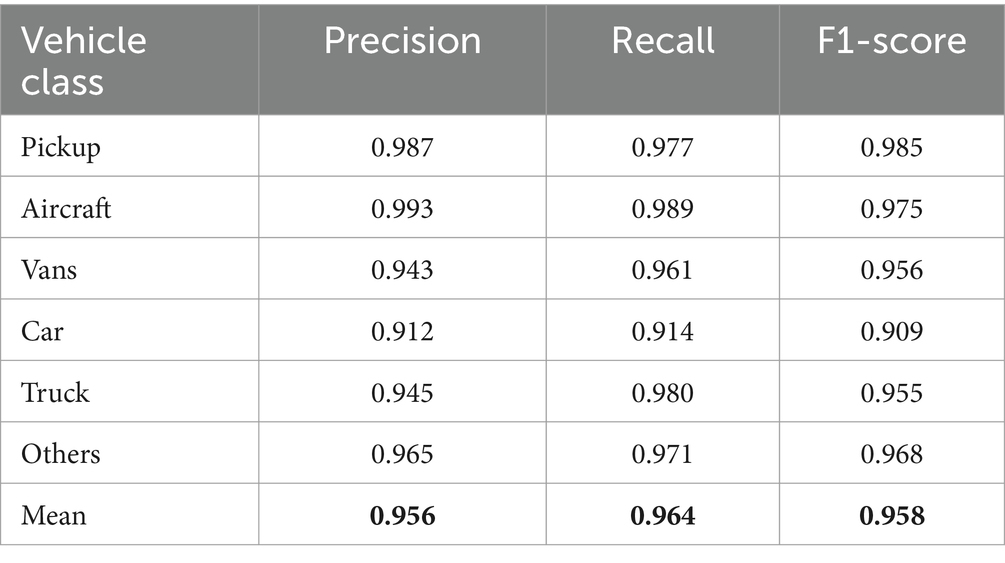

5.2 Experiment II: precision, recall, and F1 scores

The effectiveness of vehicle detection and tracking was assessed using three evaluation metrics, Precision, Recall, and F1 score, calculated using Equations 13, 14, and 15 below:

$$\text{Precision} = \frac{\sum TP}{\sum TP + \sum FP} \tag{13}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{14}$$

$$\text{F1 Score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{15}$$

Table 3 shows the precision, recall, and F1 scores for vehicle detection. True Positives (TP) are vehicles that are correctly detected, False Positives (FP) are detections that are not vehicles, and False Negatives (FN) are vehicles that were missed. The findings indicate that the proposed system can accurately detect vehicles of varying sizes (Table 4).
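As a worked illustration of Equations 13, 14, and 15, the sketch below computes the three metrics from raw detection counts. The counts are hypothetical and are not taken from Tables 3 or 4; the function name `detection_metrics` and the convention of returning 0.0 for an empty denominator are likewise assumptions.

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, f1) from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts: 90 vehicles detected correctly, 10 spurious
# detections, and 5 vehicles missed.
precision, recall, f1 = detection_metrics(tp=90, fp=10, fn=5)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```

Because F1 is the harmonic mean of precision and recall, it is pulled toward the lower of the two, which is why it is usually reported alongside them rather than instead of them.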

Table 3. Overall accuracy, precision, recall, and F1-score for vehicle detection over the UAVID dataset.

Table 4. Overall accuracy, precision, recall, and F1-score for vehicle detection over the VAID dataset.

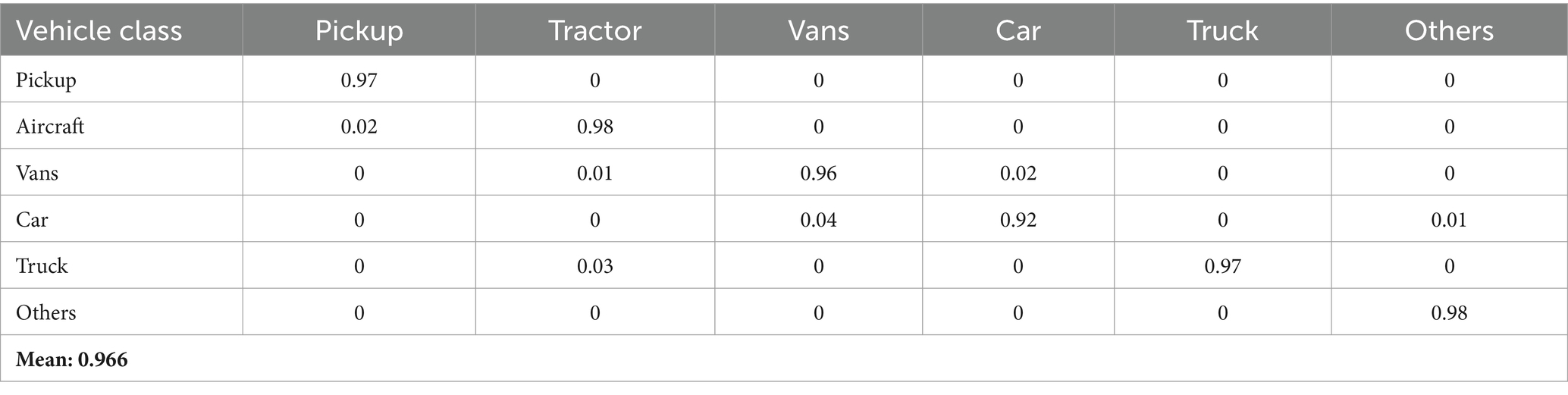

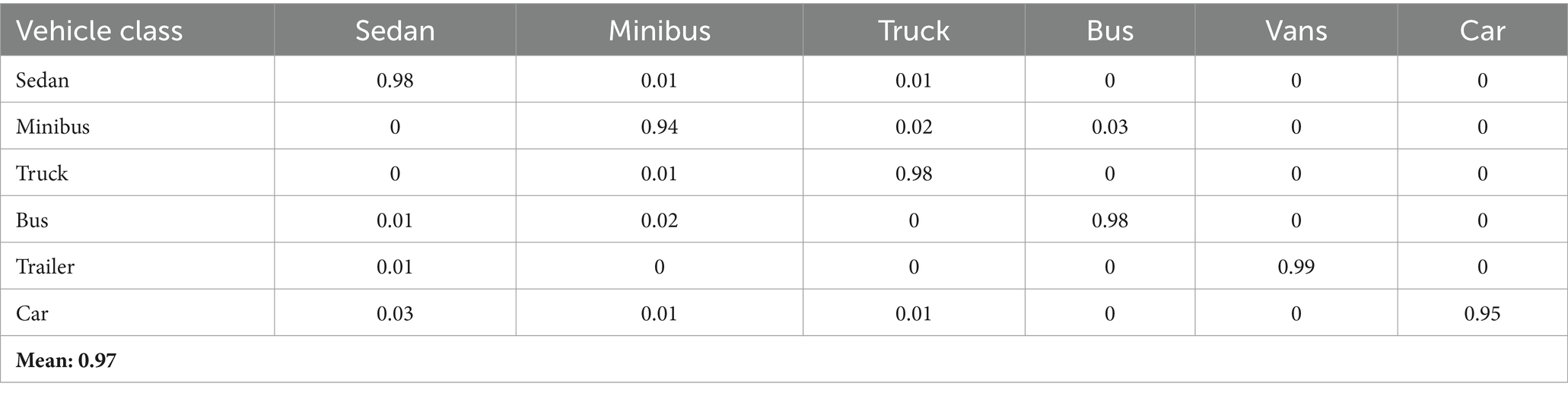

5.3 Experiment IV: confusion matrix

Tables 5 and 6 provide confusion matrices that illustrate the performance of our vehicle classification method on the UAVID and VAID datasets, respectively. These matrices show how frequently vehicles from each class are correctly identified (diagonal elements) as opposed to being misclassified (off-diagonal elements). Table 5 shows that our proposed method achieved high precision across the vehicle classes, with an overall mean precision of 0.966. Similarly, Table 6 shows a mean precision of 0.97 on the VAID dataset. These results indicate that the classifier performs consistently across multiple vehicle types.
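To make the link between a confusion matrix and a mean per-class score concrete, here is a minimal sketch. It assumes the common convention that rows are true classes and columns are predicted classes, which the paper does not state; the 3-class matrix is hypothetical and does not reproduce Tables 5 or 6.

```python
def per_class_scores(matrix):
    """Return (precision, recall) lists from a square confusion matrix.

    Assumed convention: matrix[i][j] counts samples of true class i
    that were predicted as class j.
    """
    n = len(matrix)
    if any(len(row) != n for row in matrix):
        raise ValueError("confusion matrix must be square")
    precision, recall = [], []
    for c in range(n):
        correct = matrix[c][c]
        predicted_as_c = sum(matrix[r][c] for r in range(n))  # column sum
        actually_c = sum(matrix[c])  # row sum
        precision.append(correct / predicted_as_c if predicted_as_c else 0.0)
        recall.append(correct / actually_c if actually_c else 0.0)
    return precision, recall


def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


# Hypothetical counts for three vehicle classes.
example = [
    [48, 1, 1],
    [2, 45, 3],
    [0, 4, 46],
]
precision, recall = per_class_scores(example)
print("mean precision:", round(mean(precision), 3))
print("mean recall:", round(mean(recall), 3))
```

If the published matrices are row-normalized, their diagonal entries are per-class recall rather than precision under this convention, so the convention matters when comparing against other work.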

Table 5. Confusion matrix illustrating the precision of our proposed vehicle classification approach on the UAVID dataset.

Table 6. Confusion matrix illustrating the accuracy of our proposed vehicle classification approach on the VAID dataset.

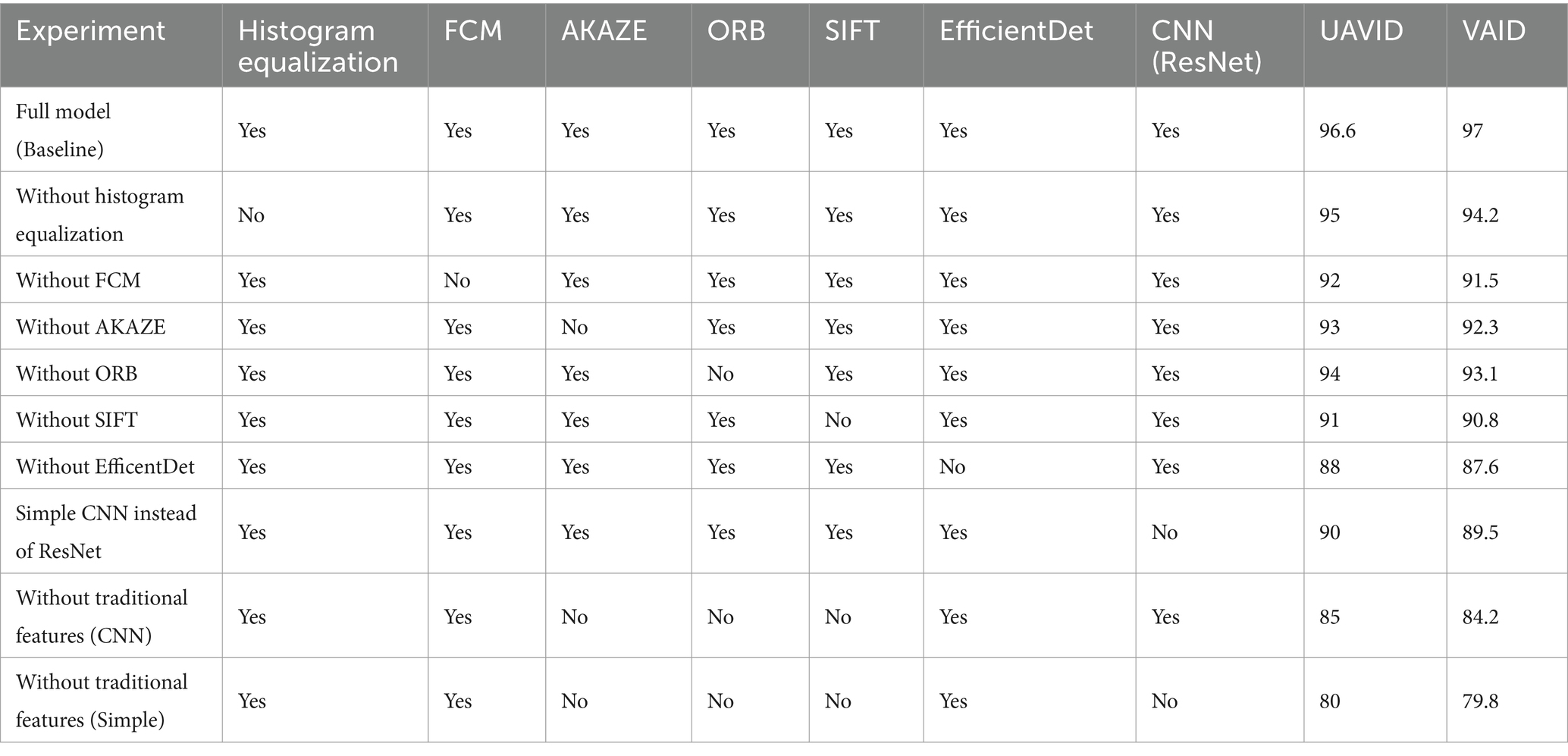

5.4 Experiment V: ablation study experiment

The ablation study in Table 7 evaluates the performance of our model by systematically removing individual components. Each row represents a version of the model with a specific component removed, and the corresponding accuracy is measured on the UAVID and VAID datasets. This table demonstrates the importance of each component in achieving high accuracy.

Table 7. Ablation study experiment of all methods on UAVID and VAID datasets.

The ablation study presented in Table 7 demonstrates the robustness and effectiveness of the proposed model components on the UAVID and VAID datasets. Removing individual components such as histogram equalization, FCM, AKAZE, ORB, SIFT, and EfficientDet significantly degrades the model performance, indicating their essential contributions. Notably, the absence of EfficientDet results in the most substantial drop in accuracy, underscoring its critical role in the detection pipeline. Additionally, substituting the ResNet backbone with a simpler CNN architecture leads to a noticeable decline in performance, highlighting the importance of using a sophisticated feature extractor. These findings validate the necessity of the integrated components and their synergistic effect in achieving high accuracy for UAV-based vehicle detection.
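The way an ablation table is usually read can be sketched as follows: subtract each ablated accuracy from the full-model accuracy and rank the components by the resulting drop. The numbers below are hypothetical placeholders, not the values in Table 7, and `rank_ablation_drops` is an illustrative helper name.

```python
def rank_ablation_drops(full_accuracy, ablated):
    """Return (component, drop) pairs sorted by largest accuracy drop first.

    full_accuracy: accuracy of the complete model (percent).
    ablated: mapping from removed component to the accuracy without it.
    """
    drops = {name: full_accuracy - acc for name, acc in ablated.items()}
    return sorted(drops.items(), key=lambda item: item[1], reverse=True)


# Hypothetical accuracies (percent) for illustration only.
hypothetical = {
    "component A": 93.0,
    "component B": 88.5,
    "component C": 95.0,
}
for name, drop in rank_ablation_drops(96.0, hypothetical):
    print(f"without {name}: -{drop:.1f} points")
```

The component whose removal causes the largest drop is the one the pipeline depends on most, which is the reasoning applied to EfficientDet above.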

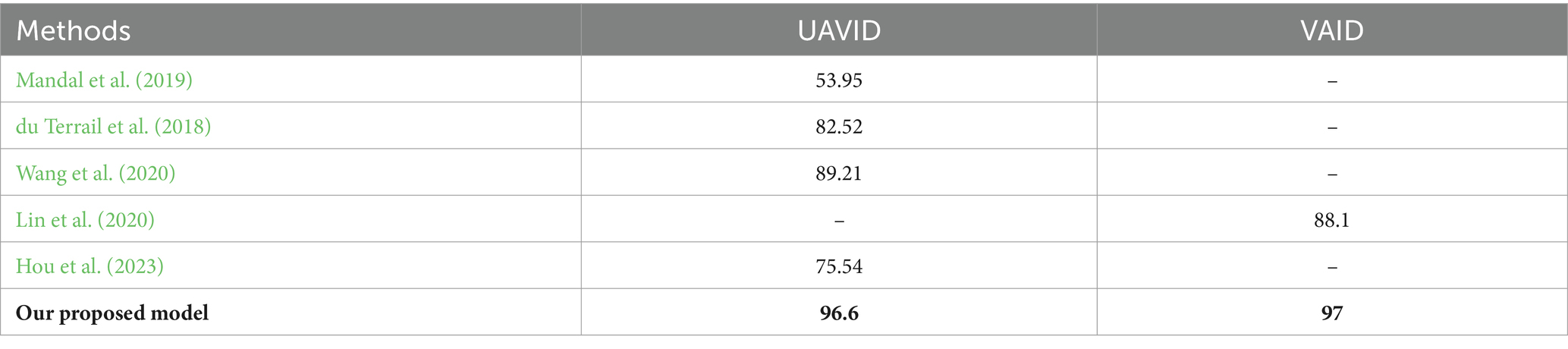

5.5 Comparison with other state-of-the-art methods

Table 8 compares our proposed model’s performance with existing state-of-the-art methods. The figures for our model are consistent with those in Table 7.

Table 8. Comparison of the proposed method with existing methods on UAVID and VAID datasets.

The use of cross-validation supports the robustness of the model for vehicle detection and aerial image classification. The use of EfficientDet, which is well known for detecting objects of various sizes and appearances, lends further credibility to our approach. Furthermore, capturing important features of the surrounding environment along with the shape and texture of the objects improves classification accuracy.

5.6 Detailed analysis of the comparison with other state-of-the-art methods

Table 8 provides a comparison of our proposed method with existing methods on the UAVID and VAID datasets. The results highlight the significant improvement in performance achieved by our approach:

1. Superior Performance on UAVID Dataset: Our suggested model obtains an accuracy of 96.6%, which is substantially greater than the accuracies produced by existing state-of-the-art approaches such as Mandal et al. (53.95%), Terrail et al. (82.52%), Wang et al. (89.21%), and Hou et al. (75.54%). This highlights the stability and efficacy of our approach in managing the intricacies of the UAVID dataset.

2. Outstanding Results on VAID Dataset: For the VAID dataset, our technique obtains an accuracy of 97%, exceeding Lin et al.’s method, which achieved 88.1%. This suggests that our technique is highly effective at vehicle detection and classification across the varied environmental conditions and vehicle types captured in the VAID dataset.
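The size of these margins can be checked with simple arithmetic. The snippet below uses only the accuracies quoted in the two list items above and reports the gain in percentage points over each baseline; the dictionary layout and the helper name `margins` are illustrative choices.

```python
# Accuracies (%) as quoted in the text above.
OURS = {"UAVID": 96.6, "VAID": 97.0}
BASELINES = {
    "UAVID": {
        "Mandal et al.": 53.95,
        "Terrail et al.": 82.52,
        "Wang et al.": 89.21,
        "Hou et al.": 75.54,
    },
    "VAID": {"Lin et al.": 88.1},
}


def margins(ours, baselines):
    """Return {dataset: {method: gain in percentage points}}, rounded to 2 decimals."""
    return {
        dataset: {
            method: round(ours[dataset] - acc, 2)
            for method, acc in methods.items()
        }
        for dataset, methods in baselines.items()
    }


for dataset, gains in margins(OURS, BASELINES).items():
    for method, gain in gains.items():
        print(f"{dataset}: +{gain:.2f} points over {method}")
```

The smallest UAVID margin is the one over Wang et al., so that is the comparison that best indicates how far the proposed method is ahead of the strongest listed baseline.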

The benefit of our proposed strategy is further underlined by the use of EfficientDet for vehicle detection. EfficientDet’s compound scaling method, efficient feature fusion, and use of focal loss contribute to its strong performance in object detection tasks, as seen in the high accuracy and recall rates attained on both datasets. Moreover, the combination of methods such as Histogram Equalization, FCM, AKAZE, ORB, and SIFT in our model further strengthens its ability to recognize and categorize vehicles in aerial images.
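The focal loss that EfficientDet uses for its classification head down-weights easy examples so that training concentrates on hard ones. A minimal single-prediction sketch is shown below. The defaults `alpha=0.25` and `gamma=2.0` follow the original focal-loss formulation and are assumptions here, since the paper does not report the values it used; `focal_loss` is an illustrative function name, not a library call.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-12):
    """Binary focal loss for a single prediction.

    p: predicted probability of the positive (object) class.
    y: ground-truth label, 1 for object or 0 for background.
    alpha, gamma: assumed defaults, not values reported in the paper.
    """
    p = min(max(p, eps), 1.0 - eps)  # keep log() finite
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t) ** gamma shrinks the loss of well-classified examples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# A confident correct prediction contributes far less than a confident miss.
print("easy positive:", focal_loss(0.95, 1))
print("hard positive:", focal_loss(0.05, 1))
```

With `gamma=0` the modulating factor disappears and the expression reduces to alpha-weighted cross-entropy, which is a quick way to sanity-check an implementation.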

Overall, the results in Table 8 clearly demonstrate the superiority of our proposed method over existing methods, providing a robust solution for vehicle detection and classification in UAV-based surveillance systems.

6 Discussion/research limitation

Our proposed model is an efficient solution for traffic monitoring based on aerial images. Object detection is one of the most difficult problems when working with high-definition aerial images. To obtain good results, we devised a technique that combines contour-based semantic segmentation with CNN classification. However, the proposed technique has significant limitations. First and foremost, the system has only been evaluated on RGB images acquired during the daytime. Evaluating it on video or image datasets captured in low-light conditions or at night would further validate the technique, as other researchers have already succeeded with such datasets. Furthermore, our segmentation and detection system has problems with partial or complete occlusions, tree-covered roadways, and similar-looking objects (Figure 12).

Figure 12. Limitations of our proposed model: (A) vehicle not detected due to occlusion (car covered by a tree); (B) car not fully in frame.

7 Conclusion

This study presents a novel method for detecting and classifying vehicles in aerial image sequences. The model starts by applying Histogram Equalization and noise reduction techniques to pre-process aerial images. After the image is segmented using Fuzzy C-Means (FCM) and contour-based segmentation (CBS) to reduce image complexity, EfficientDet is used for vehicle detection. Oriented FAST and Rotated BRIEF (ORB), Scale-Invariant Feature Transform (SIFT), and Accelerated-KAZE (AKAZE) are used to extract features from the detected vehicles. Convolutional Neural Networks (CNNs) are used in the classification phase to classify the vehicles. The proposed methodology obtains promising results: 96.6% accuracy on the UAVID dataset and 97% accuracy on the VAID dataset. Future enhancements could involve incorporating additional features to boost classification accuracy and training with a broader range of vehicle types. Moving forward, our aim is to explore reliable methodologies and integrate more features into the system to enhance its efficacy, with the aspiration that it becomes an industry standard across a spectrum of traffic scenarios.
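As an illustration of the histogram-equalization pre-processing step named above, the following pure-Python sketch applies the textbook global algorithm to a tiny grayscale image. It is not the authors' implementation, which the paper does not show; the function name `equalize_histogram`, the list-of-rows image format, and the sample pixel values are all hypothetical.

```python
def equalize_histogram(image, levels=256):
    """Equalize a grayscale image given as a list of rows of ints in [0, levels)."""
    flat = [pixel for row in image for pixel in row]
    if not flat:
        return []
    hist = [0] * levels
    for pixel in flat:
        hist[pixel] += 1
    # Cumulative distribution of intensities.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(flat)
    if total == cdf_min:
        # Constant image: there is no contrast to stretch.
        return [row[:] for row in image]
    # Map each intensity so that the output CDF is approximately linear.
    lut = [
        round(max(c - cdf_min, 0) * (levels - 1) / (total - cdf_min))
        for c in cdf
    ]
    return [[lut[pixel] for pixel in row] for row in image]


# A low-contrast patch: the values are clustered in a narrow band.
patch = [
    [100, 100, 101],
    [101, 102, 103],
]
for row in equalize_histogram(patch):
    print(row)
```

In practice an image library routine would normally be used instead; the sketch only shows why the step spreads a narrow band of intensities across the full range before segmentation.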

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: https://www.kaggle.com/datasets/dasmehdixtr/uavid-v1.

Author contributions

MY: Data curation, Investigation, Writing – original draft. MH: Formal analysis, Software, Writing – original draft. NA: Investigation, Resources, Writing – review & editing. TS: Conceptualization, Data curation, Writing – review & editing. BA: Conceptualization, Methodology, Writing – review & editing. HR: Methodology, Writing – review & editing. AA: Methodology, Software, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was supported by the Deanship of Scientific Research at Najran University, under the Research Group Funding program grant code (NU/PG/SERC/13/30), and by Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2024R440), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Acknowledgments

The authors acknowledge Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2024R440), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abbas, Y., and Jalal, A. (2024). “Drone-based human action recognition for surveillance: a multi-feature approach” in 2024 International conference on Engineering & Computing Technologies (ICECT) (Islamabad, Pakistan: IEEE), 1–6.

Abbasi, A. A., and Jalal, A. (2024). Data driven approach to leaf recognition: logistic regression for smart agriculture, International conference on advancements in computational Sciences (ICACS), Lahore, Pakistan, pp. 1–7

Afsar, M. M., Saqib, S., Ghadi, Y. Y., Alsuhibany, S. A., Jalal, A., and Park, J. (2022). Body worn sensors for health gaming and e-learning in virtual reality. CMC 73:3. doi: 10.32604/cmc.2022.028618

Ahmed, M. W., and Jalal, A. (2024a). “Dynamic adoptive Gaussian mixture model for multi-object detection over natural scenes” in 2024 5th International Conference on Advancements in Computational Sciences (ICACS). (Lahore, Pakistan: IEEE), 1–8.

Ahmed, M. W., and Jalal, A. (2024b). “Robust object recognition with genetic algorithm and composite saliency map” in 5th international conference on advancements in computational Sciences (ICACS) (Lahore, Pakistan: IEEE), 1–7.

Al Mudawi, N., Ansar, H., Alazeb, A., Aljuaid, H., AlQahtani, Y., Algarni, A., et al. (2024). Innovative healthcare solutions: robust hand gesture recognition of daily life routines using 1D CNN. Front. Bioeng. Biotechnol. 12:1401803. doi: 10.3389/fbioe.2024.1401803

Al Mudawi, N., Tayyab, M., Ahmed, M. W., and Jalal, A. (2024). Machine learning based on body points estimation for sports event recognition, IEEE international conference on autonomous robot systems and competitions (ICARSC). Paredes de Coura, Portugal: IEEE, 120–125.

Alarfaj, M., Pervaiz, M., Ghadi, Y. Y., Al Shloul, T., Alsuhibany, S. A., Jalal, A., et al. (2023). Automatic anomaly monitoring in public surveillance areas. Intell. Autom. Soft Comput. 35, 2655–2671. doi: 10.32604/iasc.2023.027205

Alazeb, A., Bisma, C., Naif Al, M., Yahya, A., Alonazi, M., Hanan, A., et al. (2024). Remote intelligent perception system for multi-objects detection. Front. Neurorobot. 18:1398703. doi: 10.3389/fnbot.2024.1398703

Ali, S., Hanzla, M., and Rafique, A. A. (2022) Vehicle detection and tracking from UAV imagery via Cascade classifier, In 24th international multitopic conference (INMIC) pp. 1–6

Almujally, N. A., Khan, D., Al Mudawi, N., Alonazi, M., Alazeb, A., Algarni, A., et al. (2024). Biosensor-driven IoT wearables for accurate body motion tracking and localization. Sensors 24:3032. doi: 10.3390/s24103032

Alshehri, M. S., Yusuf, M. O., and Hanzla, M. (2024). Unmanned aerial vehicle detection and tracking using image segmentation and Bayesian filtering, In 4th interdisciplinary conference on electrics and computer (INTCEC), 2024, pp. 1–6

An, J., Choi, B., Kim, H., and Kim, E. (2019). A new contour-based approach to moving object detection and tracking using a low-end three-dimensional laser scanner. IEEE Trans. Veh. Technol. 68, 7392–7405. doi: 10.1109/TVT.2019.2924268

Ansar, H., Ksibi, A., Jalal, A., Shorfuzzaman, M., Alsufyani, A., Alsuhibany, S. A., et al. (2022). Dynamic hand gesture recognition for smart Lifecare routines via K-Ary tree hashing classifier. Appl. Sci. 12:6481. doi: 10.3390/app12136481

Bai, L., Han, P., Wang, J., and Wang, J. (2024). Throughput maximization for multipath secure transmission in wireless ad-hoc networks. IEEE Trans. Commun. :1. doi: 10.1109/TCOMM.2024.3409539

Cai, D., Li, R., Hu, Z., Lu, J., Li, S., and Zhao, Y. (2024). A comprehensive overview of core modules in visual SLAM framework. Neurocomputing 590:127760. doi: 10.1016/j.neucom.2024.127760

Chen, J., Song, Y., Li, D., Lin, X., Zhou, S., and Xu, W. (2024). Specular removal of industrial metal objects without changing lighting configuration. IEEE Trans. Industr. Inform. 20, 3144–3153. doi: 10.1109/TII.2023.3297613

Chen, J., Wang, Q., Cheng, H. H., Peng, W., and Xu, W. (2022a). A review of vision-based traffic semantic understanding in ITSs. IEEE Trans. Intell. Transp. Syst. 23, 19954–19979. doi: 10.1109/TITS.2022.3182410

Chen, J., Wang, Q., Peng, W., Xu, H., Li, X., and Xu, W. (2022b). Disparity-based multiscale fusion network for transportation detection. IEEE Trans. Intell. Transp. Syst. 23, 18855–18863. doi: 10.1109/TITS.2022.3161977

Chen, J., Xu, M., Xu, W., Li, D., Peng, W., and Xu, H. (2023). A flow feedback traffic prediction based on visual quantified features. IEEE Trans. Intell. Transp. Syst. 24, 10067–10075. doi: 10.1109/TITS.2023.3269794

Chien, H. -J., Chuang, C. -C., Chen, C. -Y., and Klette, R. (2016). When to use what feature? SIFT, SURF, ORB, or A-KAZE features for monocular visual odometry, international conference on image and vision computing New Zealand (IVCNZ). Palmerston North, New Zealand: IEEE, 1–6.

Chughtai, B. R., and Jalal, A. (2023). “Object detection and segmentation for scene understanding via random Forest” in International conference on advancements in computational Sciences (ICACS) (Lahore, Pakistan: IEEE), 1–6.

Chughtai, B. R., and Jalal, A. (2024). “Traffic surveillance system: robust multiclass vehicle detection and classification” in International conference on advancements in computational Sciences (ICACS) (Lahore, Pakistan: IEEE), 1–8.

Ding, Y., Zhang, W., Zhou, X., Liao, Q., Luo, Q., and Ni, L. M. (2021). FraudTrip: taxi fraudulent trip detection from corresponding trajectories. IEEE Internet Things J. 8, 12505–12517. doi: 10.1109/JIOT.2020.3019398

du Terrail, J.O., and Jurie, F., (2018). Faster RER-CNN: application to the detection of vehicles in aerial images. In Proceedings of the 24th international conference on pattern recognition (ICPR 2018), pp. 2092–2097

Gong, H., Zhang, Y., Xu, K., and Liu, F. (2018). A multitask cascaded convolutional neural network based on full frame histogram equalization for vehicle detection. Chinese Automation Congress. 2848–2853. doi: 10.1109/CAC.2018.8623118

Guo, S., Wang, S., Yang, Z., Wang, L., Zhang, H., Guo, P., et al. (2022). A review of deep learning-based visual multi-object tracking algorithms for autonomous driving. Appl. Sci. 12:10741. doi: 10.3390/app122110741

Hanzla, M., Ali, S., and Jalal, A. (2024a). Smart traffic monitoring through drone images via Yolov5 and Kalman filter, In 5th international conference on advancements in computational Sciences (ICACS), Lahore, Pakistan: IEEE, pp. 1–8.

Hanzla, M., Yusuf, M. O., Al Mudawi, N., Sadiq, T., Almujally, N. A., Rahman, H., et al. (2024b). Vehicle recognition pipeline via DeepSort on aerial image datasets. Front. Neurorobot. 18:1430155. doi: 10.3389/fnbot.2024.1430155

Hanzla, M., Yusuf, M. O., and Jalal, A. (2024c). “Vehicle surveillance using U-NET segmentation and DeepSORT over aerial images” in International conference on Engineering & Computing Technologies (ICECT) (Islamabad, Pakistan), 1–6.

Hashmi, S. J., Alabdullah, B., Al Mudawi, N., Algarni, A., Jalal, A., and Liu, H. (2024). Enhanced data mining and visualization of sensory-graph-Modeled datasets through summarization. Sensors 24:4554. doi: 10.3390/s24144554

He, H., Li, X., Chen, P., Chen, J., Liu, M., and Wu, L. (2024). Efficiently localizing system anomalies for cloud infrastructures: a novel dynamic graph transformer based parallel framework. J. Cloud Comput. 13:115. doi: 10.1186/s13677-024-00677-x

Hou, S., Fan, L., Zhang, F., and Liu, B. (2023). An improved lightweight YOLOv5 for remote sensing images. Remote Sens. 15.

Hou, X., Xin, L., Fu, Y., Na, Z., Gao, G., Liu, Y., et al. (2023). A self-powered biomimetic mouse whisker sensor (BMWS) aiming at terrestrial and space objects perception. Nano Energy 118:109034. doi: 10.1016/j.nanoen.2023.109034

Huang, D., Zhang, Z., Fang, X., He, M., Lai, H., and Mi, B. (2023). STIF: a spatial–temporal integrated framework for end-to-end Micro-UAV trajectory tracking and prediction with 4-D MIMO radar. IEEE Internet Things J. 10, 18821–18836. doi: 10.1109/JIOT.2023.3244655

Jahan, N., Islam, S., and Foysal, M. F. A., (2020). Real-time vehicle classification using CNN, In 11th international conference on computing, communication and networking technologies (ICCCNT), Kharagpur, India, pp. 1–6

Javadi, S., Rameez, M., Dahl, M., and Pettersson, M. I. (2018). Vehicle classification based on multiple fuzzy c-means clustering using dimensions and speed features. Procedia Comput. Sci. 126, 1344–1350. doi: 10.1016/j.procs.2018.08.085

Javed Mehedi Shamrat, F. M., Chakraborty, S., Afrin, S., Moharram, M. S., Amina, M., and Roy, T. (2022). A model based on convolutional neural network (CNN) for vehicle classification. Congress on intelligent systems: Proceedings of CIS 2021. Singapore: Springer Nature Singapore.

Jin, S., Wang, X., and Meng, Q. (2024). Spatial memory-augmented visual navigation based on hierarchical deep reinforcement learning in unknown environments. Knowl.-Based Syst. 285:111358. doi: 10.1016/j.knosys.2023.111358

Kamal, S., and Jalal, A. (2024). “Multi-feature descriptors for human interaction recognition in outdoor environments” in International conference on Engineering & Computing Technologies (ICECT) (Islamabad, Pakistan: IEEE), 1–6.

Khan, D., Al Mudawi, N., Abdelhaq, M., Alazeb, A., Alotaibi, S. S., Algarni, A., et al. (2024b). A wearable inertial sensor approach for locomotion and localization recognition on physical activity. Sensors 24:735. doi: 10.3390/s24030735
