In recent years, the integration of neural network models into autonomous systems has revolutionized fields such as robotics and computer vision, enabling machines to perform complex tasks in dynamic environments (Gan et al., 2024; Saleem et al., 2023; Babaee Khobdeh et al., 2021). One of the most promising applications of these advances is sports analytics, where intelligent systems can autonomously monitor, analyze, and interpret athlete movements. Such systems not only provide precise technical guidance to athletes, coaches, and analysts, but also help referees make fairer and more accurate decisions during matches. The growing field of autonomous sports analysis leverages machine learning and neural network models to optimize training, improve competition outcomes, and enhance the overall fan experience. Among the sports that benefit from such analysis, basketball stands out as one of the most popular and globally influential. Accurate recognition of basketball players' movements during training and games is critical for coaches, enabling them to design targeted training plans and strategies based on objective data rather than subjective observation. Traditionally, coaches have relied on their experience and on time-intensive manual analysis to evaluate player performance, which is inefficient and prone to inaccuracy (Wei et al., 2016). Using computer vision to recognize player movements automatically addresses these challenges and provides a foundation for advanced applications such as automatic detection of key game events, intelligent analysis of basketball tactics, and automatic generation of game highlights. These neural-network-driven innovations can significantly raise both the technical level of athletes and the overall spectacle of the game (Li and Zhang, 2019). 
Additionally, these advancements in action recognition hold great potential for broader applications in autonomous robotic systems, where accurate, real-time recognition of human actions is essential for intelligent decision-making and interaction in complex, unstructured environments (Jain et al., 2023; Wang et al., 2022).

Basketball action recognition techniques follow two main routes: recognition from inertial sensor data, and recognition from features extracted from videos or images. Li and Gu (2021) used inertial sensors to measure the acceleration and angular velocity of the arm to help recognize basketball actions. Gun (2021) proposed integrating a Field Programmable Gate Array (FPGA) into a two-stream network to find the optimal region for recognizing basketball actions. These methods require athletes to wear specific sensors that collect data and send it to a processing device for action analysis, and this equipment dependency limits their general applicability. Liu and Wang (2023) used wearable sensors to capture user motion data and then analyzed and recognized user behavior with an optimized SVM model. Liu and Liu (2023) used a wearable device to collect three-axis acceleration and three-axis angular velocity data from basketball players for action recognition. Jiang and Zhang (2023) proposed a structured scheme for classifying motion postures, built an inertial-sensor-based data acquisition module on top of it, improved a convolutional neural network using principal component analysis, and finally applied the improved algorithm to identify basketball poses.

Feature-extraction-based methods take videos or images captured by a camera, extract hidden features from them, and classify those features with a neural network. This type of method is currently the most widely used in action recognition. Researchers have extended 2D convolutional networks to capture features along the time dimension, e.g., C3D (Tran et al., 2015), TSN (Wang et al., 2016), I3D (Carreira and Zisserman, 2017), P3D (Qiu et al., 2017), Non-local (Wang et al., 2018), R(2+1)D (Tran et al., 2018), R3D (Hara et al., 2018), and SlowFast (Feichtenhofer et al., 2019). DeepVideo (Karpathy et al., 2014) was one of the first attempts to apply convolutional neural networks to video. C3D (Convolutional 3D) is one of the most popular networks for extracting video features because, unlike 2D convolution, it extracts features along the temporal dimension in addition to the spatial dimensions (Fan et al., 2016; Li et al., 2017; Xu et al., 2017; de Melo et al., 2019; Yang et al., 2021). Donahue et al. (2015) experimentally explored the most suitable C3D convolution kernel size for action recognition. To further improve representation and generalization ability, researchers subsequently proposed the 3D residual convolutional network (Tran et al., 2017) and the pseudo-3D residual network (Qiu et al., 2017). Wu et al. (2020) proposed a dual-stream 3D convolutional network that uses optical flow information to obtain global and local action features. Gu et al. (2020) introduced the Navigator-Teacher-Scrutiniser Network into the dual-stream network to focus on the most informative regions for fine-grained action recognition. More recently, the attention-based Transformer proposed by Vaswani et al. (2017) has become one of the most influential models. 
The Transformer's multi-head self-attention layer computes a representation of a sequence by relating each word in the sequence to every other word in the same sequence. It has shown better representational performance than convolutional and recurrent operations while requiring fewer computational resources. The success of the Transformer inspired the computer vision community to apply it to video tasks, as in ViViT (Arnab et al., 2021), Video Swin Transformer (Liu et al., 2022), and TimeSformer (Bertasius et al., 2021). Moreover, Peng et al. (2020) proposed a spatial-temporal GCN (ST-GCN) architecture for 3D skeleton-based action recognition that better models the latent anatomical structure of skeleton data. Huang et al. (2023) used ensemble models based on a hypergraph-convolution Transformer for MiG classification from human skeleton data; by enhancing the attention mechanism and fusing multiple models, the method effectively extracts the subtle dynamic features that distinguish different gestures.
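To make the idea of relating every element of a sequence to every other element concrete, the following is a minimal single-head scaled dot-product self-attention sketch in NumPy, following the formulation of Vaswani et al. (2017). The function names and the randomly initialized projection matrices `w_q`, `w_k`, and `w_v` are illustrative assumptions; multi-head attention runs several such heads in parallel and concatenates their outputs.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: (L, d_model) sequence of L token embeddings.
    w_q, w_k, w_v: (d_model, d_k) projection matrices (hypothetical, random here).
    Returns the (L, d_k) attended output and the (L, L) attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])   # similarity of every position to every other position
    weights = softmax(scores, axis=-1)        # each row is a distribution over positions
    return weights @ v, weights

rng = np.random.default_rng(0)
x = rng.standard_normal((5, 16))              # 5 tokens with 16-dim embeddings
w_q, w_k, w_v = (rng.standard_normal((16, 8)) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)                  # (5, 8) (5, 5)
```

Each output row is a weighted average of all value vectors, which is what lets self-attention model long-range dependencies in a single layer.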

Although deep learning-based algorithms can recognize basketball actions, they face specific challenges such as complex and highly similar backgrounds, subtle differences between actions within frames, and inconsistent lighting conditions. These factors significantly reduce the accuracy and robustness of action recognition in basketball videos. Current popular methods cannot extract sufficient features and fail to capture long-term temporal information, which results in low accuracy when recognizing basketball players' actions. To address these issues, we propose the Adaptive Context-Aware Network (ACA-Net), a deep neural network for basketball action recognition. The main contributions of this paper are as follows:

1. We propose an adaptive context-aware network (ACA-Net) for basketball action recognition. By aggregating long- and short-term temporal features with spatial-channel features, the network effectively recognizes basketball actions under the guidance of video contextual information.

2. We propose a long short-term adaptive learning mechanism to address the difficulty of distinguishing similar basketball actions from short-term temporal information alone. It first filters features through a convolutional gating mechanism, then generates video-adaptive dynamic convolution kernels to aggregate temporal information and capture long-term dependencies, and finally generates importance weights from short-term temporal information to enhance the temporal feature representation. In this way, it adaptively integrates contextual information from different time scales, strengthening the model's ability to capture long-term temporal information.

3. We propose a triplet spatial-channel interaction strategy that, in three separate branches, models the cross-dimensional interactions between the channel dimension and each spatial dimension as well as the interaction between the two spatial dimensions; the branches complement one another to improve the network's feature representation. As a result, it efficiently extracts more discriminative feature representations from basketball videos that contain many similar backgrounds.

The rest of the paper is organized as follows. Section 2 introduces the adaptive context-aware network, the long short-term adaptive learning mechanism, and the triplet spatial-channel interaction strategy. Section 3 describes the datasets, evaluation metrics, and experiments that verify the effectiveness of the proposed methods. Sections 4 and 5 present the discussion and conclusions of this study, respectively.

2 Methodology

2.1 Overview of the adaptive context-aware network

As discussed in Section 1, the complexity of the background and the similarity of the actions make it difficult to model long-term dependencies in basketball videos and to learn discriminative feature representations. We therefore address these problems by introducing a long short-term adaptive (LSTA) module and a triplet spatial-channel interaction (TSCI) module. Both modules can easily be integrated into existing 2D CNNs, such as ResNet (He et al., 2016), to form a network architecture that processes basketball videos efficiently. We first give an overview of ACA-Net and then describe the technical details of the proposed modules.

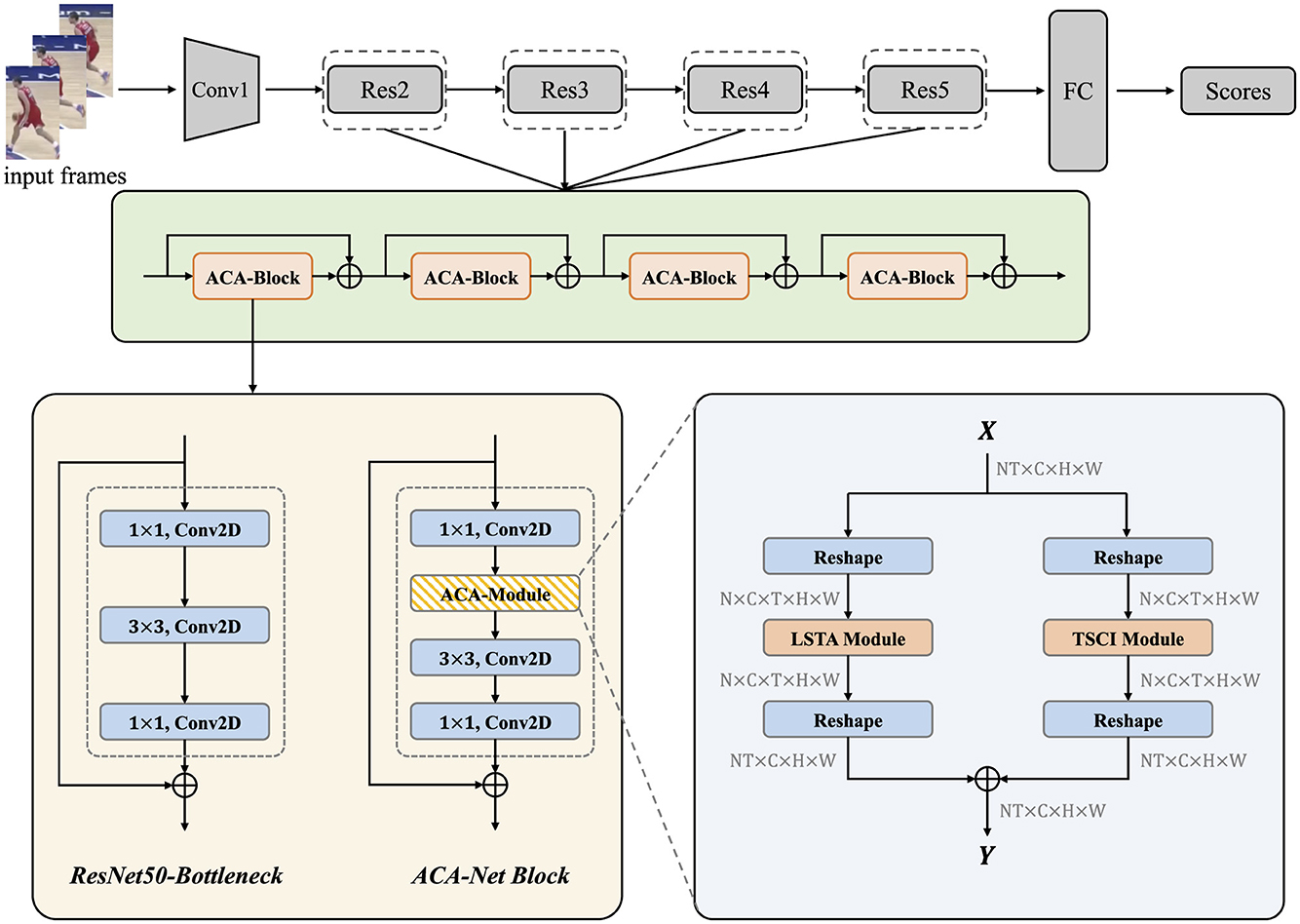

As shown in Figure 1, we adopt ResNet50 as the backbone and insert the Adaptive Context-Aware Module (ACA-Module) after the first 1 × 1 convolution of each bottleneck in ResNet50. We call the modified bottleneck an ACA-Block. Each ACA-Module in an ACA-Block contains an LSTA module and a TSCI module that extract features from different aspects of the video: the LSTA module strengthens the model's ability to extract long-term dependency information from the video, and the TSCI module improves its ability to extract discriminative features through cross-dimensional interactions between the spatial and channel dimensions. Next, we introduce the technical details of the LSTA module and the TSCI module.

Figure 1. The overall architecture of ACA-Net. The vanilla bottlenecks in ResNet50 are replaced with ACA-Net Blocks to instantiate ACA-Net. The lower right shows the complete workflow of the ACA-Module. The tensor shape after each step is annotated. ⊕ denotes element-wise addition.

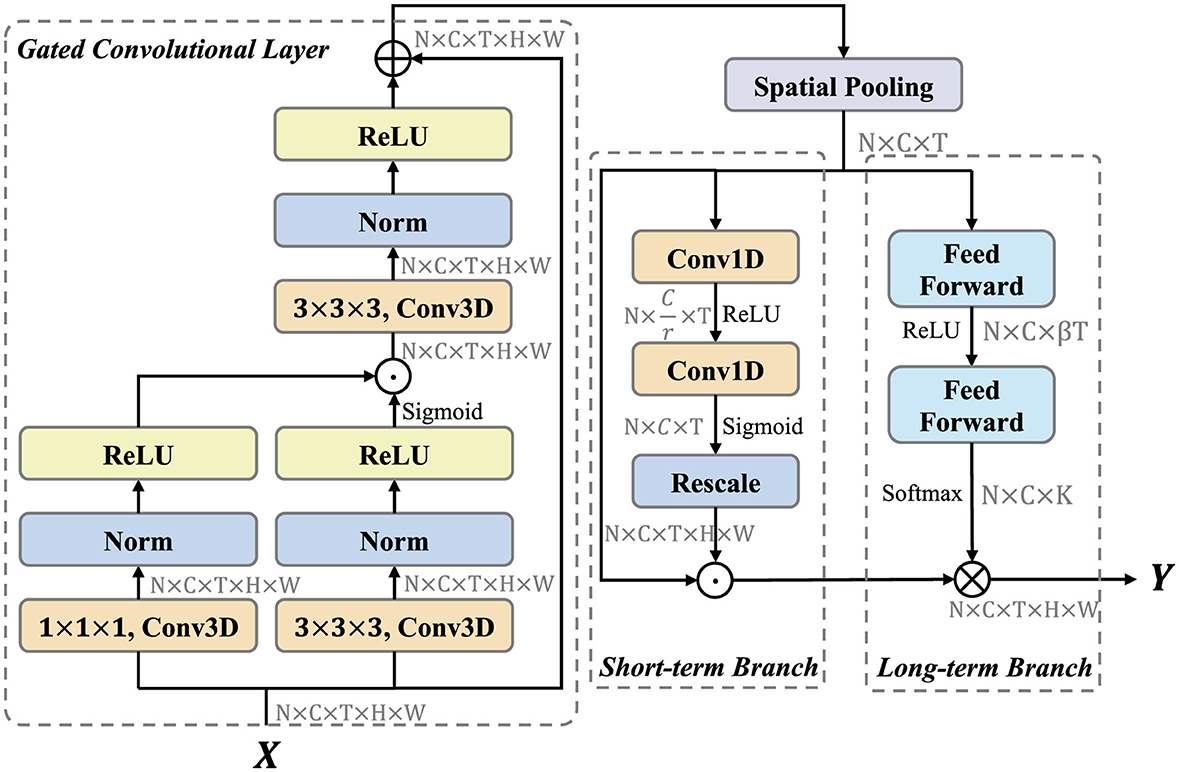

2.2 LSTA module

The LSTA module is designed to adaptively learn the long- and short-term information of basketball videos. As shown in Figure 2, the LSTA module consists of three main parts: a gated convolutional layer, a short-term branch, and a long-term branch.

Figure 2. LSTA module architecture. The LSTA module contains three parts: a gated convolutional layer, a short-term branch, and a long-term branch. The tensor shape after each step is annotated. ⊕ denotes element-wise addition, ⊗ represents the convolution operation, and ⊙ is element-wise multiplication.

Formally, let $X \in \mathbb{R}^{C\times T\times H\times W}$ denote the input feature map, where $C$ is the number of channels, $T$ is the temporal dimension, and $H$ and $W$ are the spatial dimensions. For convenience, the batch dimension $N$ is omitted here and in the following shape descriptions. First, we use a gated convolutional layer to enhance the feature representation. The feature map $X$ is fed into two convolution sublayers, each consisting of a convolution, an instance normalization layer (Ulyanov et al., 2016), and a ReLU layer (Glorot et al., 2011), with kernel sizes of 3 × 3 × 3 and 1 × 1 × 1, respectively. The two resulting feature maps are then multiplied element-wise to control the flow of information, similar to a gating mechanism (Liu et al., 2021). This process can be formalized as

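$$G = X \oplus \mathrm{Conv3D}_{3\times3\times3}\big(\mathrm{Conv3D}_{3\times3\times3}(X) \odot \mathrm{Conv3D}_{1\times1\times1}(X)\big) \tag{1}$$

where $X$ denotes the input feature map and $\mathrm{Conv3D}_{k\times k\times k}$ denotes a convolution block with kernel size $k \times k \times k$. The output $G \in \mathbb{R}^{C\times T\times H\times W}$ is then fed into the short-term and long-term branches. For efficiency, these two branches focus only on temporal modeling, so we first compress the feature map with global spatial average pooling: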
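The gated convolutional layer of Eq. (1) can be sketched in NumPy as follows, with the batch dimension omitted as in the text. This is an illustrative reading rather than the authors' implementation: we assume stride-1, zero-padded ("same") convolutions without bias so that shapes are preserved, instance normalization without affine parameters, and that the outer 3 × 3 × 3 convolution in Eq. (1) uses the same Conv3D/InstanceNorm/ReLU block as the inner sublayers. The helper names and random weights are hypothetical.

```python
import numpy as np

def conv3d_same(x, w):
    """Stride-1, zero-padded 3D convolution (cross-correlation, no bias).
    x: (C_in, T, H, W); w: (C_out, C_in, k, k, k) with odd k."""
    c_out, _, k, _, _ = w.shape
    p = k // 2
    _, t, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (p, p), (p, p), (p, p)))
    y = np.zeros((c_out, t, h, wd))
    # Accumulate one kernel offset at a time; each offset is a channel-mixing product.
    for dt in range(k):
        for dh in range(k):
            for dw in range(k):
                patch = xp[:, dt:dt + t, dh:dh + h, dw:dw + wd]
                y += np.einsum('oc,cthw->othw', w[:, :, dt, dh, dw], patch)
    return y

def instance_norm(x, eps=1e-5):
    # Normalize each channel over its own (T, H, W) volume; no learnable scale/shift.
    mean = x.mean(axis=(1, 2, 3), keepdims=True)
    var = x.var(axis=(1, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def conv_block(x, w):
    # Conv3D -> InstanceNorm -> ReLU, the sublayer described in the text.
    return np.maximum(instance_norm(conv3d_same(x, w)), 0.0)

def gated_conv_layer(x, w_a, w_b, w_out):
    """Eq. (1): G = X + Block_3x3x3(Block_3x3x3(X) * Block_1x1x1(X))."""
    gate = conv_block(x, w_a) * conv_block(x, w_b)   # element-wise gating of the two sublayers
    return x + conv_block(gate, w_out)                # residual (element-wise) addition

rng = np.random.default_rng(0)
C, T, H, W = 4, 8, 6, 5
x = rng.standard_normal((C, T, H, W))
w_a = rng.standard_normal((C, C, 3, 3, 3)) * 0.1    # 3x3x3 sublayer (hypothetical weights)
w_b = rng.standard_normal((C, C, 1, 1, 1)) * 0.1    # 1x1x1 sublayer
w_out = rng.standard_normal((C, C, 3, 3, 3)) * 0.1  # outer 3x3x3 block
g = gated_conv_layer(x, w_a, w_b, w_out)
print(g.shape)  # (4, 8, 6, 5)
```

Because both the gate and the residual path preserve the $C \times T \times H \times W$ shape, the layer can be dropped into a bottleneck without changing the surrounding tensor shapes.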

$$P_{c,t} = \frac{1}{H \times W}\sum_{i,j} G_{c,t,j,i} \tag{2}$$

where $c$ and $t$ index the channel and time dimensions, and $j$ and $i$ index the height and width dimensions, respectively. $P \in \mathbb{R}^{C\times T}$ aggregates the spatial information of $G \in \mathbb{R}^{C\times T\times H\times W}$. The short-term branch aims to extract short-term information and generate location-related importance weights. To control model complexity, the first Conv1D operation reduces the number of channels from $C$ to $C/r$. The second Conv1D operation restores the number of channels to $C$ and, through the sigmoid function, yields the importance weights $\hat{V} \in \mathbb{R}^{C\times T}$. This process can be formulated as follows:

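$$V = F_{\mathrm{rescale}}\Big(\sigma\big(\mathrm{Conv1D}(\delta(\mathrm{Conv1D}(P, C/r)), C)\big)\Big) \tag{3}$$

where $P$ is the feature map produced by global spatial average pooling, the second argument of each Conv1D is its number of output channels, and $\delta$ and $\sigma$ denote the ReLU and sigmoid activation functions, respectively. $F_{\mathrm{rescale}}$ rescales $\hat{V} \in \mathbb{R}^{C\times T}$ to $V \in \mathbb{R}^{C\times T\times H\times W}$ by replication along the spatial dimensions. Finally, the feature map $G$ is multiplied element-wise with the importance weights $V$ to produce the output $Z$ of the short-term branch.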
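Eqs. (2) and (3) can be sketched in NumPy as below. Several details are our assumptions and are not stated in the text: both Conv1D layers use a temporal kernel of size 3 with zero padding and no bias, the reduction ratio is $r = 4$, and the rescaled weights $V$ re-weight the gated feature map $G$ (the only tensor whose shape matches $V \in \mathbb{R}^{C\times T\times H\times W}$). Function names and weights are hypothetical.

```python
import numpy as np

def conv1d_same(x, w):
    """Stride-1, zero-padded 1D convolution over time (no bias).
    x: (C_in, T); w: (C_out, C_in, k) with odd k (k = 3 is an assumption)."""
    c_out, _, k = w.shape
    p = k // 2
    t = x.shape[1]
    xp = np.pad(x, ((0, 0), (p, p)))
    y = np.zeros((c_out, t))
    for dk in range(k):
        y += w[:, :, dk] @ xp[:, dk:dk + t]   # (C_out, C_in) @ (C_in, T)
    return y

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def short_term_branch(g, w1, w2):
    """g: (C, T, H, W) gated features; w1: (C//r, C, k); w2: (C, C//r, k).
    Returns the re-weighted features Z and the pooled descriptor P."""
    p = g.mean(axis=(2, 3))                                      # Eq. (2): spatial average pooling -> (C, T)
    v_hat = sigmoid(conv1d_same(np.maximum(conv1d_same(p, w1), 0.0), w2))  # Eq. (3) -> (C, T)
    v = np.broadcast_to(v_hat[:, :, None, None], g.shape)        # F_rescale: replicate over H and W
    return g * v, p                                              # assumed: Z = G (element-wise) V

rng = np.random.default_rng(1)
C, T, H, W, r = 8, 8, 4, 4, 4
g = rng.standard_normal((C, T, H, W))
w1 = rng.standard_normal((C // r, C, 3)) * 0.1   # reduce C -> C/r
w2 = rng.standard_normal((C, C // r, 3)) * 0.1   # restore C/r -> C
z, p = short_term_branch(g, w1, w2)
print(z.shape, p.shape)  # (8, 8, 4, 4) (8, 8)
```

Since the sigmoid keeps every weight in (0, 1), the branch can only attenuate features, emphasizing the time steps it scores as important relative to the rest.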

The long-term branch is mainly responsible for long-range temporal modeling and for capturing long-range dependencies in videos. To accommodate the diversity of temporal information across videos, we generate dynamic temporal aggregation kernels and apply them in a convolutional manner. To simplify kernel generation and maintain high inference efficiency, we generate the temporal convolution kernels channel by channel. Accordingly, the generated adaptive kernels model only temporal relationships, and they are generated as follows:

$$\Theta_c = \mathrm{softmax}\big(\mathcal{F}(W_2, \delta(\mathcal{F}(W_1, P)))\big) \tag{5}$$

where $\Theta_c \in \mathbb{R}^{K}$ is the adaptive convolution kernel for the $c$-th channel, $K$ is the kernel size, $\delta$ denotes the ReLU activation function, $\mathcal{F}$ is a fully connected feed-forward layer, and $W_1$ and $W_2$ are learnable parameters. Next, the generated adaptive kernels $\Theta$ learn the temporal structure between video frames through convolution, performing adaptive temporal aggregation:

$$Y = \Theta \otimes Z, \qquad Y_{c,t,j,i} = \sum_{k=1}^{K} \Theta_{c,k} \cdot Z_{c,t+k,j,i} \tag{6}$$

where $\otimes$ denotes channel-wise temporal convolution and $Z$ is the feature map output by the short-term branch.
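A minimal NumPy sketch of Eqs. (5) and (6) is given below. We assume, in the style of temporal adaptive kernel generation, that the two fully connected layers are shared across channels and act on each channel's length-$T$ pooled descriptor (row $c$ of $P$), that $K$ is odd, and that the temporal offsets are centered on frame $t$ with zero padding at the clip boundaries; Eq. (6) writes the offset as $t + k$, so the exact alignment is an assumption. The hidden width of 16 and all weights are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def generate_kernels(p, w1, w2):
    """Eq. (5): one adaptive temporal kernel per channel.
    p: (C, T) pooled descriptor; w1: (T, hidden); w2: (hidden, K).
    The same FC layers are applied to every row (channel) of p."""
    hidden = np.maximum(p @ w1, 0.0)       # F(W1, .) followed by ReLU
    return softmax(hidden @ w2, axis=-1)   # F(W2, .) then softmax -> (C, K); rows sum to 1

def temporal_aggregate(z, theta):
    """Eq. (6): channel-wise temporal convolution of z (C, T, H, W) with theta (C, K).
    Offsets are centered on frame t (assumption); frames outside the clip count as zero."""
    t = z.shape[1]
    k = theta.shape[1]
    p = k // 2
    zp = np.pad(z, ((0, 0), (p, p), (0, 0), (0, 0)))
    y = np.zeros_like(z)
    for dk in range(k):
        # zp[:, dk:dk + t] holds frame t + dk - p; the weight is shared over H and W.
        y += theta[:, dk, None, None, None] * zp[:, dk:dk + t]
    return y

rng = np.random.default_rng(2)
C, T, H, W, K = 8, 8, 4, 4, 3
z = rng.standard_normal((C, T, H, W))
p = z.mean(axis=(2, 3))
theta = generate_kernels(p, rng.standard_normal((T, 16)) * 0.1,
                         rng.standard_normal((16, K)) * 0.1)
y = temporal_aggregate(z, theta)
print(theta.shape, y.shape)  # (8, 3) (8, 8, 4, 4)
```

The softmax makes each kernel a convex combination of neighboring frames, so the aggregation is a video-dependent temporal smoothing rather than an unconstrained filter, which helps keep training stable.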

In summary, the LSTA module generates adaptive convolution kernels to aggregate the short-term and long-term temporal features of basketball videos while maintaining computational efficiency.

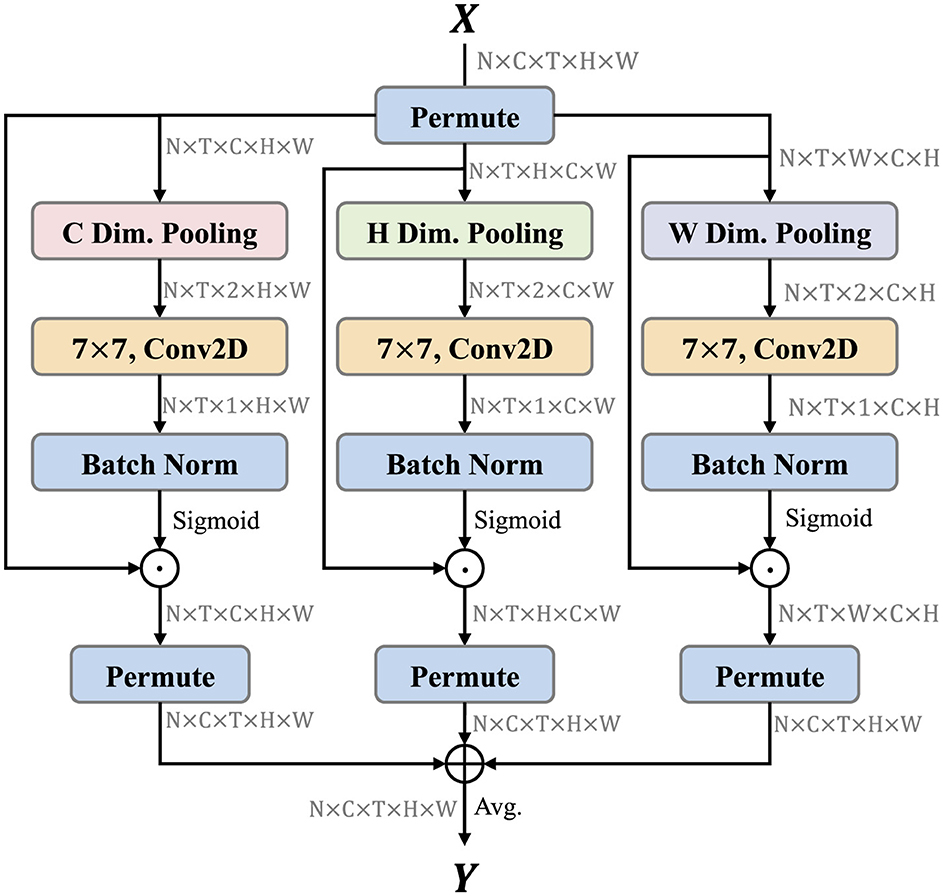

2.3 TSCI module

Triplet attention (Misra et al., 2021) is a lightweight yet effective attention mechanism for computer vision tasks such as image classification; it computes attention weights by capturing cross-dimension interactions with a three-branch structure. Inspired by this idea, we extend triplet attention to video and propose the TSCI module. The TSCI module builds inter-dimensional dependencies through a rotation operation followed by residual transformations, and it encodes inter-channel and spatial information with negligible computational overhead. The architecture of the TSCI module is shown in Figure 3. Its objective is to enhance the model's ability to extract discriminative features through cross-dimensional interactions among three pairs of dimensions: (C, H), (C, W), and (H, W).

Figure 3. TSCI module architecture. The TSCI module contains three branches that focus on different aspects of information. The tensor shape after each step is annotated. ⊕ denotes element-wise addition, and ⊙ is element-wise multiplication.

Formally, let $X \in \mathbb{R}^{C\times T\times H\times W}$ denote the input feature map. We first permute the spatial and channel dimensions. In the left branch, the permutation yields $X_C \in \mathbb{R}^{T\times C\times H\times W}$; global average pooling and global max pooling along $C$ then produce the feature map $X_C' \in \mathbb{R}^{T\times 2\times H\times W}$, which establishes the interaction between $H$ and $W$. Similarly, the middle branch yields $X_H \in \mathbb{R}^{T\times H\times C\times W}$, and pooling along $H$ produces $X_H' \in \mathbb{R}^{T\times 2\times C\times W}$, which establishes the interaction between $C$ and $W$. The right branch yields $X_W \in \mathbb{R}^{T\times W\times C\times H}$, and pooling along $W$ produces $X_W' \in \mathbb{R}^{T\times 2\times C\times H}$, which establishes the interaction between $C$ and $H$. The pooling process can be formulated as follows:

$$\text{Dimensional Pooling}(X) = \mathrm{Concat}\big[\mathrm{AvgPool3d}(X), \mathrm{MaxPool3d}(X)\big] \tag{7}$$

Second, we use the pooled features to generate attention maps. A two-dimensional convolution layer is applied to each of $X_C'$, $X_H'$, and $X_W'$, and the attention maps $w_C$, $w_H$, and $w_W$ are obtained through a batch normalization layer (Ioffe and Szegedy, 2015) and a sigmoid activation function. Each attention map is then multiplied element-wise with $X_C$, $X_H$, or $X_W$ in its own branch so that the network pays more attention to the regions of interest.

Finally, the output feature maps of the three branches are averaged to achieve the aggregation of features. The whole process can be represented as follows:

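$$Y = \mathrm{Avg}\Big(\overline{X_C \odot \sigma(\mathrm{Conv2D}(X_C'))} + \overline{X_H \odot \sigma(\mathrm{Conv2D}(X_H'))} + \overline{X_W \odot \sigma(\mathrm{Conv2D}(X_W'))}\Big) = \frac{1}{3}\Big(\overline{X_C \odot w_C} + \overline{X_H \odot w_H} + \overline{X_W \odot w_W}\Big) \tag{8}$$

where $w_C \in \mathbb{R}^{T\times 1\times H\times W}$, $w_H \in \mathbb{R}^{T\times 1\times C\times W}$, and $w_W \in \mathbb{R}^{T\times 1\times C\times H}$ denote the attention maps generated by the left, middle, and right branches, $\sigma$ denotes the sigmoid activation function, and $\odot$ is element-wise multiplication. $\overline{X_C \odot w_C}$, $\overline{X_H \odot w_H}$, and $\overline{X_W \odot w_W}$ denote applying the inverse dimensional permutation to $X_C \odot w_C$, $X_H \odot w_H$, and $X_W \odot w_W$, so that each recovers the original input shape $C\times T\times H\times W$. $Y \in \mathbb{R}^{C\times T\times H\times W}$ denotes the final output of the TSCI module.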
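The three-branch computation of Eqs. (7) and (8) can be sketched in NumPy as follows, with the batch dimension omitted. This sketch follows Eq. (8) and therefore omits the batch normalization layer mentioned in the text; the 7 × 7 convolution kernel is borrowed from triplet attention and is an assumption for TSCI, as are the zero padding, the absence of bias, and the hypothetical helper names and weights. The pooling in Eq. (7) is taken along the permuted leading dimension of each branch.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv2d_same(x, w):
    """Stride-1, zero-padded 2D convolution applied to every time step (no bias).
    x: (T, C_in, A, B); w: (C_out, C_in, k, k) with odd k."""
    _, _, k, _ = w.shape
    p = k // 2
    t, _, a, b = x.shape
    xp = np.pad(x, ((0, 0), (0, 0), (p, p), (p, p)))
    y = np.zeros((t, w.shape[0], a, b))
    for i in range(k):
        for j in range(k):
            y += np.einsum('oc,tcab->toab', w[:, :, i, j], xp[:, :, i:i + a, j:j + b])
    return y

def attention_branch(x_perm, w):
    """One branch on a permuted tensor (T, D, A, B): pool over D, convolve, sigmoid, re-weight."""
    pooled = np.concatenate([x_perm.mean(axis=1, keepdims=True),
                             x_perm.max(axis=1, keepdims=True)], axis=1)  # Eq. (7) -> (T, 2, A, B)
    attn = sigmoid(conv2d_same(pooled, w))   # (T, 1, A, B); BN omitted, as in Eq. (8)
    return x_perm * attn

def tsci(x, w_c, w_h, w_w):
    """x: (C, T, H, W); each w_*: (1, 2, k, k). Returns a tensor with the shape of x."""
    x_c = x.transpose(1, 0, 2, 3)   # (T, C, H, W): pool C -> H-W interaction
    x_h = x.transpose(1, 2, 0, 3)   # (T, H, C, W): pool H -> C-W interaction
    x_w = x.transpose(1, 3, 0, 2)   # (T, W, C, H): pool W -> C-H interaction
    y_c = attention_branch(x_c, w_c).transpose(1, 0, 2, 3)  # inverse permutations
    y_h = attention_branch(x_h, w_h).transpose(2, 0, 1, 3)
    y_w = attention_branch(x_w, w_w).transpose(2, 0, 3, 1)
    return (y_c + y_h + y_w) / 3.0

rng = np.random.default_rng(3)
x = rng.standard_normal((8, 4, 6, 5))   # (C, T, H, W)
ws = [rng.standard_normal((1, 2, 7, 7)) * 0.1 for _ in range(3)]
y = tsci(x, *ws)
print(y.shape)  # (8, 4, 6, 5)
```

Each attention map has a single channel, so the only learnable parameters per branch are the 2 × k × k convolution weights, which is why the module adds negligible overhead.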

3 Experiment

3.1 Datasets

To comprehensively evaluate the performance of various methods on basketball player action recognition, we adopted two popular basketball action recognition datasets, which are described in detail below.

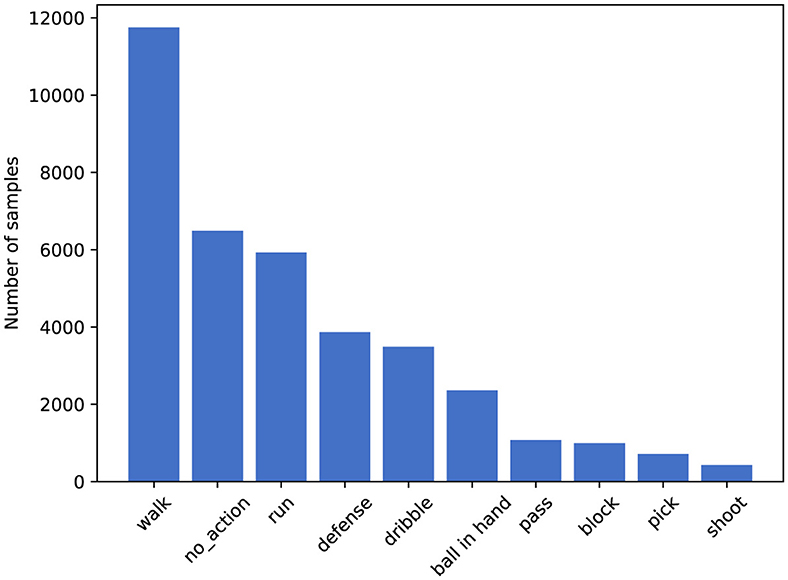

• SpaceJam (Francia et al., 2018). The SpaceJam dataset is currently the most popular and accessible dataset for recognizing basketball players' movements. It consists of two parts: video clips in MP4 format, and the joint coordinate data of the athletes corresponding to each video. In this paper, we use only the video data from the first part. The videos originate from 15 segments of NBA and Italian championship basketball matches, each lasting 1.5 h. The dataset contains approximately 37,085 video clips in total; each clip comprises 16 frames, and each frame has three (RGB) channels and a resolution of 176 × 128 pixels. The dataset covers 10 action categories: walk, no action, run, defense, dribble, ball in hand, pass, block, pick, and shoot. The distribution of data across categories is illustrated in Figure 4.

• Basketball-51 (Shakya et al., 2021). The videos in the Basketball-51 dataset originate from third-person perspective shots taken during media broadcasts of 51 NBA basketball games. The dataset comprises a total of 10,311 video clips, each standardized to 25 FPS, and each frame includes three channels (RGB) with a resolution of 320 × 240. The dataset contains 8 categories, which are: two-point miss (2p0), two-point make (2p1), three-point miss (3p0), three-point make (3p1), free throw miss (ft0), free throw make (ft1), mid-range shot miss (mp0), and mid-range shot make (mp1). The distribution of data across these categories is shown in Figure 5.

Figure 4. Number of samples per class on SpaceJam dataset.

Figure 5. Number of samples per class on Basketball-51 dataset.

3.2 Evaluation metrics

To quantitatively benchmark our method against other methods, we use five standard metrics: accuracy, precision, recall, F1-Score, and AUC (area under the ROC curve). The formula and variables for each metric are given below.

1. The formula of accuracy is shown in Equation 9:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{9}$$

where TP is the number of true positives, TN is the number of true negatives, FP is the number of false positives, and FN is the number of false negatives.

2. The formula of precision is shown in Equation 10:

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{10}$$

where TP is the number of true positives and FP is the number of false positives.

3. The formula of recall is shown in Equation 11:

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{11}$$

where TP is the number of true positives and FN is the number of false negatives.

4. The formula of F1-Score is shown in Equation 12:

$$\text{F1-Score} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{12}$$

where Precision and Recall are defined in Equations 10 and 11.

5. The formula of Area Under Curve (AUC) is shown in Equation 13:

$$\mathrm{AUC} = \int_{0}^{1} \mathrm{ROC}(x)\,dx \tag{13}$$

where $\mathrm{ROC}(x)$ is the true positive rate of the ROC curve at false positive rate $x$; the curve is traced by sweeping the classification threshold.
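For reference, the following pure-Python sketch computes Equations 9 to 12 from confusion-matrix counts and approximates Equation 13 with the trapezoidal rule over the ROC points obtained by sweeping the threshold. It is illustrative only: how the metrics are averaged over the 10 SpaceJam or 8 Basketball-51 classes (e.g., one-vs-rest with macro averaging) is not specified here, so the sketch handles a single binary or one-vs-rest class, and the AUC helper assumes distinct scores.

```python
def classification_metrics(tp, tn, fp, fn):
    """Equations 9-12 from the confusion-matrix counts of one (binary or one-vs-rest) class."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

def roc_auc(labels, scores):
    """Equation 13 via the trapezoidal rule.
    labels: 0/1 ground truth; scores: higher means more likely positive (assumed distinct)."""
    pos = sum(labels)
    neg = len(labels) - pos
    tp = fp = 0
    tpr_prev = fpr_prev = 0.0
    auc = 0.0
    # Lowering the threshold past each score adds one sample to the predicted positives.
    for _, y in sorted(zip(scores, labels), reverse=True):
        if y:
            tp += 1
        else:
            fp += 1
        tpr, fpr = tp / pos, fp / neg
        auc += (fpr - fpr_prev) * (tpr + tpr_prev) / 2.0   # trapezoid between ROC points
        tpr_prev, fpr_prev = tpr, fpr
    return auc

print(classification_metrics(40, 45, 5, 10))                  # accuracy 0.85, recall 0.8
print(roc_auc([1, 1, 0, 1, 0], [0.9, 0.8, 0.7, 0.6, 0.2]))     # 5/6
```

In the AUC example, 5 of the 6 positive-negative pairs are ranked correctly, which matches the pairwise interpretation of AUC as the probability that a random positive outscores a random negative.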

3.3 Experimental setup

For the SpaceJam dataset, we directly use the original number of frames and frame size of each video clip, so the input shape is N × 3 × T × H × W with H × W = 176 × 128, where N is the batch size, T is the number of frames per video clip, and H and W are the height and width of each frame. In this work, N is set to 16 and T to 8. For the Basketball-51 dataset, the cropped frames are resized to 120 × 160 for training, and the number of frames per clip T is set to 50. For all datasets, 80% of the data is used for training, 10% for validation, and 10% for testing. For fairness, we use the same dataset split for all methods. We train all models on the training set while monitoring the loss curves on the validation set to avoid overfitting, and finally evaluate the models on the test set with each metric; the results are reported in Section 3.4.
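The 80/10/10 split described above can be made reproducible with a fixed seed so that every method sees the same partition. The sketch below is hypothetical: the text does not say whether the split is random or stratified, nor which seed is used, so `split_indices` shuffles clip indices uniformly with an arbitrary seed and uses integer arithmetic so that the rounding remainder goes to the test set.

```python
import random

def split_indices(n, seed=0):
    """Shuffle clip indices and split them 80/10/10 into train/validation/test.
    Hypothetical helper: a uniform random split with a fixed seed (not stated in the text)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_train = n * 8 // 10           # integer arithmetic avoids floating-point rounding
    n_val = n // 10
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

train, val, test = split_indices(37085)   # SpaceJam clip count reported in Section 3.1
print(len(train), len(val), len(test))    # 29668 3708 3709
```

Reusing the same seed, and hence the same index lists, for every compared model is one simple way to realize the unified splitting strategy.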

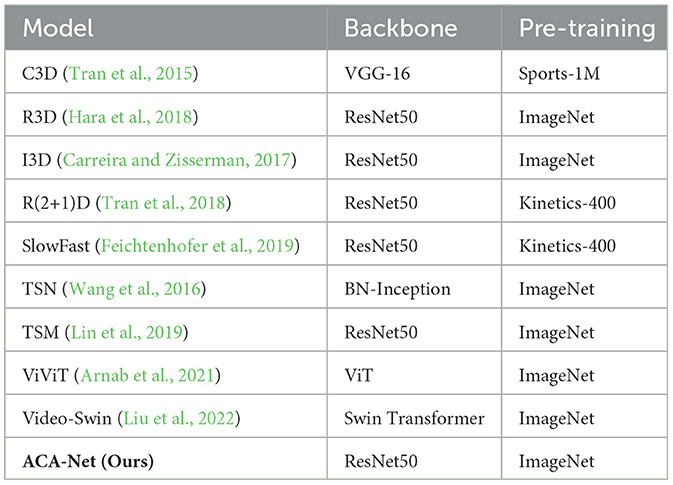

Table 1 lists the details of the state-of-the-art models compared in this work. Each model was implemented with its authors' open-source code, initialized with its pre-trained weights, and then trained on the SpaceJam and Basketball-51 datasets. ACA-Net is initialized with weights pre-trained on ImageNet to speed up convergence. For all experiments, we set the maximum number of epochs to 30 and use early stopping based on validation loss to prevent overfitting. The batch size is 16 and the learning rate is 0.0001. The total number of parameters in the model is approximately 26.8 million. We train with the Adam optimizer (Kingma and Ba, 2014) on 8 NVIDIA GeForce RTX 3090 GPUs.
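The training schedule above can be summarized as a small configuration plus an early-stopping rule. In the sketch below, the epoch limit, batch size, and learning rate come from the text, while the `patience` value and the helper names are hypothetical, since the text does not state how many non-improving epochs trigger early stopping; the Adam optimizer itself would be configured in the training framework and is not shown.

```python
from dataclasses import dataclass

@dataclass
class TrainConfig:
    # Values stated in Section 3.3; `patience` is a hypothetical choice not given in the text.
    max_epochs: int = 30
    batch_size: int = 16
    learning_rate: float = 1e-4
    patience: int = 5

def early_stopping_epoch(val_losses, cfg):
    """Return (stop_epoch, best_epoch), both 1-based, for per-epoch validation losses.
    Training stops once the loss has not improved for `cfg.patience` consecutive epochs,
    or when `cfg.max_epochs` is reached; the checkpoint from `best_epoch` would be kept."""
    best, best_epoch, waited = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses[:cfg.max_epochs], start=1):
        if loss < best:
            best, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= cfg.patience:
                return epoch, best_epoch
    return min(len(val_losses), cfg.max_epochs), best_epoch

cfg = TrainConfig()
print(early_stopping_epoch([1.0, 0.8, 0.7, 0.72, 0.71, 0.75, 0.74, 0.73, 0.9], cfg))  # (8, 3)
```

Stopping on validation loss rather than a fixed epoch count lets each compared model train for as long as it keeps generalizing, within the shared 30-epoch budget.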

Table 1. The details of the state-of-the-art models.

3.4 Experimental results and analysis

3.4.1 Classification results

In this section, we compare ACA-Net with current mainstream action recognition methods on the SpaceJam and Basketball-51 datasets to validate the effectiveness of the proposed method. Table 2 presents the experimental results on the SpaceJam dataset, comparing various state-of-the-art methods on key performance metrics. We evaluate accuracy, precision, recall, F1-Score, and AUC to comprehensively assess the effectiveness of each method.
