Object detection remains a cornerstone task in robotic vision: the goal is to identify and localize the objects in an image. Many approaches have emerged over the years, including the influential R-CNN family and its variants (Ren et al., 2015; Zhang et al., 2020). FoveaBox (Kong et al., 2020), for example, is an anchor-free detector that uses a multi-level feature pyramid to produce high-quality results across scales, while Soft-NMS (Bodla et al., 2017) modifies non-maximum suppression so that overlapping detections have their scores decayed instead of being discarded outright, which improves accuracy in densely populated scenes. Traditional methods generally rely on one-to-many label assignment, in which multiple predictions, derived from proposals, anchors, or window centers, are mapped to each ground-truth box. Despite their success, these methods depend heavily on complex, hand-designed components such as non-maximum suppression (NMS) and anchor generation, which introduce inefficiencies and limit adaptability.
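The contrast between hard NMS and Soft-NMS can be sketched in a few lines. The following pure-Python example implements the Gaussian variant of Soft-NMS (Bodla et al., 2017), in which each remaining box's score is multiplied by exp(-IoU²/σ) with respect to the box just kept, instead of the box being removed when its IoU exceeds a threshold. The (x1, y1, x2, y2) box format, the function names, and the defaults σ = 0.5 and a 10⁻³ score floor are illustrative assumptions, not values taken from this paper.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed corner format


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms_gaussian(boxes: List[Box], scores: List[float], sigma: float = 0.5,
                      score_thresh: float = 1e-3) -> List[Tuple[int, float]]:
    """Gaussian Soft-NMS: decay, rather than delete, boxes overlapping a kept box.

    Returns (index, rescored confidence) pairs in the order the boxes were kept.
    """
    scores = list(scores)                 # work on a copy of the input scores
    remaining = list(range(len(boxes)))
    keep: List[Tuple[int, float]] = []
    while remaining:
        top = max(remaining, key=lambda i: scores[i])
        remaining.remove(top)
        keep.append((top, scores[top]))
        for i in remaining:
            # Hard NMS would drop box i above an IoU threshold; Soft-NMS rescales it.
            scores[i] *= math.exp(-iou(boxes[top], boxes[i]) ** 2 / sigma)
        remaining = [i for i in remaining if scores[i] >= score_thresh]
    return keep
```

With two heavily overlapping boxes (IoU 0.9, scores 0.9 and 0.8) and one distant box (score 0.7), the distant box keeps its score while the overlapping one is decayed to roughly 0.16 rather than discarded.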

The advent of the Detection Transformer (DETR) (Carion et al., 2020) marked a significant shift in object detection by recasting the task as a set prediction problem, dispensing with traditional components such as NMS and anchors. By using a Transformer encoder-decoder architecture (Vaswani et al., 2017) and a one-to-one matching strategy based on the Hungarian algorithm (Kuhn, 1955), DETR enables direct end-to-end optimization and simplifies the detection pipeline. Despite these innovations, DETR's adoption has been limited by slow convergence and by its one-to-one matching strategy, which provides only sparse supervision signals during training.

To mitigate the issues inherent in the original DETR framework, various enhancements have been developed over time. For instance, REGO (Chen Z. et al., 2022) enhances small object detection through optimized feature representation for specific regions. Salience-DETR (Hou et al., 2024) increases accuracy by emphasizing salient objects in images. Additionally, SMCA (Gao et al., 2021) employs a spatially modulated cross-attention mechanism to refine localization, and Sparse-DETR (Roh et al., 2022) introduces a sparse sampling strategy to reduce computational load, making it more suitable for real-time applications. Methods like UP-DETR (Dai et al., 2022) leverage unsupervised pre-training to improve performance in data-scarce environments, and WB-DETR (Liu F. et al., 2021) simplifies detection by removing the CNN backbone, relying instead on a pure Transformer-based architecture. Dynamic DETR (Dai et al., 2021) enhances flexibility through dynamic attention mechanisms, and Efficient DETR (Yao et al., 2021) reduces model complexity by optimizing resource usage. Together, these methods contribute to refining the DETR architecture by enhancing training efficiency, detection accuracy, and adaptability across diverse object detection tasks.

Recent advances show that strengthening feature learning is an effective way to improve detection accuracy. Co-DETR (Zong et al., 2023) decouples object query assignments and uses auxiliary queries to capture broader features, while Group DETR (Chen et al., 2023) and NMS DETR (Ouyang-Zhang et al., 2022) employ one-to-many label assignments, with the latter integrating non-maximum suppression to refine the outputs. DN-DETR (Li et al., 2022) introduces denoising to stabilize training, a concept that DINO (Zhang et al., 2023) further improves with contrastive denoising. In addition, the DIoU and CIoU losses (Zheng et al., 2020), which have been adopted in real-time detection, improve bounding box accuracy by addressing limitations of the traditional IoU loss, speeding up convergence and increasing regression precision.

Previous methods have struggled to achieve effective one-to-many matching. NAN-DETR (Noising multi-ANchor DEtection TRansformer) addresses this challenge through a series of novel improvements. Its architecture consists of a backbone network, a multi-layer Transformer encoder, several multi-layer Transformer decoders, and multiple prediction heads. A key innovation is the decoder-based multi-anchor strategy, in which multiple independent decoders refine the initial anchors generated by the encoder, thereby improving detection accuracy. In addition, a centralization noising mechanism in the decoders reduces conflicts between anchor boxes, further improving robustness. The matching process follows DETR but uses the Complete Intersection over Union (CIoU) loss to measure the similarity between anchor boxes more precisely and to optimize the detection results. Together, these techniques improve object detection accuracy, particularly Average Precision (AP) on medium and large objects, and distinguish NAN-DETR from other DETR variants.

Our contributions can be summarized as follows:

• We present a new end-to-end DETR-type model with a centralization noising multi-anchor strategy, achieving high-accuracy object detection.

• We propose a decoder-based multi-anchor strategy to enhance object detection accuracy and a centralization noising mechanism to reduce conflicts between different anchors. In addition, we employ the complete intersection over union (CIoU) loss to measure the similarity between anchors more precisely.

• We validate the effectiveness of NAN-DETR through comprehensive experiments on the COCO dataset, where our model with a ResNet-50 backbone achieves an average precision (AP) of 50.1%, outperforming the state-of-the-art DETR variants we compare against.

The structure of this paper is as follows. Section 2 reviews the existing literature on object detection, focusing on progress in set matching, anchor-based techniques, label assignment strategies, and IoU-based measures. Section 3 covers the fundamentals of the DETR framework and details the key innovations of NAN-DETR, such as the decoder-based multi-anchor strategy and the centralization noising mechanism. Section 4 presents the experimental results, comparing NAN-DETR with other DETR variants on the COCO dataset. Finally, Section 5 concludes the paper by summarizing the improvements NAN-DETR brings to detection accuracy and suggesting directions for future research.

2 Related works

This section reviews key developments in transformer-based object detection, particularly focusing on the DETR framework and various improvement strategies.

2.1 One-to-one set matching

DETR (Carion et al., 2020) introduced a significant shift in object detection by framing the task as a set prediction problem, utilizing a transformer architecture. This method employs a one-to-one matching strategy based on the Hungarian algorithm, enabling direct end-to-end training without the need for conventional components like non-maximum suppression (NMS). However, this one-to-one matching approach often results in sparse supervision signals and slower convergence rates during training, which can limit its effectiveness. To address these challenges, DN-DETR (Li et al., 2022) incorporated a denoising technique during the training process, which helps stabilize the matching process and speeds up convergence. By introducing noise into the training queries, DN-DETR mitigates the issues caused by sparse positive samples. Furthermore, Conditional DETR (Meng et al., 2021; Chen X. et al., 2022) enhances this approach by refining the query mechanism, leading to improved efficiency in model training and faster convergence, ultimately boosting detection accuracy.
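DETR's one-to-one assignment is a minimum-cost bipartite matching between predictions and ground-truth boxes. The sketch below finds the same optimum the Hungarian algorithm would, but by exhaustive search over all injective assignments, so it is only practical for a handful of boxes; DETR-style code typically calls a polynomial-time solver such as SciPy's `scipy.optimize.linear_sum_assignment` instead. The function name and the cost values in the example are illustrative.

```python
from itertools import permutations
from typing import List, Tuple


def one_to_one_match(cost: List[List[float]]) -> List[Tuple[int, int]]:
    """Minimum-cost one-to-one assignment by exhaustive search.

    cost[p][g] is the matching cost between prediction p and ground truth g.
    Returns (prediction, ground_truth) pairs sorted by prediction index;
    predictions left out are treated as 'no object'.
    """
    n_pred, n_gt = len(cost), len(cost[0])
    if n_gt > n_pred:
        raise ValueError("need at least as many predictions as ground-truth boxes")
    best_total, best_perm = float("inf"), None
    # perm[g] is the prediction assigned to ground truth g; entries are distinct.
    for perm in permutations(range(n_pred), n_gt):
        total = sum(cost[p][g] for g, p in enumerate(perm))
        if total < best_total:
            best_total, best_perm = total, perm
    return sorted((p, g) for g, p in enumerate(best_perm))
```

For the cost matrix `[[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]`, the call returns `[(0, 1), (1, 0)]` with total cost 0.3; prediction 2 stays unmatched and would be supervised as "no object". Because each ground truth receives exactly one prediction, most queries get no positive signal, which is the sparse supervision discussed above.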

2.2 Anchor matching

Although DETR initially removed the reliance on anchors, subsequent studies have shown that reintroducing anchors can significantly improve performance. Anchor DETR (Wang et al., 2022) and DAB-DETR (Liu S. et al., 2022) reintroduce anchor points and anchor boxes, respectively, into the DETR framework, which makes the queries more interpretable and accelerates convergence by narrowing the search space. By anchoring queries closer to likely object locations, these methods reduce computational complexity and improve overall model efficiency. DINO (Zhang et al., 2023) further refines this anchor-based strategy by integrating advanced denoising methods and contrastive learning, improving detection accuracy particularly in complex scenarios. These developments highlight the effectiveness of merging traditional object detection techniques with transformer-based models.

2.3 One-to-many label assignment

Traditional object detection methods, including those in the R-CNN family (Ren et al., 2015; Zhang et al., 2020), typically utilize a one-to-many label assignment strategy, where multiple predictions correspond to each ground truth box. This concept has been effectively adapted into transformer-based models. For example, Group-DETR (Chen et al., 2023) employs a group-wise one-to-many assignment, allowing multiple queries to align with each ground truth box. This strategy strengthens the model's feature learning and attention mechanisms, resulting in improved detection performance. Co-DETR (Zong et al., 2023) further expands on this by incorporating flexible assignments through auxiliary heads like ATSS and Faster R-CNN, which enhance supervision and significantly boost accuracy, especially in densely populated scenes.
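The basic one-to-many idea can be illustrated with a simple IoU-based rule: every ground-truth box receives its k best-overlapping predictions as positives. This is a generic sketch for intuition only, not the assignment used by Group-DETR or Co-DETR; the function names, k, and the 0.5 IoU floor are illustrative assumptions.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def topk_one_to_many(preds: List[Box], gts: List[Box], k: int = 2,
                     min_iou: float = 0.5) -> Dict[int, List[int]]:
    """Assign every ground-truth box its k highest-IoU predictions.

    Each prediction is first attached to the ground truth it overlaps most, so no
    prediction supervises two objects; predictions below min_iou stay background.
    Assumes gts is non-empty.
    """
    candidates: Dict[int, List[Tuple[float, int]]] = {g: [] for g in range(len(gts))}
    for p, box in enumerate(preds):
        overlaps = [iou(box, gt) for gt in gts]
        g = max(range(len(gts)), key=lambda j: overlaps[j])
        if overlaps[g] >= min_iou:
            candidates[g].append((overlaps[g], p))
    # Keep the k best-overlapping predictions per ground truth.
    return {g: [p for _, p in sorted(c, reverse=True)[:k]] for g, c in candidates.items()}
```

With k = 1 this degenerates to a one-prediction-per-object rule; raising k gives each object several positive predictions, which is the denser supervision that one-to-many schemes aim for.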

2.4 IoU

Intersection over Union (IoU) is a critical metric in computer vision, extensively used to assess the accuracy of object detection and segmentation models by measuring the overlap between predicted and ground truth bounding boxes. Although IoU became widely recognized through its application in the R-CNN framework (Girshick et al., 2014), it has shortcomings, particularly for non-overlapping boxes, where it is zero regardless of how far apart the boxes are. To overcome these limitations, Generalized IoU (GIoU) (Rezatofighi et al., 2019) introduced the smallest enclosing box to provide a more holistic measure. Distance-IoU (DIoU) and Complete-IoU (CIoU) (Zheng et al., 2020) improve on this further: DIoU adds a penalty on the distance between box centers, and CIoU additionally accounts for aspect-ratio consistency, improving both localization accuracy and convergence speed.
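The differences between these overlap measures are easiest to see numerically. The sketch below computes IoU, GIoU, and DIoU for two boxes in (x1, y1, x2, y2) format, following the definitions of Rezatofighi et al. (2019) and Zheng et al. (2020); the function name and box convention are our choices.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou_variants(a: Box, b: Box) -> Tuple[float, float, float]:
    """Return (IoU, GIoU, DIoU) for two axis-aligned boxes with positive area."""
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = area_a + area_b - inter
    iou = inter / union
    # Smallest box enclosing both a and b.
    cw = max(a[2], b[2]) - min(a[0], b[0])
    ch = max(a[3], b[3]) - min(a[1], b[1])
    giou = iou - (cw * ch - union) / (cw * ch)
    # DIoU: squared center distance over squared diagonal of the enclosing box.
    rho2 = ((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2) ** 2 \
        + ((a[1] + a[3]) / 2 - (b[1] + b[3]) / 2) ** 2
    diou = iou - rho2 / (cw ** 2 + ch ** 2)
    return iou, giou, diou
```

For two disjoint unit squares two units apart, `iou_variants((0, 0, 1, 1), (2, 0, 3, 1))` gives IoU 0 regardless of the gap, while GIoU (-1/3) and DIoU (-0.4) still reflect how far apart the boxes are, which is what provides a useful training signal for non-overlapping boxes.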

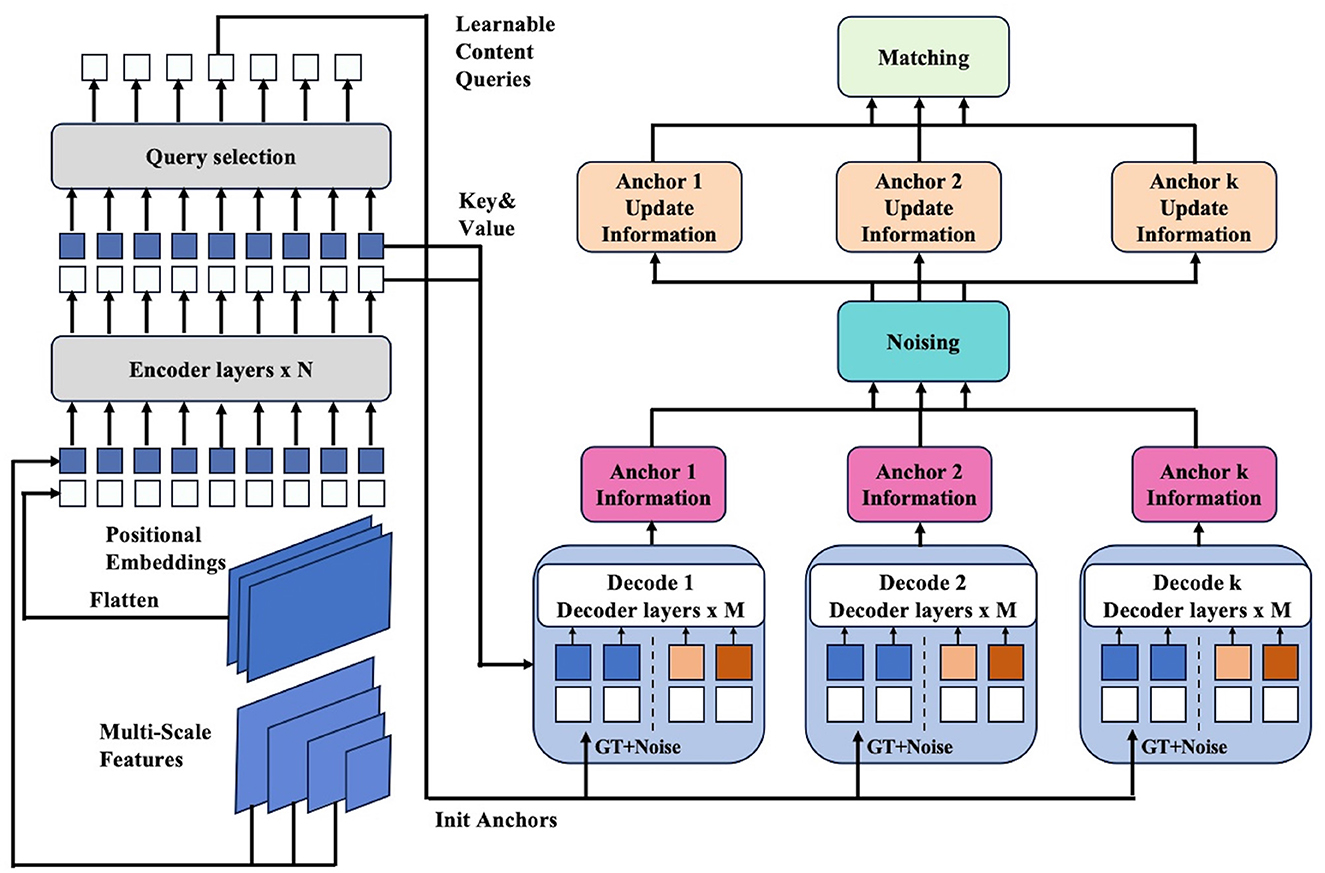

3 Methodology

3.1 Model overview

NAN-DETR extends the DETR (Carion et al., 2020) framework with several innovations aimed at boosting detection accuracy. As shown in Figure 1, the architecture includes a backbone network, a Transformer encoder, multiple Transformer decoders, and prediction heads that output the final detection results. The image is first fed into a backbone such as ResNet (He et al., 2016) or Swin Transformer (Liu Z. et al., 2021, 2022), which extracts image features. These features, combined with positional embeddings that capture spatial relationships, are then processed by the Transformer encoder, which divides the image into multiple regions (queries); Section 3.2 details this feature extraction process. Each query is used to generate an initial anchor box through a neural network layer, and these anchor boxes are then refined locally by k independent decoders to better detect the object. We call this the decoder-based multi-anchor strategy and detail it in Section 3.3. To reduce conflicts between the resulting anchor boxes, they are perturbed after being computed, a step we call the centralization noising mechanism (Section 3.4). Finally, matching follows DETR, but with the CIoU loss (Zheng et al., 2020) introduced to measure the similarity between anchors more precisely and optimize the detection results, as described in Section 3.5.

Figure 1. Framework of the proposed NAN-DETR. The enhancements primarily focus on the Transformer decoder. We use k decoders to obtain more anchors and reduce the conflicts between the multiple anchors with the centralization noising mechanism. Finally, we use the CIoU loss to compute the box loss during matching.

3.2 Image feature extraction

Given an image, we extract visual features with a backbone network. To capture information at different scales, we extract multi-scale visual features. Because the positional relationships between different regions of the image are highly important, we also introduce a position embedding so that the model can capture the position of each region. The image feature extraction process is as follows:

where $v$ represents the input of the Transformer encoder, $B$ indicates the backbone, such as the ResNet-50 (He et al., 2016) or Swin Transformer (Liu Z. et al., 2021, 2022) architecture, and $v_{pos}$ denotes the sinusoidal position embedding. ResNet-50 uses convolution and residual connections, excelling at extracting local features with high computational efficiency, while Swin Transformer is based on self-attention, capturing both global and local information, which makes it suitable for complex vision tasks but at a higher computational cost.
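The sinusoidal position embedding $v_{pos}$ can be sketched as follows, assuming NAN-DETR follows the usual DETR convention: each normalized coordinate is scaled by 2π, channel pairs (2i, 2i+1) share the frequency temperature^(2i/d) and hold its sine and cosine, and the y and x embeddings are concatenated. The function names and the 128-channels-per-axis split (giving 256 channels, matching the hidden dimension in Section 4.1.2) are our assumptions. DETR's default temperature is 10000; Section 4.1.2 reports a positional encoding temperature of 20, which can be passed through the `temperature` argument.

```python
import math
from typing import List


def sine_embedding(pos: float, num_feats: int = 128, temperature: float = 10000.0,
                   scale: float = 2 * math.pi) -> List[float]:
    """Sinusoidal embedding of one normalized coordinate pos in [0, 1].

    Channels 2i and 2i+1 share the frequency temperature ** (2i / num_feats) and
    hold sin / cos of the scaled position. num_feats is assumed to be even.
    """
    x = pos * scale
    emb: List[float] = []
    for i in range(0, num_feats, 2):
        freq = temperature ** (i / num_feats)
        emb.append(math.sin(x / freq))
        emb.append(math.cos(x / freq))
    return emb


def sine_embedding_2d(cx: float, cy: float, num_feats: int = 128,
                      temperature: float = 10000.0) -> List[float]:
    """Concatenate the y and x embeddings: 2 * num_feats channels per location."""
    return sine_embedding(cy, num_feats, temperature) + sine_embedding(cx, num_feats, temperature)
```

In a full model this vector would be computed for every feature-map location and added to the backbone features before they enter the encoder.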

Next, the position-embedded image features are fed into the Transformer encoder, whose attention layers let the features interact to produce the encoded image features. A fully connected layer then produces multiple query anchor boxes for the objects in the image, which serve as input to the Transformer decoders.
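The fully connected layer that turns an encoder query into an initial anchor can be pictured as a linear map to four numbers followed by a sigmoid, so that (cx, cy, w, h) are normalized to (0, 1). This is a minimal sketch under that assumption; the function names are hypothetical, the weights are random placeholders, and the actual layer in NAN-DETR may be a deeper MLP.

```python
import math
import random
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def anchor_head(query: Sequence[float], weight: Sequence[Sequence[float]],
                bias: Sequence[float]) -> List[float]:
    """Hypothetical anchor head: one linear layer (4 x d) + sigmoid -> (cx, cy, w, h) in (0, 1)."""
    return [sigmoid(sum(w * q for w, q in zip(row, query)) + b)
            for row, b in zip(weight, bias)]


if __name__ == "__main__":
    d = 256                                    # hidden dimension reported in Section 4.1.2
    rng = random.Random(0)
    query = [rng.gauss(0.0, 1.0) for _ in range(d)]                          # stand-in encoder output
    weight = [[rng.gauss(0.0, 0.02) for _ in range(d)] for _ in range(4)]   # random placeholder weights
    print(anchor_head(query, weight, [0.0] * 4))
```

The sigmoid keeps every anchor inside the image in normalized coordinates, which is why the later refinement steps can work in inverse-sigmoid (logit) space.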

3.3 Decoder-based multi-anchor strategy

The decoder-based multi-anchor strategy is designed to alleviate the limitations of the original DETR framework. In the DETR architecture, the encoder functions like a standard Transformer encoder, producing abstract representations that effectively divide the image into several regions, referred to as queries. To improve object detection within these query regions, we introduce a neural network layer that generates four-dimensional vectors corresponding to anchor boxes (Wang et al., 2022; Zhang et al., 2023). These vectors serve as initial anchor boxes, providing preliminary spatial information about potential object locations.

However, a single anchor box often fails to adequately represent larger objects, which causes convergence problems and makes the model harder to train. Prior works such as Group DETR (Chen et al., 2023) and Co-DETR (Zong et al., 2023) have also introduced one-to-many matching to address this, but our implementation takes a different approach that avoids their drawbacks. Group DETR uses arbitrary grouping, which does not fully exploit the information from the encoder, whereas Co-DETR relies heavily on auxiliary heads, which introduces redundancy.

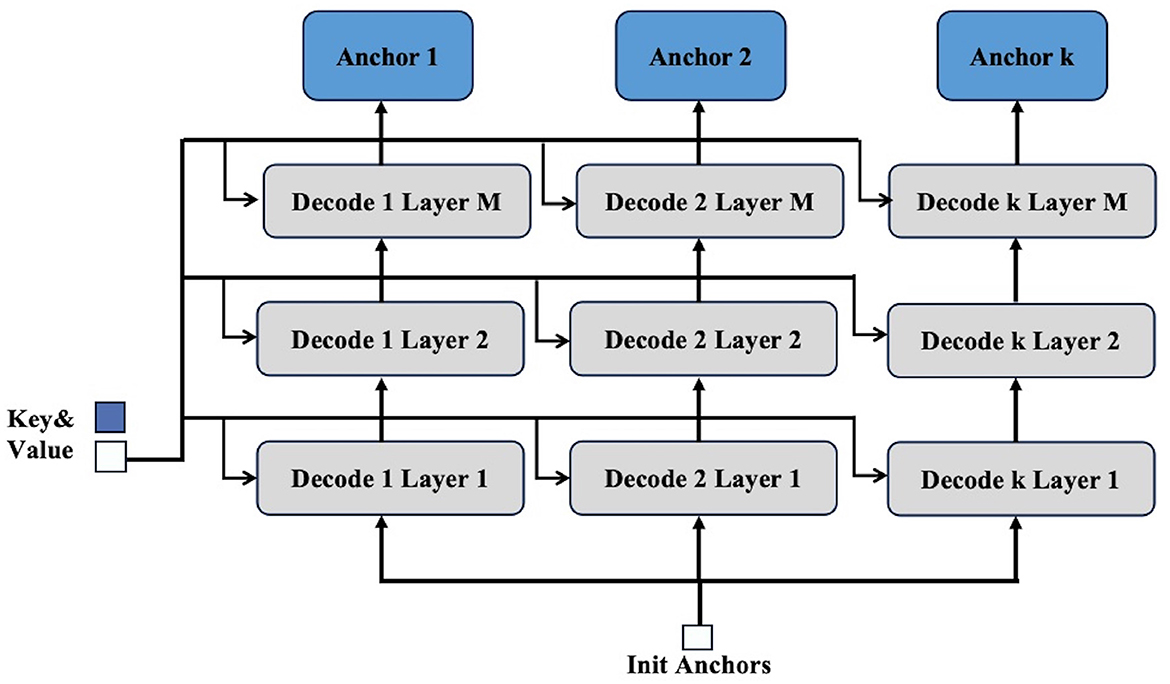

To address these issues, our strategy uses multiple decoders, denoted as k decoders, that process each query independently, as shown in Figure 2. Each decoder refines the initial anchor box, yielding multiple predicted anchor positions per query. This one-to-many assignment increases the likelihood of accurately capturing objects of varying scales within the image. Unlike Group DETR and Co-DETR, every anchor in our strategy is refined from the encoder-derived initial anchor rather than from arbitrary query groups or auxiliary heads, which makes better use of the encoder's output.

Figure 2. Details of the decoder-based multi-anchor strategy. We first create k decoders. The initial anchor produced by the encoder is then sent to each decoder, while the key and value outputs of the encoder are provided to every layer of each decoder. This allows a single query to obtain k anchors, thereby achieving a one-to-many effect.
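The data flow in Figure 2, one initial anchor in and k anchors out, can be illustrated with a toy model in which each of the k decoders is reduced to a function that predicts four offsets for the anchor. The refinement rule (add offsets in inverse-sigmoid space, then map back with a sigmoid) is the convention of Deformable DETR's iterative bounding box refinement; that NAN-DETR uses exactly this rule is an assumption, and real decoders would also attend to the encoder's keys and values, which this sketch omits. The decoder functions in the example are hypothetical.

```python
import math
from typing import Callable, List, Sequence

Anchor = List[float]  # (cx, cy, w, h), each normalized to (0, 1)
Decoder = Callable[[Sequence[float]], Sequence[float]]  # toy stand-in: anchor -> 4 offsets


def inverse_sigmoid(p: float, eps: float = 1e-5) -> float:
    """Logit of p, clamped away from 0 and 1 for numerical safety."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def refine(anchor: Sequence[float], delta: Sequence[float]) -> Anchor:
    """Add offsets in logit space, then map back to (0, 1)."""
    return [1.0 / (1.0 + math.exp(-(inverse_sigmoid(a) + d))) for a, d in zip(anchor, delta)]


def multi_anchor(initial: Sequence[float], decoders: List[Decoder]) -> List[Anchor]:
    """Send the same initial anchor to k independent decoders: one query -> k anchors."""
    return [refine(initial, dec(initial)) for dec in decoders]


if __name__ == "__main__":
    # Three hypothetical decoders that shift the center horizontally by different amounts.
    decoders = [lambda a, off=off: [off, 0.0, 0.1, 0.1] for off in (-0.2, 0.0, 0.2)]
    for anchor in multi_anchor([0.5, 0.5, 0.2, 0.3], decoders):
        print([round(v, 3) for v in anchor])
```

Because every decoder starts from the same encoder-derived anchor, the k outputs are variations around one location rather than unrelated guesses, which is the property the centralization noising mechanism then builds on.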

3.4 Centralization noising mechanism

Once multiple anchor boxes have been generated for each query, a significant challenge arises from potential conflicts between them, similar to the conflicts between the outputs of auxiliary heads noted in the Co-DETR study (Zong et al., 2023). For instance, consider an object such as a square that is initially represented by four anchor boxes centered within it. If these anchors are perturbed randomly, they may drift toward the four corners of the square, losing sight of the object as a whole, and some may leave the square's boundaries entirely. This illustrates that different anchors can capture disparate and sometimes conflicting information about the same object, particularly for large objects. If these conflicts are not managed effectively, the combined information from the anchors can become inconsistent or misleading, producing incomplete or erroneous object representations and undermining overall detection accuracy.

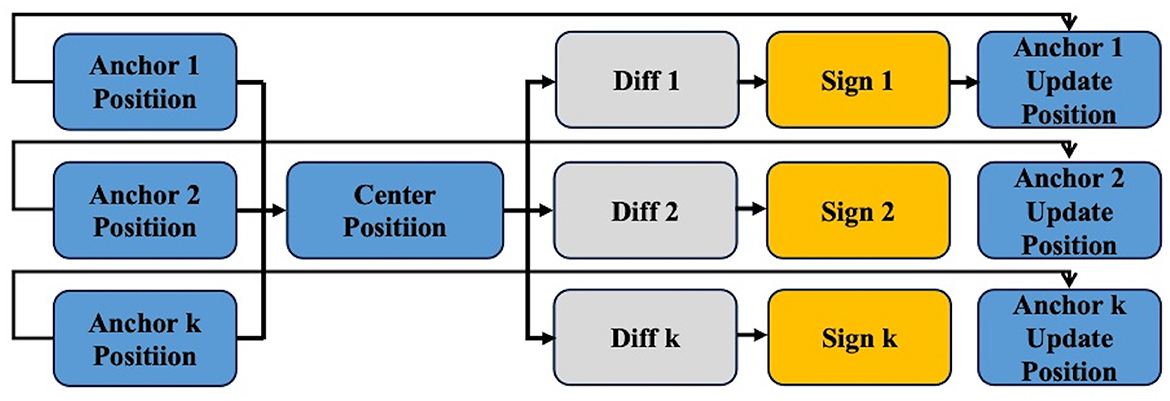

To avoid this problem, we perturb the k anchor boxes toward their common center. After computing the centroid of the centers of the k anchor boxes, we apply random noise that moves each box a certain distance toward this centroid. This step reduces conflicts and merges the detection information from the multiple boxes, so that the resulting k anchor boxes influence one another while still carrying random variation. These k × (number of queries) anchor boxes are then used for matching, as shown in Figure 3, following the same matching process as in DETR.

Figure 3. Details of centralization noising mechanism. The main process of the centralization noising mechanism first involves finding the centroid of all obtained anchors. Then, the difference (diff) for each anchor is calculated to determine the direction and magnitude of movement (noise). Finally, the updated anchors are derived by combining the sign with the original anchors.

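Specifically, the centralization noising process is defined as follows. Consider a set of k detection boxes, where box i has center $\left(\frac{x_i+u_i}{2}, \frac{y_i+d_i}{2}\right)$ and the centroid of all k centers is $\left(\frac{1}{k}\sum_{j=1}^{k}\frac{x_j+u_j}{2}, \frac{1}{k}\sum_{j=1}^{k}\frac{y_j+d_j}{2}\right)$. There exists a line $Ax + By + C = 0$ on which both points lie, and we denote A, B, and C as

$$A = \frac{1}{k}\sum_{j=1}^{k}\frac{y_j+d_j}{2} - \frac{y_i+d_i}{2} \tag{2}$$

$$B = \frac{1}{k}\sum_{j=1}^{k}\frac{x_j+u_j}{2} - \frac{x_i+u_i}{2} \tag{3}$$

$$C = \frac{(y_i+d_i)\sum_{j=1}^{k}(x_j+u_j)}{4k} - \frac{(x_i+u_i)\sum_{j=1}^{k}(y_j+d_j)}{4k} \tag{4}$$

Moreover, the random noise is drawn as $\Delta \sim \mathcal{N}(0, \sigma(w+h))$, where w and h denote the width and height of the box and σ scales the standard deviation, so the spread of the noise grows with box size. The actual center of the detection box then becomes

$$\mathrm{Center}_i = \left(\frac{x_i+u_i}{2} + \mathrm{sign}(i)\,B\,\Delta,\; \frac{y_i+d_i}{2} - \mathrm{sign}(i)\,A\,\Delta\right) \tag{5}$$

where

$$\mathrm{sign}(i) = \begin{cases} 1, & \text{if } \left(\frac{1}{k}\sum_{j=1}^{k}\frac{x_j+u_j}{2} - \frac{x_i+u_i}{2}\right) B\,\Delta < 0,\\ -1, & \text{otherwise.} \end{cases}$$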
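The centralization step can be sketched as follows. This is a minimal pure-Python illustration of the behavior described in the text, not a literal transcription of Eq. (5): for each of the k anchors of one query, it draws Δ ~ N(0, σ(w + h)) and moves the box center by |Δ| times the vector from that center to the centroid of all k centers, keeping width and height fixed. The displacement magnitude matches Eq. (5), since A and B are exactly the coordinate differences between the centroid and the box center. Always moving toward the centroid (as the prose of this section states), clipping the step at 1 so that no box overshoots the centroid, and reading (x, y) and (u, d) as the top-left and bottom-right corners are our assumptions; the function name is hypothetical.

```python
import random
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x, y, u, d): assumed top-left / bottom-right corners


def centralize_anchors(boxes: List[Box], sigma: float = 0.05,
                       rng: Optional[random.Random] = None) -> List[Box]:
    """Shift each of the k anchors of one query toward the centroid of their centers."""
    rng = rng or random.Random()
    k = len(boxes)
    centers = [((x + u) / 2, (y + d) / 2) for x, y, u, d in boxes]
    mx = sum(cx for cx, _ in centers) / k   # centroid of the k centers
    my = sum(cy for _, cy in centers) / k
    out: List[Box] = []
    for (x, y, u, d), (cx, cy) in zip(boxes, centers):
        w, h = u - x, d - y
        delta = rng.gauss(0.0, sigma * (w + h))   # noise spread scales with box size
        step = min(abs(delta), 1.0)                # assumption: never overshoot the centroid
        ncx = cx + step * (mx - cx)                # move along the center-to-centroid line
        ncy = cy + step * (my - cy)
        out.append((ncx - w / 2, ncy - h / 2, ncx + w / 2, ncy + h / 2))  # keep w and h
    return out


if __name__ == "__main__":
    # The four-corner example from this section: four anchors at the corners of a unit square.
    corners = [(0.0, 0.0, 0.4, 0.4), (0.6, 0.0, 1.0, 0.4), (0.0, 0.6, 0.4, 1.0), (0.6, 0.6, 1.0, 1.0)]
    for box in centralize_anchors(corners, sigma=0.05, rng=random.Random(0)):
        print([round(v, 3) for v in box])
```

With σ = 0.05 (the value in Section 4.1.2) and the four-corner example, each box moves a few percent of the way toward the square's center, so the anchors are pulled together instead of drifting apart.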

3.5 Complete Intersection over Union (CIoU) loss

Intersection over Union (IoU) is a widely used metric in object detection, primarily measuring the overlap between predicted and ground truth bounding boxes. However, IoU has notable limitations: it provides no useful gradient when boxes do not overlap, and it does not fully account for variations in overlap caused by translation or rotation. To address these shortcomings, we employ the Complete Intersection over Union (CIoU) loss (Zheng et al., 2020), which has shown success in models such as YOLO (Zhao et al., 2024; Redmon et al., 2016). CIoU extends the basic IoU by incorporating the aspect ratio and the distance between the centers of the bounding boxes, providing a more comprehensive measure of similarity. In NAN-DETR, we replace the traditional IoU with CIoU, leveraging its ability to improve the precision of bounding box predictions during training, which is particularly beneficial for high-precision object detection tasks.

Specifically, the CIoU loss is defined as:

$$L_{\mathrm{CIoU}} = 1 - \mathrm{IoU}(b, b^{gt}) + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v \tag{6}$$

where:

• $\mathrm{IoU}(b, b^{gt})$ is the Intersection over Union between the predicted box $b$ and the ground truth box $b^{gt}$,

• $\rho(b, b^{gt})$ is the Euclidean distance between the centers of the two boxes,

• $c$ is the diagonal length of the smallest box enclosing both boxes,

• $\alpha$ is a weight on the aspect-ratio consistency term $v$, which measures how consistent the aspect ratios of the predicted box and the ground truth box are.
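Eq. (6) can be implemented directly. The sketch below uses the definitions from Zheng et al. (2020) for the two terms not spelled out above: v = (4/π²)(arctan(w^gt/h^gt) - arctan(w/h))² and α = v / ((1 - IoU) + v). Boxes are (x1, y1, x2, y2); the function name and the small ε guarding the divisions are our choices.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def ciou_loss(b: Box, bgt: Box, eps: float = 1e-9) -> float:
    """L_CIoU = 1 - IoU + rho^2 / c^2 + alpha * v (Zheng et al., 2020)."""
    w, h = b[2] - b[0], b[3] - b[1]
    wg, hg = bgt[2] - bgt[0], bgt[3] - bgt[1]
    iw = max(0.0, min(b[2], bgt[2]) - max(b[0], bgt[0]))
    ih = max(0.0, min(b[3], bgt[3]) - max(b[1], bgt[1]))
    inter = iw * ih
    iou = inter / (w * h + wg * hg - inter + eps)
    # rho^2: squared distance between box centers (coordinate sums are twice the centers).
    rho2 = ((b[0] + b[2] - bgt[0] - bgt[2]) ** 2 + (b[1] + b[3] - bgt[1] - bgt[3]) ** 2) / 4
    # c^2: squared diagonal of the smallest enclosing box.
    c2 = (max(b[2], bgt[2]) - min(b[0], bgt[0])) ** 2 \
        + (max(b[3], bgt[3]) - min(b[1], bgt[1])) ** 2 + eps
    # v: aspect-ratio consistency; alpha: its positive trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(wg / (hg + eps)) - math.atan(w / (h + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)
    return 1 - iou + rho2 / c2 + alpha * v
```

Two disjoint unit squares two units apart give a loss of 1.4 (IoU 0, center term 4/10, no aspect penalty), identical boxes give approximately 0, and two boxes with the same center but swapped aspect ratios pay a small extra penalty through αv on top of 1 - IoU.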

NAN-DETR utilizes a composite loss function that integrates the Hungarian matching loss for query-object assignment and the CIoU loss for bounding box regression. The overall loss L is given by:

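$$L = \lambda_1 L_{\mathrm{Hungarian}} + \lambda_2 L_{\mathrm{Box}} + \lambda_3 L_{\mathrm{CIoU}} \tag{7}$$

where $L_{\mathrm{Hungarian}}$ is the Hungarian matching loss (Carion et al., 2020; Kuhn, 1955), $L_{\mathrm{Box}}$ denotes the ℓ1 distance between the predicted box and its matched box, and $\lambda_1$, $\lambda_2$, $\lambda_3$ are hyperparameters that balance the three components of the loss function.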
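For a single matched prediction/ground-truth pair, Eq. (7) is a weighted sum. The sketch below assumes the per-pair Hungarian (classification) loss and CIoU loss have already been computed, and uses as default weights the classification, box, and CIoU loss coefficients reported in Section 4.1.2 (1.0, 5.0, 2.0), on the assumption that $\lambda_1$, $\lambda_2$, $\lambda_3$ correspond to those coefficients. Summing the ℓ1 distance over the four box coordinates and the function names are also our assumptions.

```python
from typing import Sequence, Tuple


def l1_box_loss(pred: Sequence[float], gt: Sequence[float]) -> float:
    """L_Box: l1 distance between a predicted box and its matched box (summed over 4 coords)."""
    return sum(abs(p - g) for p, g in zip(pred, gt))


def total_loss(l_hungarian: float, pred: Sequence[float], gt: Sequence[float],
               l_ciou: float, lambdas: Tuple[float, float, float] = (1.0, 5.0, 2.0)) -> float:
    """L = lambda1 * L_Hungarian + lambda2 * L_Box + lambda3 * L_CIoU for one matched pair."""
    lam1, lam2, lam3 = lambdas
    return lam1 * l_hungarian + lam2 * l1_box_loss(pred, gt) + lam3 * l_ciou
```

For example, `total_loss(0.2, (0.1, 0.1, 0.5, 0.5), (0.1, 0.1, 0.5, 0.6), 0.3)` gives 0.2 + 5 × 0.1 + 2 × 0.3 = 1.3, up to floating-point rounding.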

4 Experiments

4.1 Setup

4.1.1 Datasets and evaluation metrics

To assess the performance of NAN-DETR, we conducted evaluations on the COCO dataset (Lin et al., 2015), a comprehensive benchmark widely adopted in object detection research. The dataset covers 80 object categories and contains over 200,000 labeled images; all detection results we report are on the val split. The main evaluation metric is Average Precision (AP), the area under the precision-recall curve, averaged over all categories and over IoU thresholds from 0.50 to 0.95 in steps of 0.05. AP50 and AP75 are the Average Precision at IoU thresholds of 0.5 and 0.75, respectively. In addition, APS, APM, and APL evaluate performance on small (area below 32² pixels), medium (32² to 96² pixels), and large (above 96² pixels) objects, giving insight into how well NAN-DETR handles different detection challenges.
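To make the AP definition concrete, here is a simplified sketch of the 101-point interpolated AP that the COCO evaluation computes for one category at one IoU threshold; COCO AP then averages this value over the 10 IoU thresholds and all 80 categories. It omits details handled by the official pycocotools code, such as crowd annotations, area ranges, and the per-image detection limit. The function name and input format are our assumptions.

```python
from typing import List


def ap_101(tp_flags: List[bool], num_gt: int) -> float:
    """101-point interpolated AP for one category at one IoU threshold.

    tp_flags: detections sorted by descending confidence; True if the detection
    matched a still-unmatched ground-truth box at this IoU threshold.
    num_gt: number of ground-truth boxes (assumed > 0).
    """
    precisions: List[float] = []
    recalls: List[float] = []
    tp = 0
    for rank, is_tp in enumerate(tp_flags, start=1):
        tp += is_tp
        precisions.append(tp / rank)
        recalls.append(tp / num_gt)
    total = 0.0
    for i in range(101):
        r = i / 100
        # Interpolated precision: best precision at any recall >= r (0 if unreachable).
        total += max((p for p, rec in zip(precisions, recalls) if rec >= r), default=0.0)
    return total / 101
```

For two ground-truth boxes and three ranked detections marked `[True, False, True]`, the interpolated precision is 1.0 up to recall 0.5 and 2/3 beyond it, so the AP is (51 × 1 + 50 × 2/3)/101 ≈ 0.835.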

4.1.2 Implementation details

NAN-DETR is implemented in PyTorch and trained on 8 NVIDIA A100 GPUs. We use the AdamW optimizer with a base learning rate of 10⁻⁴ and a lower learning rate of 10⁻⁵ for the backbone. During training, the maximum gradient norm is clipped at 0.1, and the positional encoding temperature is set to 20. Both the encoder and the decoder have 6 layers, each with a feedforward dimension of 2048 and a hidden dimension of 256, and no dropout is applied. The model uses 8 attention heads and processes 900 queries, each using 4 points in both the encoder and the decoder. ReLU serves as the activation function, and FrozenBatchNorm2d is used for batch normalization. The matching cost weights are 2.0 for class prediction, 5.0 for bounding boxes, and 2.0 for CIoU. The classification loss coefficient is 1.0, and the bounding box and CIoU loss coefficients are 5.0 and 2.0, respectively. Additionally, the focal loss alpha parameter is 0.25, and the noise parameter σ is fixed at 0.05. These hyperparameters were tuned through extensive experimentation to optimize model performance.
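The reported hyperparameters can be collected into a single configuration object, which makes them easy to check against the text. The field names below are hypothetical, loosely modeled on common DETR codebases, and are not taken from the NAN-DETR implementation; the number of decoders k is not stated in this section and is therefore omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NANDETRConfig:
    """Training hyperparameters as reported in Section 4.1.2 (field names are hypothetical)."""
    optimizer: str = "AdamW"
    lr: float = 1e-4               # base learning rate
    lr_backbone: float = 1e-5      # lower learning rate for the backbone
    clip_max_norm: float = 0.1     # maximum gradient norm
    pe_temperature: int = 20       # positional encoding temperature
    enc_layers: int = 6
    dec_layers: int = 6
    dim_feedforward: int = 2048
    hidden_dim: int = 256
    dropout: float = 0.0           # no dropout
    nheads: int = 8
    num_queries: int = 900
    enc_n_points: int = 4
    dec_n_points: int = 4
    activation: str = "relu"
    # Matching-cost weights
    set_cost_class: float = 2.0
    set_cost_bbox: float = 5.0
    set_cost_ciou: float = 2.0
    # Loss coefficients
    cls_loss_coef: float = 1.0
    bbox_loss_coef: float = 5.0
    ciou_loss_coef: float = 2.0
    focal_alpha: float = 0.25
    noise_sigma: float = 0.05      # sigma of the centralization noising mechanism
    num_gpus: int = 8
```

Freezing the dataclass keeps a run's settings immutable once created; variations for ablations can be made with `dataclasses.replace(cfg, noise_sigma=...)`.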

4.2 Baseline methods

We compare NAN-DETR with various state-of-the-art DETR variants:

• Conditional-DETR (Meng et al., 2021; Chen X. et al., 2022): Conditional-DETR introduces key methodological improvements over DETR, primarily by enhancing the query mechanism, decoder module, and matching strategy.

• Anchor-DETR (Wang et al., 2022): Anchor-DETR brings significant enhancements to the original DETR by incorporating object queries that are designed around anchor points, a concept widely utilized in CNN-based detectors.

• DAB-DETR (Liu S. et al., 2022): DAB-DETR enhances the original DETR by introducing dynamic anchor boxes as queries, with both their position and size being dynamically adjusted layer by layer.

• AdaMixer (Gao et al., 2022): Compared with DETR, AdaMixer features adaptive 3D feature sampling, where queries dynamically sample features across different spatial and scale dimensions.

• Deformable-DETR (Zhu et al., 2021): Deformable-DETR advances the original DETR by incorporating a deformable attention module that focuses on a limited set of key sampling points near a reference point, rather than considering all spatial locations.

• DN-Deformable-DETR (Li et al., 2022): DN-Deformable-DETR enhances the original DETR by implementing a denoising training technique that stabilizes the bipartite graph matching process, which is often unstable during the early stages of training. This technique involves inputting noisy ground truth bounding boxes into the transformer decoder and training the model to accurately reconstruct the original boxes, thereby speeding up convergence and boosting overall model performance.

• H-Deformable-DETR (Jia et al., 2023): H-Deformable-DETR enhances the original DETR by introducing a hybrid matching strategy, which integrates one-to-one matching with an additional one-to-many matching branch during the training process.

• DINO-Deformable-DETR (Zhang et al., 2023): DINO improves upon DETR by introducing several key advancements: a contrastive denoising training method to handle noisy data, a mixed query selection strategy to better initialize queries, and a “look forward twice” scheme that enhances the box prediction process by refining parameters from both the current and subsequent layers.

• Co-Deformable-DETR (Zong et al., 2023): Co-Deformable-DETR improves upon DETR by introducing a collaborative hybrid assignment training scheme with auxiliary heads that combines one-to-one and one-to-many label assignments.

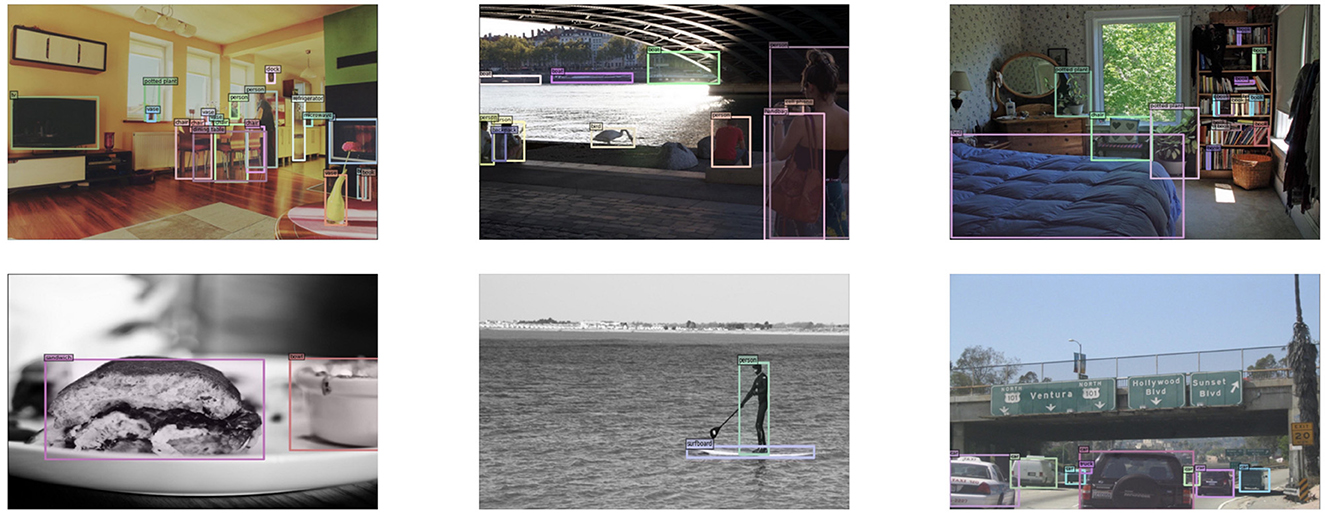

4.3 Main results

NAN-DETR performs well on the COCO dataset; example detections are visualized in Figure 4.

Figure 4. Visualizations of COCO dataset by NAN-DETR with ResNet-50.

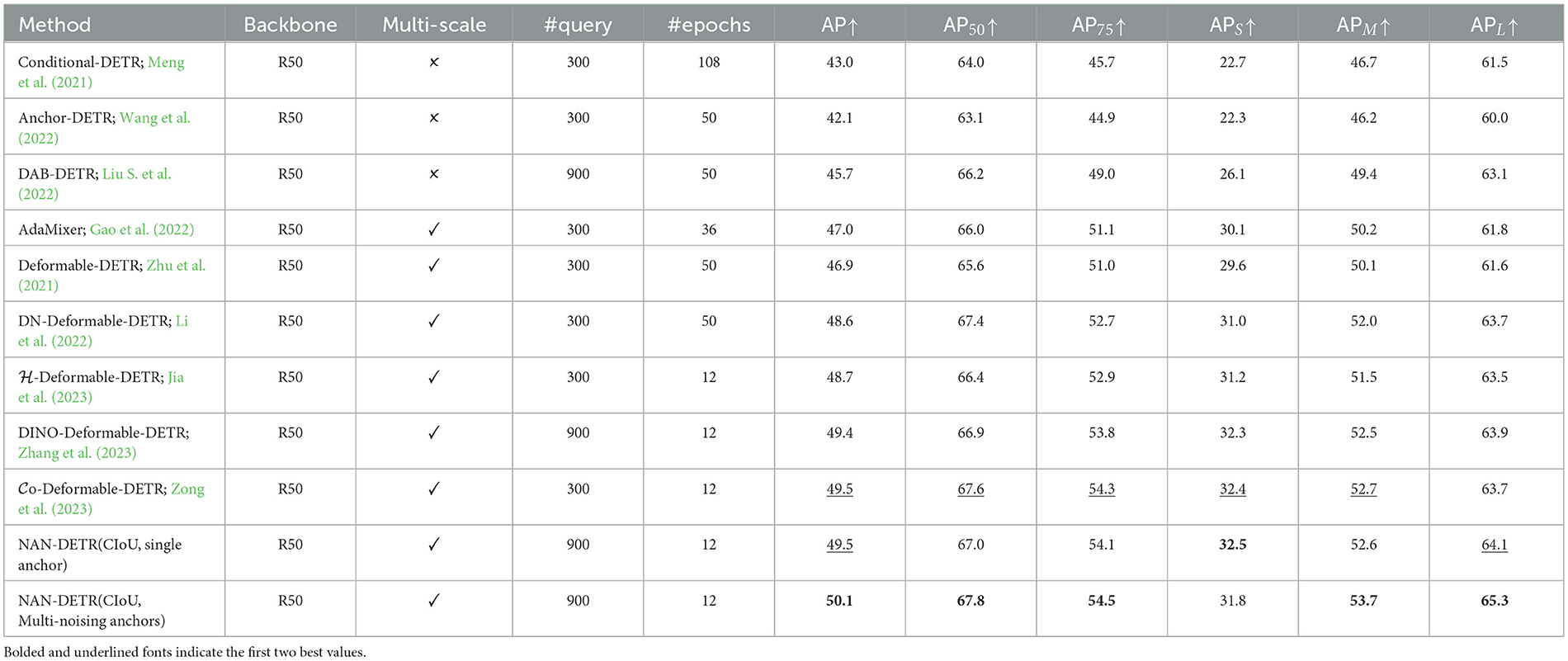

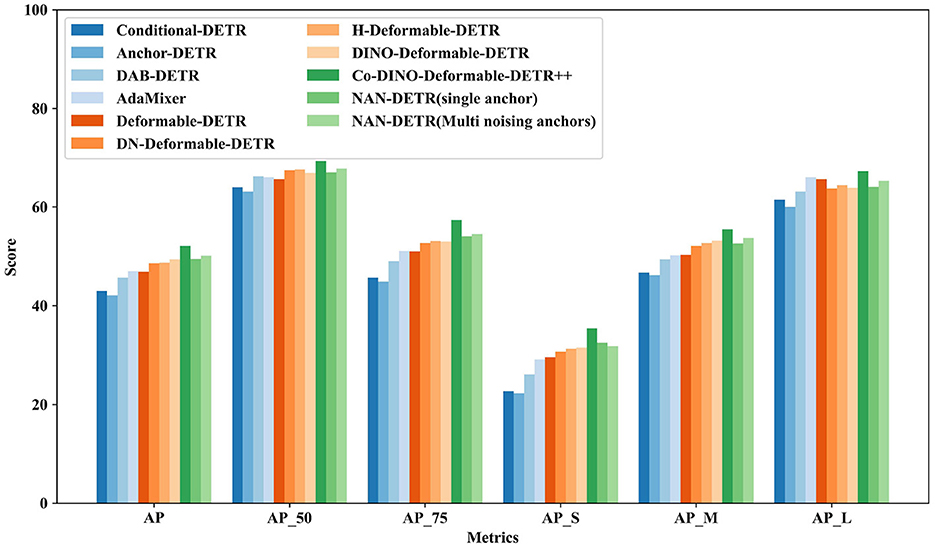

4.3.1 ResNet-50 backbone

The performance of various DETR variants using the ResNet-50 backbone in Table 1 and Figure 5 demonstrates significant differences in object detection capabilities. Methods such as Conditional-DETR (Meng et al., 2021; Chen X. et al., 2022) and Anchor-DETR (Wang et al., 2022), which reintroduce anchor boxes and conditional queries, show moderate performance with AP scores of 43.0% and 42.1%, respectively. These methods improve query interpretability but still lag behind more advanced models. DAB-DETR (Liu S. et al., 2022) and AdaMixer (Gao et al., 2022), incorporating dynamic anchor boxes and adaptive mixing strategies, achieve better AP scores of 45.7% and 47.0%, respectively, showing enhanced query formulation and faster convergence.

Table 1. Comparison to the baselines on COCO val with ResNet-50.

Figure 5. Comparison to the baselines DETR variants on COCO val with ResNet-50.

More advanced methods such as DN-Deformable-DETR (Li et al., 2022) and H-Deformable-DETR (Jia et al., 2023) combine deformable attention with denoising training and hybrid matching, respectively, reaching higher AP scores of 48.6% and 48.7%. DINO-Deformable-DETR (Zhang et al., 2023) further improves upon these techniques, achieving an AP of 49.4%. Co-Deformable-DETR (Zong et al., 2023) also performs strongly with an AP of 49.5%, showcasing the effectiveness of collaborative hybrid assignments and the importance of detection heads.

However, the top performer in this category is NAN-DETR with multi-noising anchors, which achieves an AP of 50.1% and outperforms all other DETR variants in Table 1. The CIoU loss also improves bounding box predictions, contributing to higher AP75 scores. As Figure 7 shows, multi-noising anchors slightly reduce performance on small objects, but NAN-DETR consistently excels at detecting medium and large objects. The APS and APL results indicate that the centralization noising mechanism effectively resolves conflicts between multiple anchors, with the clearest benefit in large object detection.

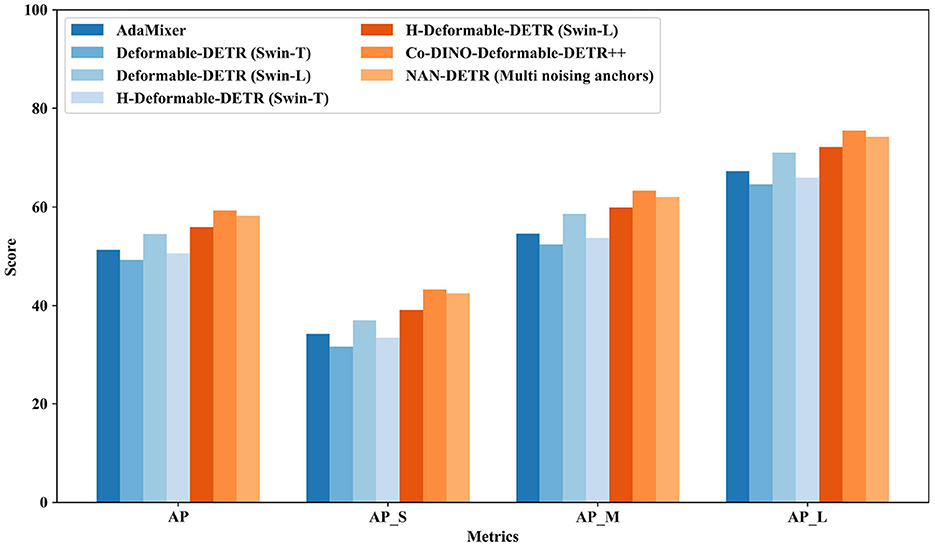

4.3.2 Swin backbone

With Swin backbones (Table 2 and Figure 6), performance improves considerably, particularly for models that exploit the advanced features of Swin Transformers. AdaMixer (Gao et al., 2022) with a Swin-S backbone achieves an AP of 51.3%, showing good performance with adaptive mixing. Deformable-DETR (Zhu et al., 2021) with Swin-T and Swin-L backbones shows significant improvements, with AP scores of 49.3% and 54.5%, respectively, by leveraging deformable attention modules to enhance detection accuracy.

Table 2. Comparison to the baselines on COCO val with Swin.

Figure 6. Comparison to the baselines DETR variants on COCO val with Swin.

H-Deformable-DETR (Jia et al., 2023) continues this trend with Swin-T and Swin-L backbones, achieving AP scores of 50.6% and 55.9%, respectively. Co-Deformable-DETR (Zong et al., 2023) with the Swin-L backbone achieves a strong AP of 58.5%, showcasing the effectiveness of collaborative hybrid assignments and the importance of detection heads. This method also achieves the highest APM of 62.4%, indicating superior performance in detecting medium-sized objects.

NAN-DETR with the Swin-L backbone achieves an AP of 58.2%, only 0.3 points below Co-Deformable-DETR. However, it outperforms all other methods on APL with a score of 74.2%, demonstrating exceptional performance in detecting large objects. This suggests that NAN-DETR's decoder-based multi-anchor strategy and centralization noising mechanism are particularly effective for tasks requiring precise detection of larger objects.

These results show that Co-Deformable-DETR and NAN-DETR excel in different aspects of object detection: Co-Deformable-DETR leads in medium-sized object detection, while NAN-DETR stands out in large object detection. In NAN-DETR, the CIoU loss further contributes to accurate bounding box prediction. Both models are therefore valuable for a wide range of object detection scenarios.

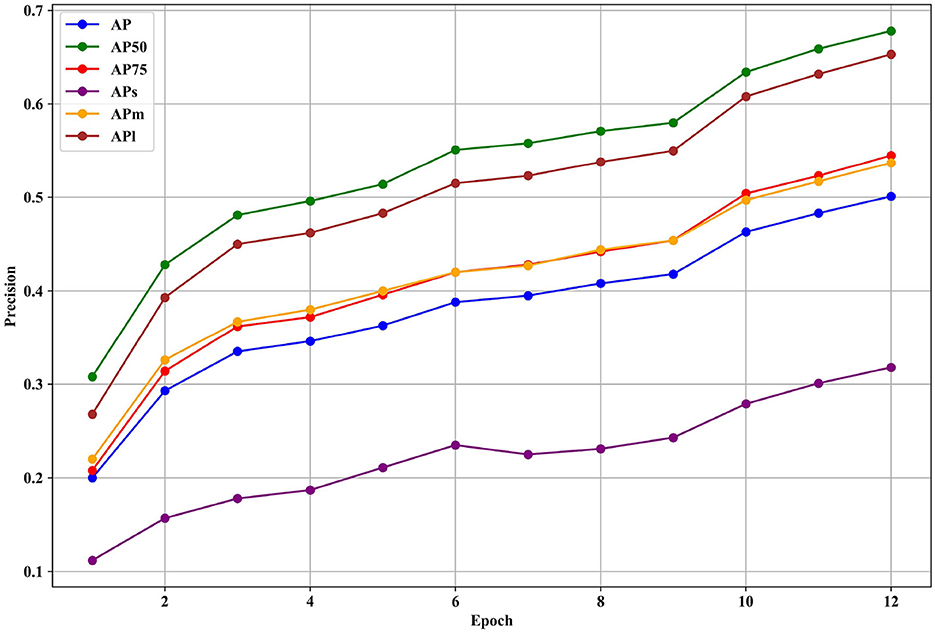

4.3.3 Overall analysis

Figure 7 presents the precision metrics of the NAN-DETR model on the COCO dataset, focusing on the effects of the CIoU loss and multi-noising anchors. The figure shows how NAN-DETR performs in terms of Average Precision (AP) across different IoU thresholds and object sizes after 12 epochs of training with the ResNet-50 backbone.

Figure 7. Precision metrics of NAN-DETR with CIoU and multi-noising anchors on the COCO dataset. All detectors are trained with a ResNet-50 backbone for 12 epochs.

Analysis of Performance:

• AP (average precision) improvement: the NAN-DETR model with the CIoU loss and multi-noising anchors achieves an AP of 50.1%, which is a significant improvement over other configurations, including the baseline NAN-DETR with single anchors and no CIoU. This suggests that the combination of CIoU and multi-noising anchors contributes to more accurate detection, especially for bounding box predictions.

• Small object detection: while the overall performance shows improvement, the precision for small objects (APS) sees a slight decrease in the model with multi-noising anchors. The APS drops from 32.5% in the single-anchor configuration to 31.8% with multi-noising anchors. This could indicate that the centralization noising mechanism, while beneficial overall, may introduce challenges in detecting smaller objects where precise bounding box predictions are critical.

• Medium and large object detection: the model demonstrates robustness in detecting medium and large objects, with APM (AP for medium objects) and APL (AP for large objects) improving to 53.7% and 65.3%, respectively. The consistent improvement in these metrics highlights the effectiveness of th
