where $\alpha$ represents the number of corresponding permutations; $\alpha$ is a polynomial coefficient subject to the additional constraints $r_1, r_2, \ldots, r_c \neq 0$. This tensor construction method preserves the original hyperedge structure as far as possible and reflects the associative patterns between different nodes in the hypergraph. To better understand this process, we provide an illustrative example:

Example 4.1. For a given hyperedge $e_1' = \{v_1, v_2, v_3\}$, to construct a 2-order adjacency tensor we need to consider all permutations of length 2 of the nodes $v_1, v_2, v_3$. That is, hyperedge $e_1'$ must fill 2 positions from its 3 nodes, so one of the 3 nodes is discarded each time, yielding $\{v_1, v_2\}$, $\{v_1, v_3\}$, $\{v_2, v_3\}$. The adjacency tensor coefficient is then calculated as $\frac{2}{3}$, where the numerator is the cardinality of hyperedge $e_1'$, and the denominator is the number of permutations in this case.
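The enumeration in Example 4.1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the helper names `sub_hyperedges` and `fill_tensor` are hypothetical, the tensor is stored as a sparse dictionary, and the weight `2/3` is passed in as an assumed reading of the example's coefficient rather than derived from a formula.

```python
from fractions import Fraction
from itertools import combinations, permutations


def sub_hyperedges(nodes, order):
    """Return every `order`-sized subset of a hyperedge
    (one subset per choice of nodes to discard)."""
    return list(combinations(nodes, order))


def fill_tensor(nodes, order, weight):
    """Store `weight` at every index permutation of every subset, so the
    sparse tensor (a dict keyed by index tuples) is symmetric."""
    tensor = {}
    for subset in sub_hyperedges(nodes, order):
        for index in permutations(subset):
            tensor[index] = weight
    return tensor


edge = (1, 2, 3)                  # stands for the hyperedge with nodes v1, v2, v3
pairs = sub_hyperedges(edge, 2)   # three pairs, one node dropped in each
# Assumption: the coefficient of Example 4.1 is taken to be 2/3.
tensor = fill_tensor(edge, 2, Fraction(2, 3))
print(pairs)
print(len(tensor))
```

Each of the three pairs contributes two ordered index tuples, so the sparse 2-order tensor holds six equal entries and no diagonal entries.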

4.2 Graph contrastive learning

Graph contrastive learning aims to extract effective node features by comparing the feature differences between the original graph and the tensorized hypergraph. To comprehensively understand the data from different perspectives, we employ GCN for encoding learning on the original graph $G$. This process maps node features from a high-dimensional space to a low-dimensional feature space denoted as $\mathbb{R}^{N \times k}$, resulting in the node feature vector $Z_1$ under the original graph, formulated as Eq. (5),

$$Z_1 = \sigma\left(\hat{D}^{-\frac{1}{2}} \cdot \hat{A} \cdot \hat{D}^{-\frac{1}{2}} \cdot X \cdot W\right), \tag{5}$$

where $\hat{A} = A + I$ denotes the adjacency matrix with self-loops, $\hat{D}_{ii} = \sum_{j} \hat{A}_{ij}$ represents the diagonal degree matrix, $\sigma(\cdot)$ signifies the non-linear transformation function, and $W$ corresponds to the learnable weight matrix. Additionally, alignment between node features and the adjacency tensor is achieved through a learnable weight matrix. The outer product pooling technique is then employed on the adjacency tensor to perform tensor convolution on $G'$, facilitating information aggregation, formulated as Eq. (6),

$$Z_2 = \sigma\left(\hat{T} \cdot X \cdot \Theta\right), \tag{6}$$

where $\sigma(\cdot)$ represents the non-linear transformation function and $\hat{T}$ denotes the adjacency tensor with a self-loop term inserted, $\hat{T} = T + \sum_{j, j \neq i} \varphi_i \xi_{ij}$, in which $\xi_{ij}$ is defined as 1 when $i = j$ and 0 otherwise. Inserting self-loops strengthens the model's focus on node-specific information and helps it learn more comprehensive node representations. $Z_2$ denotes the node representation information from the tensor perspective. To maximize the similarity between positive samples and minimize the similarity between negative samples, a contrastive loss function is employed to enhance the discriminative power of node embeddings. We treat the embeddings of the same node in the two views as a positive pair and consider all other nodes as negative samples. Furthermore, we optimize the positive sample pairs $(z_{1,i}, z_{2,i})$ in a pairwise manner, formulated as Eq. (7),

$$\mathcal{L}(z_{1,i}, z_{2,i}) = \log \frac{e^{\theta(z_{1,i}, z_{2,i})/\tau}}{e^{\theta(z_{1,i}, z_{2,i})/\tau} + \sum_{k=1}^{N} \mathbb{1}_{[k \neq i]}\, e^{\theta(z_{1,i}, z_{2,k})/\tau}}, \tag{7}$$

where $\tau$ is a temperature parameter that adjusts the distribution of similarities between samples in $\mathcal{L}(z_{1,i}, z_{2,i})$, and $\mathbb{1}_{[k \neq i]} \in \{0, 1\}$ is an indicator function, taking the value 1 only when $k \neq i$. Considering the symmetry between views, we employ a symmetric loss function to reflect the symmetric features of node embeddings between the two views. Ultimately, our overall loss function $\mathcal{L}$ is formulated as Eq. (8),

$$\mathcal{L} = \frac{1}{2N} \sum_{i=1}^{N} \left( \mathcal{L}(z_{1,i}, z_{2,i}) + \mathcal{L}(z_{2,i}, z_{1,i}) \right). \tag{8}$$

5 Experiments

In this section, we first introduce the datasets utilized in our experiments. Subsequently, we compare our method with baseline approaches and conduct relevant ablation experiments. Finally, we perform additional experiments to further validate the superiority of the proposed method presented in this paper.
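Before turning to the experiments, the contrastive objective of Eqs. (7) and (8) in Section 4.2 can be sketched in plain Python. This is a minimal illustration rather than the authors' implementation. It assumes that the critic $\theta$ is cosine similarity and that the negatives for node $i$ are the embeddings of all other nodes in the opposite view. The function names and the default `tau` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity, used here as the critic theta (an assumption)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def pair_objective(za, zb, i, tau):
    """Eq. (7) for anchor za[i]: log-ratio of the positive pair against the
    positive pair plus all cross-view negatives (k != i)."""
    pos = math.exp(cosine(za[i], zb[i]) / tau)
    neg = sum(math.exp(cosine(za[i], zb[k]) / tau)
              for k in range(len(zb)) if k != i)
    return math.log(pos / (pos + neg))


def symmetric_objective(z1, z2, tau=0.5):
    """Eq. (8): average of both view directions over all N nodes."""
    n = len(z1)
    total = sum(pair_objective(z1, z2, i, tau) + pair_objective(z2, z1, i, tau)
                for i in range(n))
    return total / (2 * n)


# Toy embeddings (made up): two nodes, two dimensions, two views.
view_1 = [[1.0, 0.0], [0.0, 1.0]]
aligned = symmetric_objective(view_1, view_1)
swapped = symmetric_objective(view_1, [view_1[1], view_1[0]])
print(aligned, swapped)
```

As written, the quantity is a log-ratio and therefore never positive. It moves toward zero as positive pairs become more similar than negatives, so a training loop would maximize it or, equivalently, minimize its negative.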

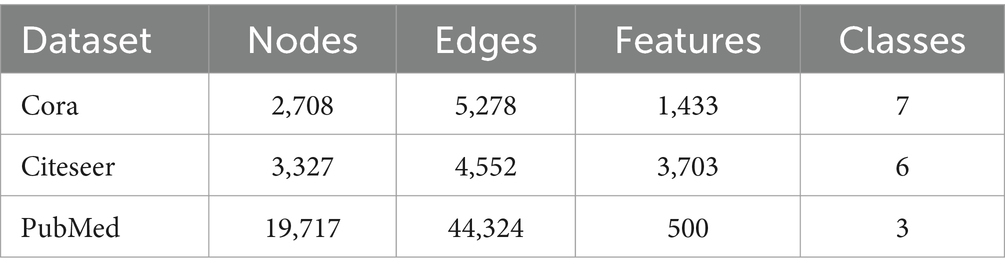

5.1 Datasets

To validate the effectiveness of TP-GCL, we designed two sets of experiments, namely node classification tasks and graph classification tasks. The details of the datasets are provided in Table 2.

Table 2. Statistics of datasets used in experiments.

Our aim is to comprehensively evaluate the performance of the TP-GCL model in node classification. Node classification tasks focus on categorizing nodes with different features and labels. The datasets Cora, Citeseer, and PubMed belong to the academic network domain, where nodes represent papers, and edges represent citation relationships between papers. By utilizing these datasets, we validate the effectiveness and generalization capability of the TP-GCL method on graph data of various sizes and scales.

5.2 Baselines

The baseline models for node classification tasks can be categorized into two groups. The first group includes semi-supervised learning methods such as ChebNet (Tang et al., 2019), GCN (Kipf and Welling, 2016), GAT (Veličković et al., 2017), and GraphSAGE (Hamilton et al., 2017), which utilize node labels during the learning process. The second group comprises self-supervised methods, including DGI (Veličković et al., 2018), GMI (Peng et al., 2020), MVGRL (Hassani and Khasahmadi, 2020), GraphCL (You et al., 2020), GraphMAE (Hou et al., 2022), H-GCL (Zhu et al., 2023), and US-GCL (Zhao et al., 2023), which do not rely on node labels. The proposed TP-GCL also falls into the category of self-supervised graph contrastive learning methods.

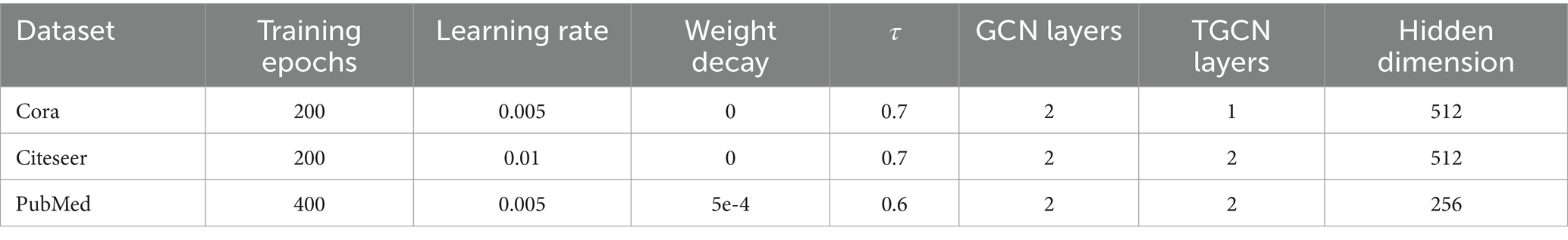

5.3 Experiment implementation details

The experiments were run on an NVIDIA A40 GPU with 48 GB of VRAM, on a machine with 80 GB of CPU memory. TP-GCL was implemented with PyTorch 1.12.1, PyTorch Geometric, and the PyGCL library. The code for our experiments will be made publicly available in upcoming work. The optimal parameter settings are listed in Table 3. In the table, Training epochs is the total number of epochs used for training, Learning rate controls the step size of model parameter updates, Weight decay is a regularization coefficient used to prevent overfitting, τ is the temperature coefficient that sets the focus on hard negative samples during contrastive learning, and Hidden dimension is the size of the hidden layer, which affects the complexity and expressive power of feature learning.

Table 3. Detailed parameter setting.

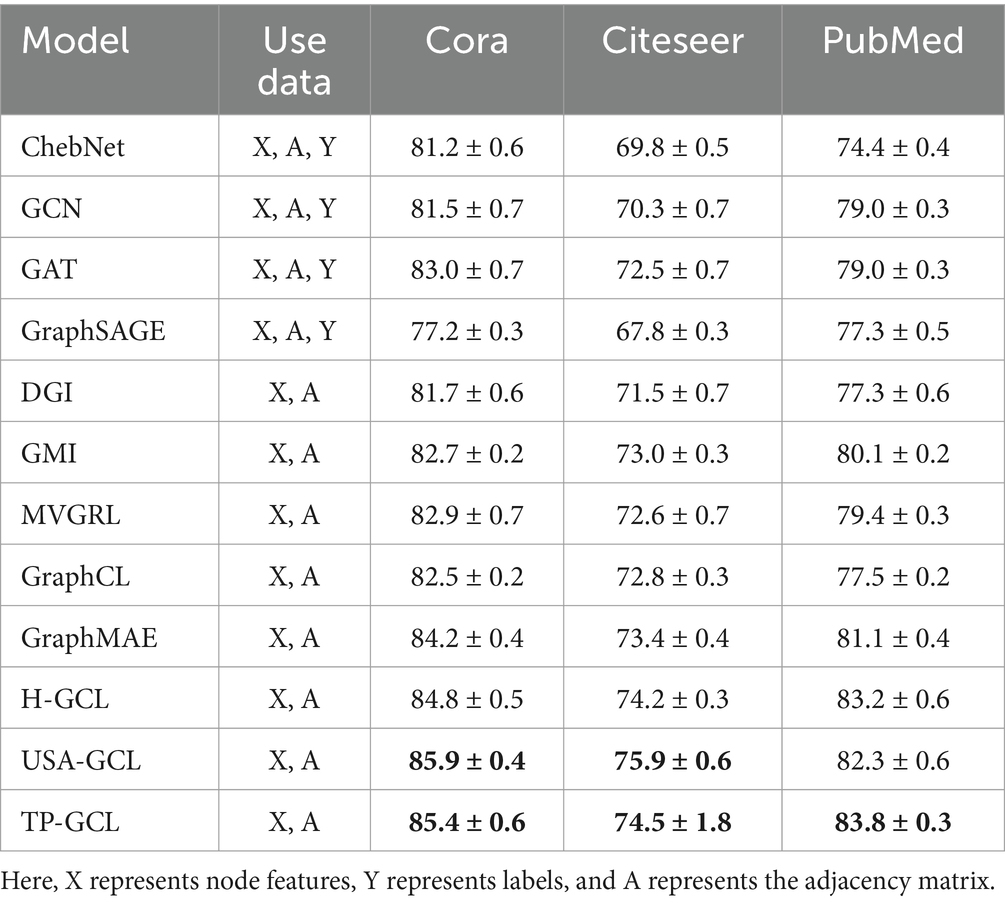

5.4 Experimental results

We validated the effectiveness of TP-GCL on node classification tasks, and Table 4 presents the performance comparison on the Cora, Citeseer, and PubMed datasets.

Table 4. The performance of accuracy on node classification tasks.

The results in Table 4 clearly demonstrate the superior performance of TP-GCL in node classification tasks. TP-GCL exhibits high accuracy on three different datasets, Cora, Citeseer, and PubMed, surpassing other baseline models. This can be attributed to several advantages:

1. TP-GCL comprehensively captures the structural features of graphs in complex spaces using high-order adjacency tensors. Compared to traditional methods, high-order tensor representations provide richer information, facilitating a better understanding of both local and global structures in the graph. This allows TP-GCL to more accurately learn abstract representations of nodes.

2. Through the contrastive learning mechanism of anchor graph-tensorized hypergraphs, TP-GCL sensitively learns subtle differences and similarities between nodes. This learning approach makes TP-GCL more discriminative, enabling accurate differentiation of nodes from different categories.

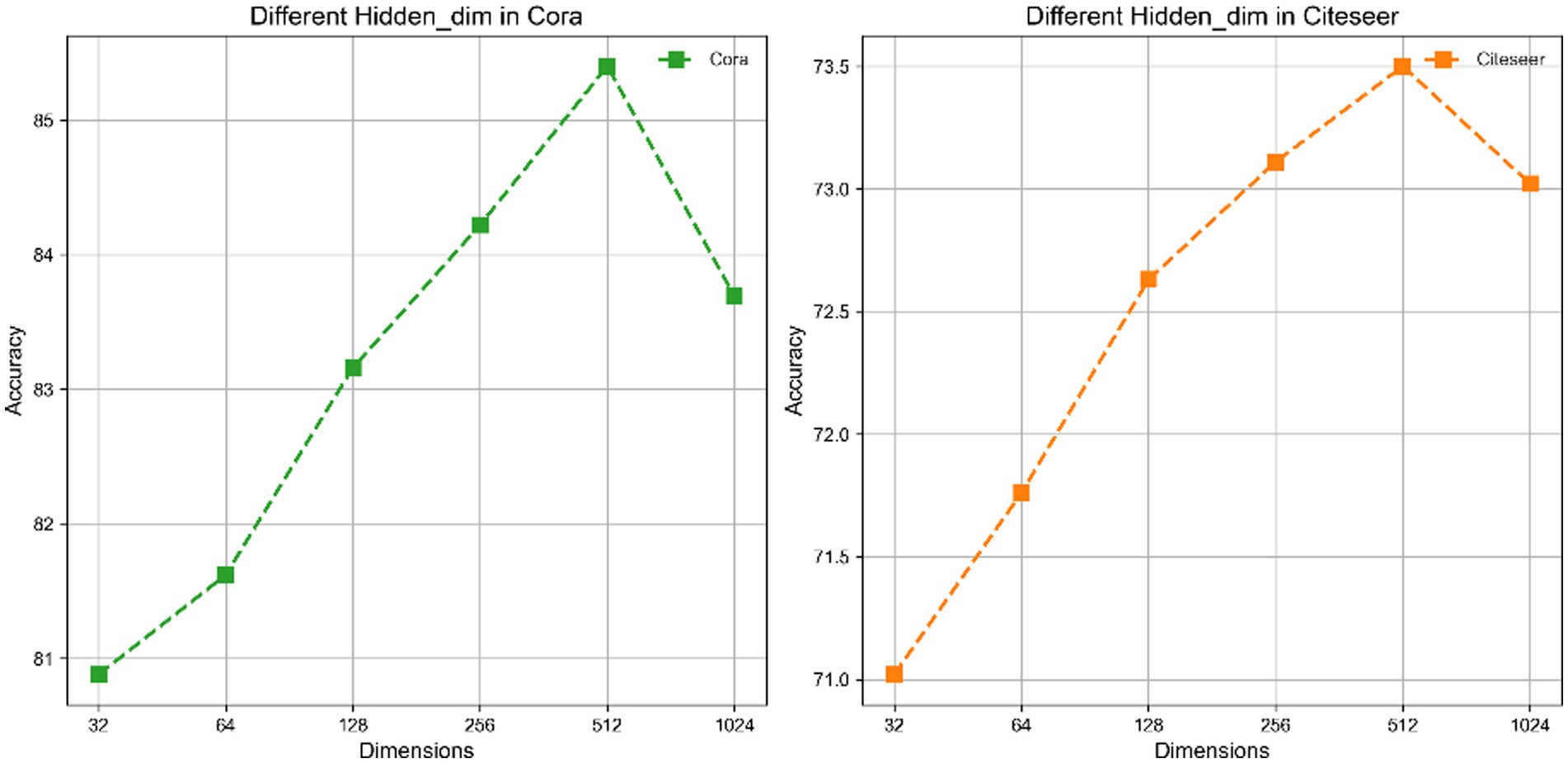

5.5 Hyperparametric sensitivity

This analysis focuses on three key hyperparameters: the hidden layer dimension, the Tau value, and the learning rate. First, the hidden layer dimension plays a crucial role in the performance of TP-GCL. By adjusting the dimension of the hidden layer, we explored the impact of different dimensions on the model's performance on the Cora and Citeseer datasets. As shown in Figure 2, increasing the dimension of the hidden layer within the range of 32 to 512 enhances the fitting capability of TP-GCL, with the optimal performance reached when the dimension equals 512. This is because a higher-dimensional hidden layer helps capture more complex data patterns. However, excessively high dimensions, such as 1,024, can lead to overfitting.

Figure 2. Performance of hidden layer dimension on Cora and Citeseer.

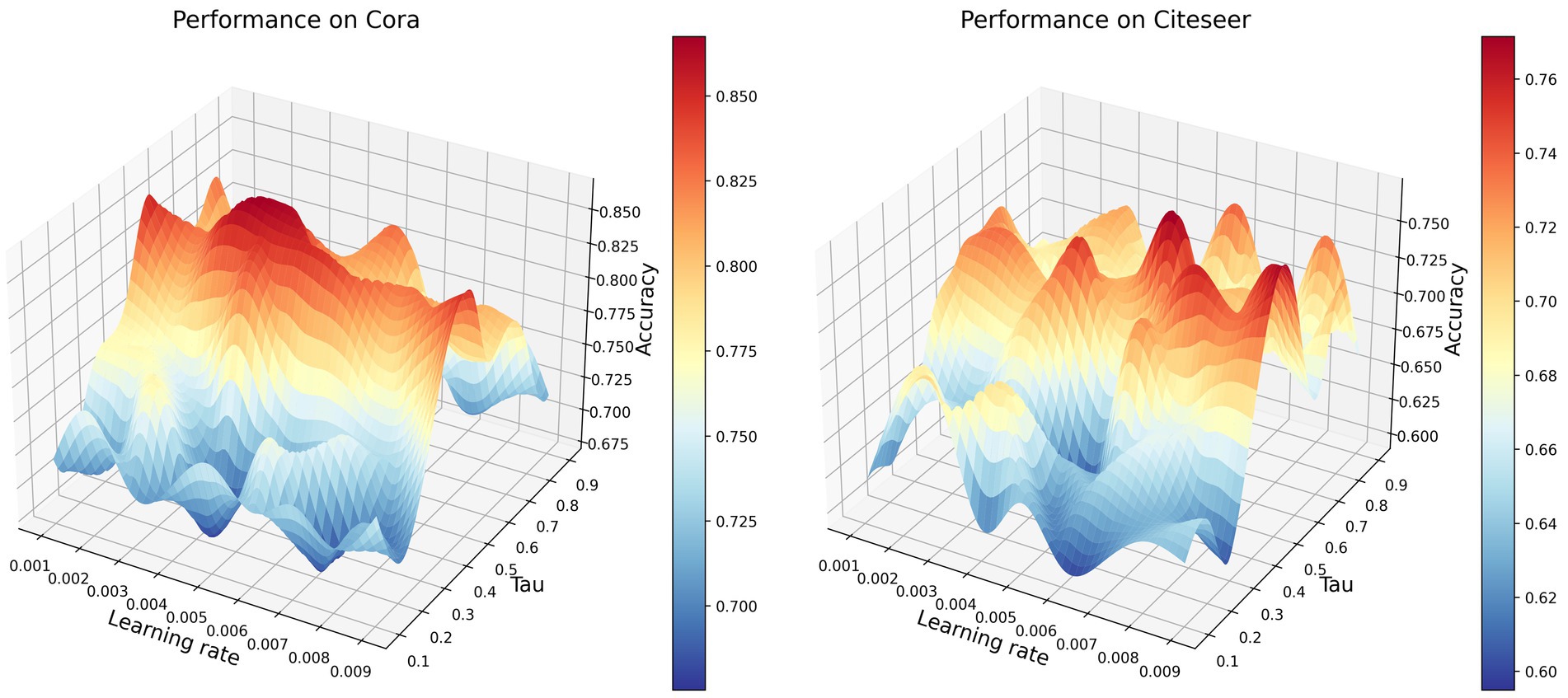

Next, we focused on the hyperparameters Tau and learning rate. Tau is typically used to control the smoothness of the distribution of similarities in contrastive learning, while the learning rate is used to regulate the speed of model parameter updates during training. We plotted the parameter space with the x-axis representing the learning rate in the range [0.001 ~ 0.009], the y-axis representing Tau in the range [0.1 ~ 0.9], and the z-axis representing the accuracy of node classification, as shown in Figure 3.

Figure 3. Performance of different learning rates and tau values on the Cora and Citeseer datasets.

From Figure 3, it can be observed that the variation in accuracy is influenced by changes in the learning rate under different Tau values. When Tau values are low (0.1–0.3), combinations within the learning rate range of 0.001–0.004 generally result in lower accuracy. This might be attributed to the slower parameter update speed caused by the lower learning rates in this range, preventing the model from fully utilizing information in the dataset and thereby hindering accurate node differentiation. Additionally, lower Tau values imply more sensitivity in similarity calculations, potentially causing similarity to concentrate too much between nodes, making effective node discrimination challenging and consequently reducing accuracy. On the other hand, when Tau values are high (0.6–0.9), combinations within the learning rate range of 0.008–0.009 exhibit relatively higher accuracy. This is possibly due to the higher learning rates in this range accelerating the model’s parameter update speed, aiding the model in better learning the dataset’s features. Furthermore, higher Tau values smooth out the similarity distribution, reducing the model’s sensitivity to noise and subtle differences in the data, allowing the model to better discriminate between nodes and thereby improving accuracy.
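The smoothing effect of Tau described above can be illustrated with a temperature-scaled softmax over a handful of similarity scores. This is only a sketch: the scores are made up rather than taken from the experiments, and the helper names are hypothetical. Entropy is used here as one convenient measure of how flat the resulting distribution is.

```python
import math


def softmax_with_temperature(similarities, tau):
    """Turn similarity scores into a distribution, scaling each score by 1/tau."""
    scaled = [s / tau for s in similarities]
    peak = max(scaled)                       # subtract the max for stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def entropy(probs):
    """Shannon entropy in nats; higher means a flatter distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


sims = [0.9, 0.7, 0.2, -0.1]                 # hypothetical cosine similarities
for tau in (0.1, 0.5, 0.9):
    probs = softmax_with_temperature(sims, tau)
    print(tau, [round(p, 3) for p in probs], round(entropy(probs), 3))
```

With a small Tau almost all of the probability mass falls on the most similar sample, while a larger Tau spreads the mass more evenly, which matches the qualitative behaviour discussed for Figure 3.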

6 Conclusion

Existing graph neural network methods struggle to capture global dependencies and diverse representations, and they have difficulty fully revealing the inherent complexity of graph data. In response, this paper proposes a novel tensor-perspective graph contrastive learning method, TP-GCL, which aims to understand the structure of graphs and the relationships between nodes comprehensively and in depth. First, TP-GCL transforms graphs into tensorized hypergraphs, introducing higher-order information representation while preserving the original topological structure of the graph. This addresses the limitations of existing methods in capturing the complex structure of graphs and the relationships between nodes. Subsequently, TP-GCL contrasts the differences and similarities between anchor graphs and tensorized hypergraphs to enhance the model's sensitivity to global information in the graph. Experimental results on public datasets validate the performance of TP-GCL in the analysis of complex graph structures.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Author contributions

ML: Formal analysis, Investigation, Methodology, Validation, Visualization, Writing – original draft, Writing – review & editing. LM: Formal analysis, Methodology, Writing – original draft, Writing – review & editing. ZY: Conceptualization, Supervision, Validation, Writing – review & editing. YY: Formal analysis, Methodology, Software, Writing – review & editing. SC: Formal analysis, Methodology, Writing – review & editing. YX: Data curation, Validation, Writing – review & editing. HZ: Conceptualization, Funding acquisition, Supervision, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work is partially supported by the Construction of Innovation Platform Program of Qinghai Province of China under Grant no. 2022-ZJ-T02. ML and LM have contributed equally to this work and should be regarded as co-first authors.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Cai, L., Li, J., Wang, J., and Ji, S. (2020). Line graph neural networks for link prediction. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2021, 5103–5113.

Feng, G., Wang, H., and Wang, C. (2023). Search for deep graph neural networks. Inf. Sci. 649:119617. doi: 10.1016/j.ins.2023.119617

Gao, C., Zheng, Y., Li, N., Li, Y., Qin, Y., Piao, J., et al. (2023). A survey of graph neural networks for recommender systems: challenges, methods, and directions. ACM Trans. Recomm. Syst. 1, 1–51. doi: 10.1145/3568022

Hamilton, W., Ying, Z., and Leskovec, J. (2017). Inductive representation learning on large graphs. In: Proceedings of the advances in neural information processing systems, Long Beach, NJ: MIT, p. 30.

Hassani, K., and Khasahmadi, A H. (2020). Contrastive multi-view representation learning on graphs. In: Proceedings of the international conference on machine learning, Virtual, NJ: ACM, pp. 4116–4126.

Hou, Z., Liu, X., Cen, Y., Dong, Y., Yang, H., Wang, C., et al. (2022). GraphMAE: self-supervised masked graph autoencoders. In: Proceedings of the 28th ACM SIGKDD conference on knowledge discovery and data mining, New York, NJ: ACM, pp. 594–604.

Jin, D., Wang, R., Ge, M., He, D., Li, X., Lin, W., et al. (2022). Raw-gnn: random walk aggregation based graph neural network. arXiv 2022:13953. doi: 10.48550/arXiv.2206.13953

Kim, D., Baek, J., and Hwang, S. J. (2022). Graph self-supervised learning with accurate discrepancy learning. In: Proceedings of the Advances in neural information processing systems, New Orleans, No. 35, pp. 14085–14098.

Kipf, T. N., and Welling, M. (2016). Semi-supervised classification with graph convolutional networks. arXiv 2016:02907. doi: 10.48550/arXiv.1609.02907

Kumar, S., Mallik, A., Khetarpal, A., and Panda, B. S. (2022). Influence maximization in social networks using graph embedding and graph neural network. Inf. Sci. 607, 1617–1636. doi: 10.1016/j.ins.2022.06.075

Lin, X., Zhou, C., Wu, J., Yang, H., Wang, H., Cao, Y., et al. (2023). Exploratory adversarial attacks on graph neural networks for semi-supervised node classification. Pattern Recogn. 133:109042. doi: 10.1016/j.patcog.2022.109042

Liu, Y., Jin, M., Pan, S., Zhou, C., Zheng, Y., Xia, F., et al. (2022). Graph self-supervised learning: a survey. IEEE Trans. Knowl. Data Eng. 35, 1–5900. doi: 10.1109/TKDE.2022.3172903

Liu, X., Li, X., Fiumara, G., and de Meo, P. (2023). Link prediction approach combined graph neural network with capsule network. Expert Syst. Appl. 212:118737. doi: 10.1016/j.eswa.2022.118737

Liu, S., Meng, Z., Macdonald, C., and Ounis, I. (2023). Graph neural pre-training for recommendation with side information. ACM Trans. Inf. Syst. 41, 1–28. doi: 10.1145/3568953

Min, S., Gao, Z., Peng, J., Wang, L., Qin, K., and Fang, B. (2021). STGSN–a spatial–temporal graph neural network framework for time-evolving social networks. Knowl. Based Syst. 214:106746. doi: 10.1016/j.knosys.2021.106746

Peng, Z., Huang, W., Luo, M., Zheng, Q., Rong, Y., Xu, T., et al. (2020). Graph representation learning via graphical mutual information maximization. In: Proceedings of the web conference. Ljubljana, NJ: ACM, 259–270.

Sheng, Z., Zhang, T., Zhang, Y., and Gao, S. (2023). Enhanced graph neural network for session-based recommendation. Expert Syst. Appl. 213:118887. doi: 10.1016/j.eswa.2022.118887

Shi, S., Qiao, K., Yang, S., Wang, L., Chen, J., and Yan, B. (2021). Boosting-GNN: boosting algorithm for graph networks on imbalanced node classification. Front. Neurorobot. 15:775688. doi: 10.3389/fnbot.2021.775688

Shuai, J., Zhang, K., Wu, L., Sun, P., Hong, R., Wang, M., et al. (2022). A review-aware graph contrastive learning framework for recommendation. In: Proceedings of the 45th international ACM SIGIR conference on Research and Development in information retrieval. New York, NJ: ACM, pp. 1283–1293.

Tang, S., Li, B., and Yu, H. (2019). ChebNet: efficient and stable constructions of deep neural networks with rectified power units using chebyshev approximations. arXiv 2019:5467. doi: 10.48550/arXiv.1911.05467

Veličković, P., Cucurull, G., Casanova, A., Romero, A., Bengio, Y., and Liò, P. (2017). Graph attention networks. arXiv 2017:10903. doi: 10.48550/arXiv.1710.10903

Veličković, P., Fedus, W., Hamilton, W. L., Liò, P., Bengio, Y., Hjelm, R. D., et al. (2018). Deep graph infomax. arXiv 2018:10341. doi: 10.48550/arXiv.1809.10341

Wang, K., Zhou, R., Tang, J., and Li, M. (2023). GraphscoreDTA: optimized graph neural network for protein–ligand binding affinity prediction. Bioinformatics 39:btad340. doi: 10.1093/bioinformatics/btad340

Wei, X., Liu, Y., Sun, J., Jiang, Y., Tang, Q., and Yuan, K. (2023). Dual subgraph-based graph neural network for friendship prediction in location-based social networks. ACM Trans. Knowl. Discov. Data 17, 1–28. doi: 10.1145/3554981

Wu, L., Lin, H., Tan, C., Gao, Z., and Li, S. Z. (2021). Self-supervised learning on graphs: contrastive, generative, or predictive. IEEE Trans. Knowl. Data Eng. 35, 4216–4235. doi: 10.1109/TKDE.2021.3131584

Xu, S., Liu, X., Ma, K., Dong, F., Riskhan, B., Xiang, S., et al. (2023). Rumor detection on social media using hierarchically aggregated feature via graph neural networks. Appl. Intell. 53, 3136–3149. doi: 10.1007/s10489-022-03592-3

Yang, L., Zhou, W., Peng, W., Niu, B., Gu, J., Wang, C., et al. (2022). Graph neural networks beyond compromise between attribute and topology. In: Proceedings of the ACM web conference, New York, NJ: ACM, pp. 1127–1135.

Ye, W., Askarisichani, O., Jones, A., and Singh, A. (2020). Learning deep graph representations via convolutional neural networks. IEEE Transactions on Knowledge and Data Engineering, 34, pp. 2268–2279.

You, Y., Chen, T., Sui, Y., Chen, T., Wang, Z., and Shen, Y. (2020). Graph contrastive learning with augmentations. In: Proceedings of the advances in neural information processing systems, Ljubljana, NJ: ACM, pp. 5812–5823.

Zhao, X., Dai, Q., Wu, J., Peng, H., Liu, M., Bai, X., et al. (2022). Multi-view tensor graph neural networks through reinforced aggregation. IEEE Trans. Knowl. Data Eng. 35, 4077–4091. doi: 10.1109/TKDE.2022.3142179

Zhao, L., Qi, X., Chen, Y., Qiao, Y., Bu, D., Wu, Y., et al. (2023). Biological knowledge graph-guided investigation of immune therapy response in cancer with graph neural network. Brief. Bioinform. 24:bbad023. doi: 10.1093/bib/bbad023

Zhao, H., Yang, X., Deng, C., and Tao, D. (2023). Unsupervised structure-adaptive graph contrastive learning. IEEE Trans. Neural Netw. Learn. Syst. 1, 1–14. doi: 10.1109/TNNLS.2023.3341841

Zhou, P., Wu, Z., Wen, G., Tang, K., and Ma, J. (2023). Multi-scale graph classification with shared graph neural network. World Wide Web 26, 949–966. doi: 10.1007/s11280-022-01070-x

Zhu, Y., Xu, Y., Yu, F., Liu, Q., Wu, S., and Wang, L. (2021). Graph contrastive learning with adaptive augmentation. In: Proceedings of the ACM web conference. Ljubljana, NJ: ACM, pp. 2069–2080.

Zhu, J., Zeng, W., Zhang, J., Tang, J., and Zhao, X. (2023). Cross-view graph contrastive learning with hypergraph. Inf. Fusion 99:101867. doi: 10.1016/j.inffus.2023.101867

Zou, M., Gan, Z., Cao, R., Guan, C., and Leng, S. (2023). Similarity-navigated graph neural networks for node classification. Inf. Sci. 633, 41–69. doi: 10.1016/j.ins.2023.03.057
