Analyzing cytosolic calcium oscillations helps decode neuronal firing patterns, synaptic activity, and network dynamics, offering insights into cell activity and states (Del Negro, 2005; Dombeck et al., 2007; Grienberger and Konnerth, 2012; Forsberg et al., 2017). Furthermore, the changes in calcium activity may be indicative of cell responses to downregulation of molecular pathways, epigenetic alterations, or even the effect of treatments or drugs, and disease states (Zhang et al., 2010; Huang et al., 2013; Robil et al., 2015; Britti et al., 2020; Lines et al., 2020; Miller et al., 2023). This makes dynamic calcium activity recordings a crucial tool to investigate physiology and neurological disorders and to design and develop therapeutic interventions.

While we have seen major development in recent years in the imaging tools available to study astrocytes, the software side has been slow to catch up (Stobart et al., 2018a; Aryal et al., 2022; Gorzo and Gordon, 2022). Currently several software packages are available for researchers to analyze calcium activity recordings from brain cells (Table 1). However, most of these packages are primarily developed for neurons, and are often not suitable for astrocytes. The challenge stems from astrocytes' unique physiology, marked by rapid microdomain calcium fluctuations (Stobart et al., 2018b; Curreli et al., 2022) and their ability to alter the morphology during a single recording (Baorto et al., 1992; Anders et al., 2024). Astrocytes exhibit calcium fluctuations that are spatially and temporally diverse, reflecting their integration of a wide range of physiological signals (Smedler and Uhlén, 2014; Bazargani and Attwell, 2016; Papouin et al., 2017; Denizot et al., 2019; Semyanov et al., 2020).

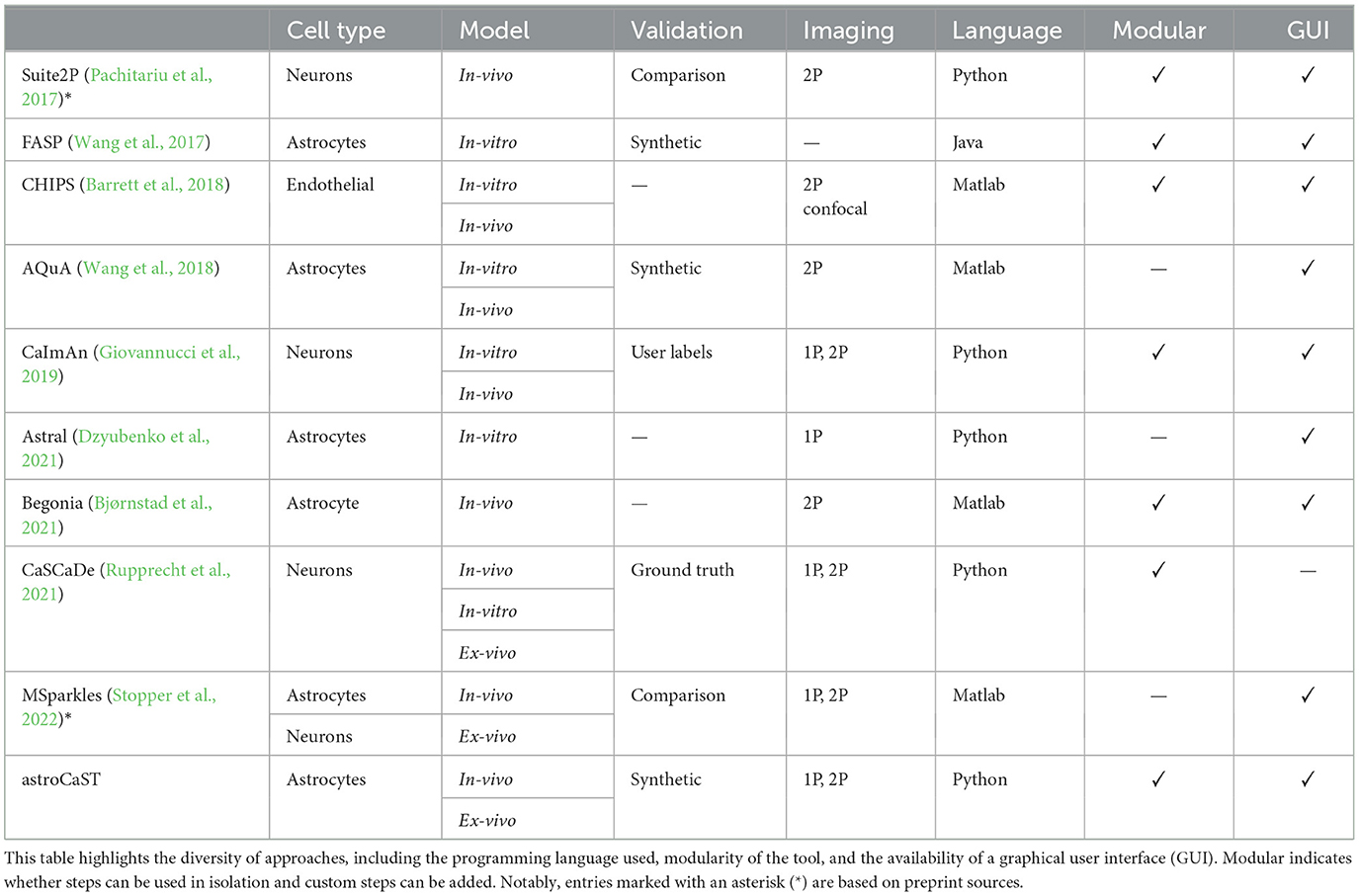

Table 1. Comparison of computational tools for analyzing cellular calcium oscillations.

The available toolkits specifically transcribing astrocytic activity come with several shortcomings (Table 1). A major barrier is the use of proprietary programming languages like MATLAB, which hinders widespread use. Additionally, slow implementations due to a lack of parallelization and Graphics Processing Unit (GPU) acceleration, or RAM usage exceeding most standard setups, impede the analysis of long videos (>5,000 frames). While all compared toolkits offer efficient event detection, most lack support for gaining insight from the extracted events.

AstroCAST, developed in Python for its versatility and ease of use, addresses these shortcomings through a modular design, allowing for customizable pipelines and parallel processing. It optimizes resource use by only loading data as needed, ensuring efficient hardware scaling. Its modular design enables stepwise quality control and flexible customization. Finally, astroCaST includes dedicated modules to analyze common research questions, as compared to other packages that primarily focus on event detection.

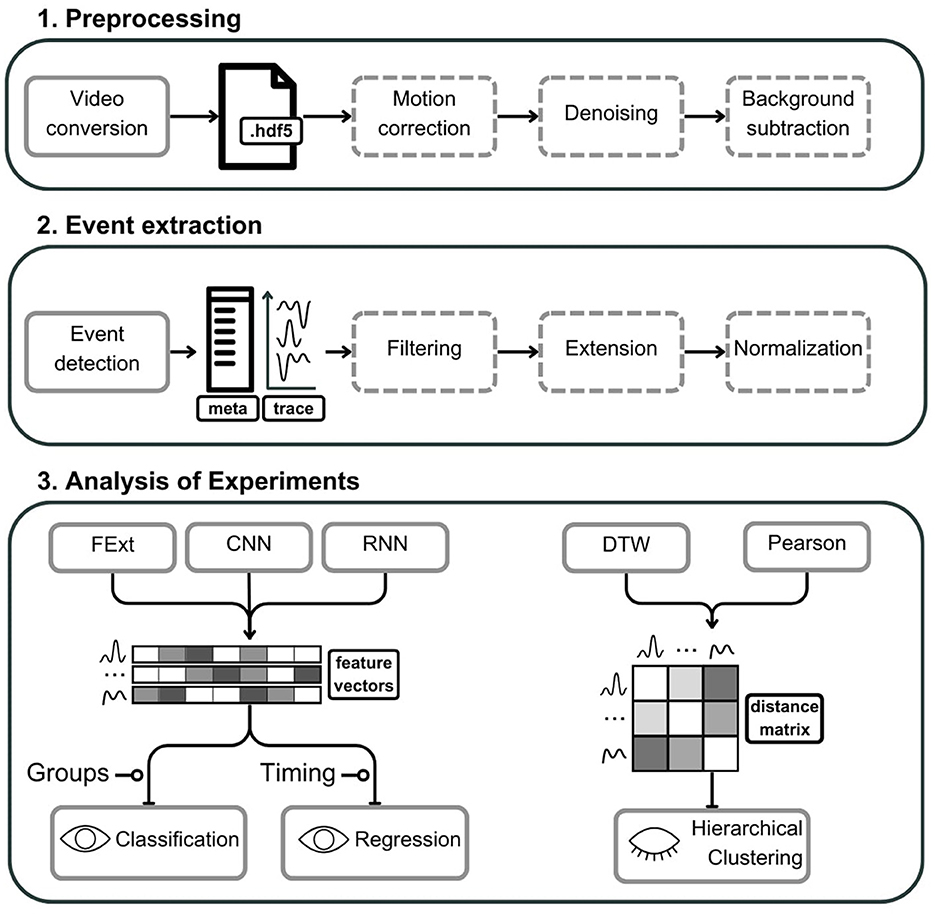

Here, we not only introduce astroCaST but also provide a detailed guide for extracting astrocytic calcium signals from video data, performing advanced clustering and correlating their activity with other physiological signals (Figure 1). AstroCAST extends beyond theory, having been tested with synthetic data, as well as in-vitro, ex-vivo, and in-vivo recordings (Table 3). This underscores AstroCAST's ability to harness sophisticated computational techniques, making significant strides in the study of astrocytes and offering new opportunities for neuroscience research.

Figure 1. Schematic representation of the astroCaST toolkit comprising three major analytical phases for processing and analyzing astrocytic activity data. (1) In the preprocessing phase, video input is converted into an HDF5 file format, with optional stages for motion artifact correction, noise reduction, and background subtraction. (2) The event extraction phase involves the identification of astrocytic events, generating corresponding event traces and metadata. Events can subsequently undergo optional filtering, frame extension, and signal normalization. (3) The analysis of experiments is two-fold. For classification and regression, users may select from various feature vector embeddings including Feature Extraction (FExt), Convolutional Neural Network (CNN), and Recurrent Neural Network (RNN). For hierarchical clustering, the toolkit offers Pearson correlation and Dynamic Time Warping (DTW) for the computation of event similarity, represented as a distance matrix. Dashed outlines denote optional steps within the process. The presence of an open eye icon signifies supervised steps, while a closed eye indicates unsupervised steps. A line terminating in a circle denotes need for additional user input.

2 Methods

2.1 Requirements

It is imperative that the recordings specifically capture astrocytes labeled with calcium sensors, either through transgenic models or viral vectors. While the use of calcium dyes is possible, their application may not guarantee the exclusive detection of astrocytic events. The initial step of the astroCaST toolkit involves preparing the video recordings for analysis. Our protocol supports a range of file formats, including .avi, .h5, .tiff (either single or multipage), and .czi, accommodating videos with interleaved recording paradigms. To ensure reliable results, recordings should be captured at a frequency of at least 8 Hz for One-photon (1P) imaging and 1–2 Hz for Two-photon (2P) imaging. This frequency selection is crucial for capturing events of the expected duration effectively.

Regarding hardware requirements, our protocol is adaptable to a variety of configurations, from personal computers to cloud infrastructures, with certain modules benefiting from GPU acceleration. At a minimum, we recommend using hardware equipped with at least 1.6 GHz quad-core processor and 16 GB of RAM to efficiently handle the data analysis. In cases where the available memory is a bottleneck, astroCaST offers a lazy parameter to only load relevant sections of the data into memory. While this increases processing time, depending on the speed of the storage medium, it allows users to analyze datasets that would usually exceed the capabilities of their hardware.
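As a rough illustration of why memory can become the bottleneck, the following sketch estimates the uncompressed size of a recording and compares it with the available RAM. The function names and the `safety_factor` (an assumed number of working copies held in memory) are hypothetical and not part of astroCaST; the video dimensions are only an example.

```python
def video_size_gb(frames: int, height: int, width: int, bytes_per_pixel: int) -> float:
    """Return the uncompressed size of a video in gigabytes (1 GB = 1e9 bytes)."""
    return frames * height * width * bytes_per_pixel / 1e9


def fits_in_memory(size_gb: float, ram_gb: float, safety_factor: float = 2.0) -> bool:
    """Assumption: processing needs roughly `safety_factor` copies of the data."""
    return size_gb * safety_factor <= ram_gb


# Example: 5,000 frames of 1,200 x 1,200 pixels stored as 32-bit floats.
size = video_size_gb(frames=5000, height=1200, width=1200, bytes_per_pixel=4)
print(f"uncompressed size: {size:.1f} GB")
print("fits in 16 GB of RAM:", fits_in_memory(size, ram_gb=16))
```

A recording of this size would not fit into the recommended minimum of 16 GB, which is the situation in which loading only the relevant sections of the data becomes useful.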

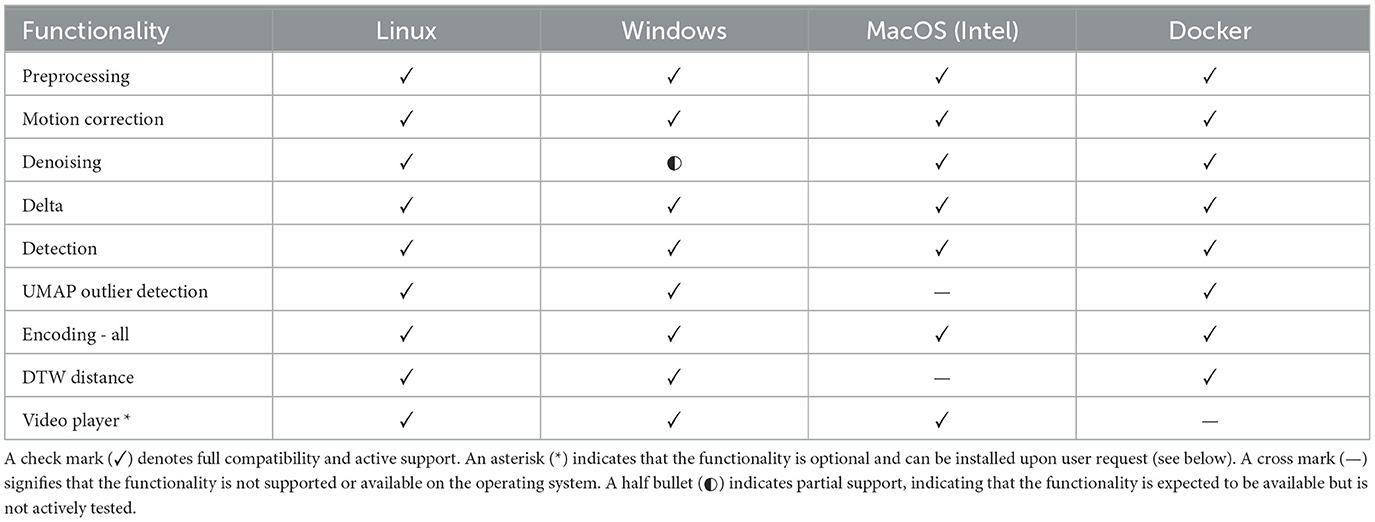

On the software side, we advocate for the use of the Linux operating system, specifically Ubuntu or AlmaLinux distributions, for optimal performance. However, Windows or macOS can be used with some functional limitations (Table 2). Be advised that the M1 and M2 Mac processors are currently not supported. Essential software includes Python version 3.9, Anaconda or Miniconda, and git for version control. Furthermore, we recommend the use of ImageJ or an equivalent image viewer for analyzing the output visually. This comprehensive approach ensures that researchers can accurately extract and analyze astrocytic calcium signals, paving the way for further understanding of their physiological roles.

Table 2. Availability of different functionalities of astroCaST across operating systems.

2.2 Installation

To run the software, astroCaST and its dependencies must be installed. There are multiple options to do this depending on how much control users would like to have over the installation. Of note, the following instructions install astroCaST with its full functionality. If that is not desired or possible, remove the -E all or [all] flags.

2.2.1 Creating a conda environment (optional)

While not strictly necessary, we highly recommend creating a fresh Anaconda environment. This prevents common installation errors and conflicts with existing environments.

> conda create -n astro python=3.9 poetry

> conda activate astro

2.2.2 Install from source (recommended)

> cd "/path/to/directory/"

> git clone git@github.com:janreising/astroCAST.git

> cd astroCAST

> poetry install -E all

2.2.3 Installation with pip (easiest)

# install core features

> pip install astrocast

# install with all features

> pip install astrocast[all]

2.2.4 Container installation (last resort)

To install docker and create an account, follow the instructions on the docker webpage: https://docs.docker.com/engine/install/

> docker pull anacgon/astrocast:latest

> docker image ls

> docker run -v /path/to/your/data:/home/data -it -p 8888:8888 anacgon/astrocast:latest

# Optionally, start jupyter notebook from inside the docker container:

> jupyter-lab --allow-root --no-browser --port=8888 --ip="*"

2.2.5 Test installation

Both commands should run without any errors.

> python -c "import astrocast"

> astrocast --help

2.3 Animal models

Our research utilized two transgenic mouse strains whose background was changed to outbred CD-1 mice supplied by Charles River Laboratories, located in Germany. The initial transgenic lines were Aldh1l1-Cre (JAX Stock No. 029655: B6N.FVB-Tg(Aldh1l1-cre/ERT2)1Khakh/J) and Ai148D mice (JAX Stock No. 030328: B6.Cg-Igs7tm148.1(tetO-GCaMP6f,CAG-tTA2)Hze/J). These strains were housed in a controlled environment featuring a cyclical light-dark period lasting 12 hours each. Unlimited access to food and water was ensured. These mice were housed at the Department of Comparative Medicine at Karolinska Institutet in Stockholm, Sweden. Our experimental protocols were in strict compliance with the European Community Guidelines and received authorization from the Stockholm Animal Research Ethics Committee (approval no. 15819-2017). Acute slices from the pre-Bötzinger complex were prepared according to the protocol previously established by Reising et al. (2022).

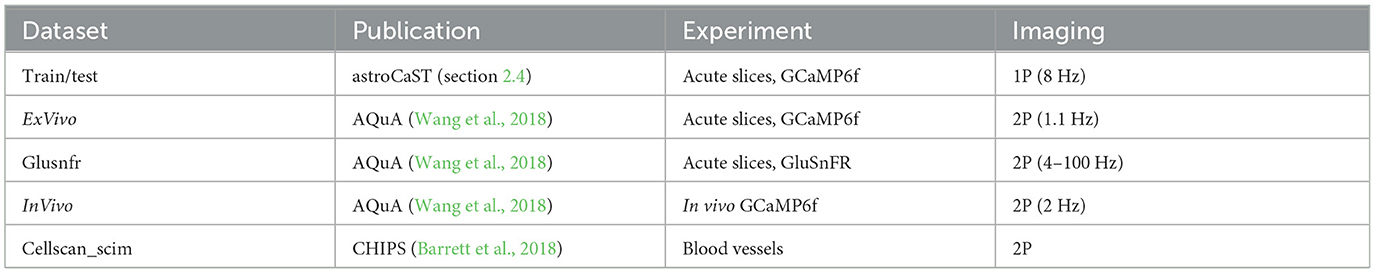

2.4 Datasets used during development

AstroCAST was developed using publicly available datasets and our own data. The data represents astrocytes studied ex-vivo, in-vivo and in acute slices captured with 1P or 2P (Table 3). To ensure transparency and provide a practical starting point, we offer a collection of tested, default settings within a YAML file. This file, designed to represent a first-approach configuration, is accessible in our GitHub repository. Additionally, we provide the datasets used in this manuscript, as well as pretrained models. A curated list of pretrained models is accessible on our GitHub page under denoiser models, and a comprehensive collection of models can be downloaded via astroCaST. The file name of the model summarizes the model and training parameters.

# download public and custom datasets

> astrocast download-datasets "/path/to/download/directory"

# download pretrained models

> astrocast download-models "/path/to/download/directory"

Table 3. Overview of datasets employed in analysis.

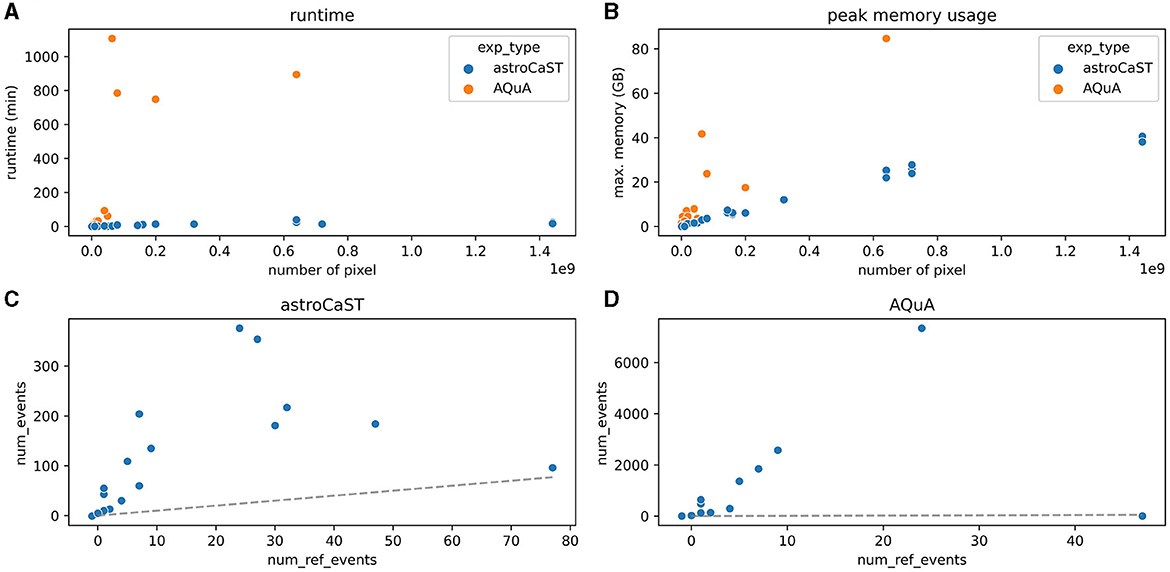

2.5 Benchmarking of astroCaST and AQuA

A novel synthetic video dataset was designed to evaluate the performance of both astroCaST and AQuA. This dataset was specifically developed following the implementation of astroCaST, and was not used for the optimization of astroCaST. The choice of a synthetic dataset is justified due to the lack of suitable publicly accessible datasets with verified ground truth labels. Both algorithms were run with their default settings, astroCaST with settings from config.yaml and AQuA with preset 2 (ex-vivo), to ensure unbiased performance comparison. The computational analysis was conducted on the Rackham HPC cluster at UPPMAX, Uppsala University, employing 12 CPU cores (Intel Xeon V4), 76.8 GB of RAM, and 20 hours of allocated wall time. A detection was considered failed if it surpassed either the memory capacity or time constraints. Within these parameters, AQuA successfully processed video dimensions up to 5,000 × 800 × 800 pixels, whereas astroCaST managed videos up to 5,000 × 1,200 × 1,200 pixels. Events were filtered to lengths between 5 and 1,000 frames to eliminate evident anomalies.

3 Results

3.1 Using astroCaST

AstroCAST is designed to guide users through the entire process of astrocyte calcium analysis, from the initial raw data to in-depth analysis. It is a versatile toolkit that allows for a customizable workflow as users have the flexibility to omit certain steps or integrate additional analyses as their research demands. AstroCAST serves as a robust companion for computational exploration in neuroscience.

Central to the toolkit's utility is the astroCaST Python package, which offers direct access to all functionalities of the toolkit. This enables users to incorporate astroCaST seamlessly into their existing workflows or to automate processes within their custom scripts. The toolkit is structured into three major blocks: Preprocessing, Encoding, and Exploratory Analysis.

In the Preprocessing block, users can use a command line interface (CLI), accessible via commands such as astrocast --help directly in the terminal. The CLI facilitates the use of a predefined configuration file to streamline the preprocessing tasks. Moreover, an intuitive “Argument Explorer” is incorporated to assist users in quickly testing different parameters, enabling export of the resultant configuration settings. For a hands-on introduction to this stage, we have provided a Jupyter notebook, allowing users to engage with the protocol interactively.

The Encoding and Analysis block adapts to the specific needs of individual experiments, catering to diverse research objectives such as comparing drug treatments, model systems, correlating activities with stimuli, or monitoring changes over time. This phase of the pipeline is supported by a dedicated GUI, which offers an interactive environment for analysis and enhanced data visualization capabilities.

Acknowledging the diversity in data analysis approaches within astroCaST, subsequent sections will provide concise guidance to interact with astroCaST and ensure that users can harness its full potential.

3.1.1 Jupyter notebooks

Jupyter notebooks can be used to interactively run the analysis and visualize the results. If you have cloned the repository (Section 2.2.2), an example notebook is included to follow along with the steps described here.

> cd /path/to/astroCAST/notebooks/examples/

> jupyter lab

# on MacOS the command might be

> jupyter-notebook

A browser window will open which displays the available examples or can be used to create custom notebooks.

3.1.2 The command line interface (CLI)

The CLI is a useful selection of commands that enables users to perform common analysis steps directly from the terminal. Especially in the context of high-performance computing this way of interacting with astroCaST is convenient. All parameters can either be provided manually in the terminal or through a configuration YAML file. We recommend using a configuration file when preprocessing many videos to ensure that the results can be compared. The configuration file must adhere to the YAML format and can contain settings for more than one command. A default configuration file can be found in the GitHub repository.

# get list of all available commands

> astrocast --help

# get help for individual commands

> astrocast COMMAND --help

# use manual settings

> astrocast COMMAND --PARAM-1 VALUE1 [...]

# use a configuration file

> astrocast --config "/path/to/config" COMMAND [...]

3.1.3 The graphical user interface (GUI)

AstroCAST implements two GUIs, based on shiny (Chang et al., 2024), to simplify the selection of suitable parameters for the analysis. The commands below will either automatically open a browser page to the interface or provide a link that can be copied to a browser.

# Identify correct settings for the event detection

> astrocast explorer --input-path /path/to/file --h5-loc /dataset/name

# Explore detected events, including filtering, embedding and experiments

> astrocast exploratory-analysis --input-path /path/to/roi/ --h5-loc /dataset/name

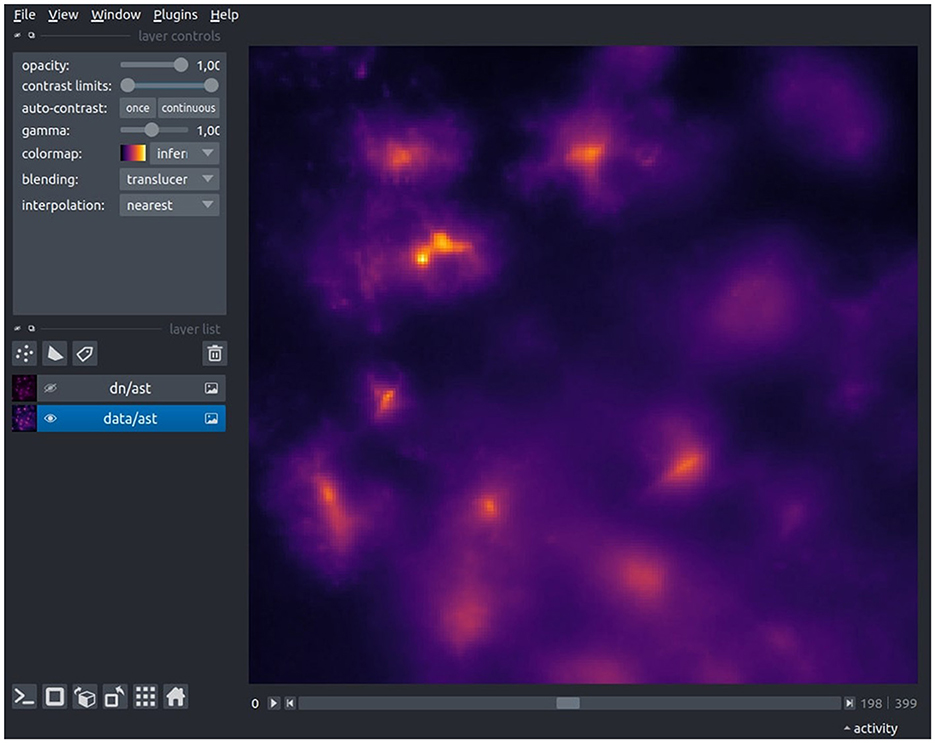

3.1.4 The data viewer

For convenience, astroCaST includes a data viewer, based on napari (Ahlers et al., 2022), which allows for fast and memory-efficient visualization of data. Users can default to their image viewer of choice, like ImageJ, if they choose to do so.

# view single dataset

> astrocast view-data --lazy False /path/to/file /dataset/name

# view multiple datasets

> astrocast view-data /path/to/file /dataset/one /dataset/two

# view results of detection

> astrocast view-detection-results --video-path /path/to/video --loc /dataset/name /path/to/dir/name.roi

3.2 Preprocessing

3.2.1 File conversion

AstroCAST is designed to handle a wide range of common input formats, such as .tiff and .czi files, accommodating the diverse nature of imaging data in neuroscience research. One of the key features of astroCaST is its ability to process interleaved datasets. By specifying the --channels option, users can automatically split datasets based on imaging channels, which is particularly useful for experiments involving multiple channels interleaved, such as alternating wavelengths.
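To make the idea of de-interleaving concrete, the sketch below splits a frame stack by channel with NumPy slicing. It assumes that frame i belongs to channel i modulo the number of channels; `split_channels` is a hypothetical helper for illustration and not the astroCaST implementation.

```python
import numpy as np


def split_channels(video: np.ndarray, n_channels: int) -> list:
    """Split an interleaved (frames, height, width) stack into one stack per channel.

    Assumption: frame i belongs to channel i % n_channels.
    """
    if video.shape[0] % n_channels != 0:
        raise ValueError("number of frames is not a multiple of n_channels")
    return [video[c::n_channels] for c in range(n_channels)]


# Toy recording: 6 frames of 2 x 2 pixels, frame i filled with the value i.
video = np.stack([np.full((2, 2), i) for i in range(6)])
ch0, ch1 = split_channels(video, n_channels=2)
print(ch0[:, 0, 0])  # values of frames 0, 2, 4
print(ch1[:, 0, 0])  # values of frames 1, 3, 5
```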

Moreover, astroCaST supports the subtraction of a static background from the video recordings using the --subtract-background option. This feature allows for the provision of a background image or value, which is then subtracted from the entire video. Subtracting, for example, the dark noise of the camera can significantly enhance the quality of the analysis.
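A minimal sketch of static background subtraction is shown below. It accepts either a single value or a background image and converts to floating point first, so that unsigned integer data cannot wrap around. Clipping negative results to zero is a choice made for this example only; the helper name and the dark-frame values are hypothetical.

```python
import numpy as np


def subtract_static_background(video: np.ndarray, background) -> np.ndarray:
    """Subtract a scalar or a (height, width) image from every frame.

    The result is float32 and clipped at zero (an assumption of this sketch).
    """
    result = video.astype(np.float32) - np.asarray(background, dtype=np.float32)
    return np.clip(result, 0, None)


video = np.array([[[100, 105], [98, 250]]], dtype=np.uint16)  # one 2 x 2 frame
dark = np.full((2, 2), 100, dtype=np.uint16)  # hypothetical camera dark frame
corrected = subtract_static_background(video, dark)
print(corrected[0])
```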

For optimal processing and data management, it is recommended to convert files to the .h5 file format. The .h5 format benefits from smart chunking, enhancing the efficiency of data retrieval and storage. The output configuration can be finely tuned with options such as --h5-loc for specifying the dataset location within the .h5 file, --compression for selecting a compression algorithm (e.g., “gzip”), and --dtype for adjusting the data type if the input differs from the intended storage format.

Chunking is a critical aspect of data management in astroCaST, allowing for the video to be divided into discrete segments for individual saving and compression. The --chunks option enables users to define the chunk size, balancing between retrieval speed and storage efficiency. An appropriately sized chunk can significantly improve processing speed without compromising on efficiency. In cases where the optimal chunk size is uncertain, setting --chunks to None instructs astroCaST to automatically determine a suitable chunk size.
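The trade-off behind the chunk size can be illustrated by counting how many chunks must be read for two typical access patterns: the time trace of a single pixel and a single full frame. The sketch below is plain arithmetic on hypothetical chunk shapes and does not reflect how astroCaST selects chunks automatically.

```python
def chunks_touched(shape, chunks, selection):
    """Count how many chunks overlap a selection.

    shape, chunks: (frames, height, width) of the dataset and of one chunk.
    selection: one (start, stop) pair per axis, stop exclusive.
    """
    count = 1
    for (start, stop), size, chunk in zip(selection, shape, chunks):
        if not 0 <= start < stop <= size:
            raise ValueError("selection outside dataset")
        first = start // chunk        # index of the first chunk that is hit
        last = (stop - 1) // chunk    # index of the last chunk that is hit
        count *= last - first + 1
    return count


shape = (5000, 1200, 1200)
trace = ((0, 5000), (600, 601), (600, 601))  # one pixel over all frames
frame = ((0, 1), (0, 1200), (0, 1200))       # one full frame

for chunks in [(1, 1200, 1200), (100, 100, 100), (5000, 10, 10)]:
    print(chunks, chunks_touched(shape, chunks, trace), chunks_touched(shape, chunks, frame))
```

Frame-shaped chunks favor viewing single frames, whereas chunks that are long in time favor reading pixel traces. An intermediate shape keeps both access patterns affordable.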

Lastly, the --output-path option directs astroCaST where to save the processed output. While the .h5 format is recommended for its efficiency, astroCaST also supports saving in formats such as .tiff, .tdb, and .avi, providing flexibility to accommodate various research needs and downstream analysis requirements.

# (optional) browse the available flags

> astrocast convert-input --help

# the --config flag can be omitted to use default settings

> astrocast --config "/path/to/config" convert-input "/path/to/file/or/folder"

We recommend verifying the conversion through a quick visual inspection using the built-in data viewer (Figure 2) or an imaging software of your choice (e.g., ImageJ). During this check, ensure that the pixel values are within the expected range (int or float), the image dimensions (width and height) are as anticipated, all frames have been successfully loaded, de-interleaving (if applicable) has been executed correctly, and the dataset name is accurate.

Figure 2. The Astrocast Viewer interface, showcasing a video file of astrocytic calcium fluorescence captured within the inspiratory rhythm generator (preBötC) in the medulla. The original recording, acquired at a 20X magnification with a resolution of 1,200 × 1,200 pixels, was performed at a temporal resolution of 8 Hz. The TIFF-format video was subsequently converted to the HDF5 file format, with spatial downscaling by a factor of four in the XY plane, as a preparatory step for further preprocessing.

> astrocast view-data --h5-loc "dataset/name" "/path/to/your/output/file"

3.2.2 Motion correction (optional)

During imaging, samples will drift or warp, which means that the location of the astrocytes might change in the Field of View (FOV). Depending on the amount of movement, this can have detrimental consequences for the analysis. The motion correction module of the CaImAn package (Giovannucci et al., 2019) is used in the astroCaST protocol to correct these artifacts. We refer users to the jnormcorre documentation for detailed information, but explain the commonly used parameters here.

A cornerstone of this feature is the ability to set the maximum allowed rigid shift through the --max-shifts parameter. This parameter is critical for accommodating sample motion, ensuring that shifts do not exceed half of the image dimensions, thus balancing between correction effectiveness and computational efficiency. The precision of motion correction is further refined using the --max-deviation-rigid option, which limits the deviation of each image patch from the frame's overall rigid shift. This ensures uniformity across the corrected image, enhancing the accuracy of the motion correction process. For iterative refinement of the motion correction, astroCaST employs the --niter-rig parameter, allowing up to three iterations by default. This iterative approach enables a more accurate adjustment to the motion correction algorithm, improving the quality of the processed images. To address non-uniform motion across the field of view, astroCaST offers the piecewise-rigid motion correction option, activated by setting --pw-rigid to True. This method provides a more nuanced correction by considering different motion patterns within different image segments, leading to superior correction outcomes. Furthermore, the --gsig-filt parameter anticipates the half-size of cells in pixels. This information aids in the filtering process, crucial for identifying and correcting motion artifacts accurately.

By carefully adjusting these parameters, researchers can significantly enhance the quality of their imaging data, ensuring that motion artifacts are minimized and that the resultant data is of the highest possible accuracy for subsequent analysis.
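To illustrate what a bounded rigid shift means in practice, the sketch below estimates the integer displacement between a reference image and a moved frame using FFT-based cross-correlation, restricted to a maximum shift. This is a simplified stand-in written for this guide, not the algorithm implemented in CaImAn or jnormcorre; it handles neither sub-pixel nor piecewise-rigid motion, and `estimate_rigid_shift` is a hypothetical name.

```python
import numpy as np


def estimate_rigid_shift(reference, frame, max_shift):
    """Estimate the integer (dy, dx) shift that aligns `frame` to `reference`.

    Only shifts with an absolute value of at most `max_shift` are considered.
    """
    cy, cx = reference.shape[0] // 2, reference.shape[1] // 2
    if max_shift > min(cy, cx):
        raise ValueError("max_shift must not exceed half of the image dimensions")
    ref = reference - reference.mean()
    mov = frame - frame.mean()
    # Circular cross-correlation computed in the Fourier domain.
    corr = np.fft.ifft2(np.fft.fft2(ref) * np.conj(np.fft.fft2(mov))).real
    corr = np.fft.fftshift(corr)  # the zero-shift term moves to index (cy, cx)
    window = corr[cy - max_shift: cy + max_shift + 1, cx - max_shift: cx + max_shift + 1]
    iy, ix = np.unravel_index(np.argmax(window), window.shape)
    return int(iy - max_shift), int(ix - max_shift)


rng = np.random.default_rng(0)
reference = rng.random((64, 64))
moved = np.roll(reference, shift=(3, -5), axis=(0, 1))  # simulated drift
shift = estimate_rigid_shift(reference, moved, max_shift=10)
print(shift)  # (-3, 5): the correction that undoes the simulated drift
aligned = np.roll(moved, shift=shift, axis=(0, 1))
```

Restricting the search window keeps the estimate from locking onto spurious correlation peaks at implausibly large displacements.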

# the --config flag can be omitted to use default settings

> astrocast --config "/path/to/config" motion-correction --h5-loc "dataset/name" "/path/to/file"

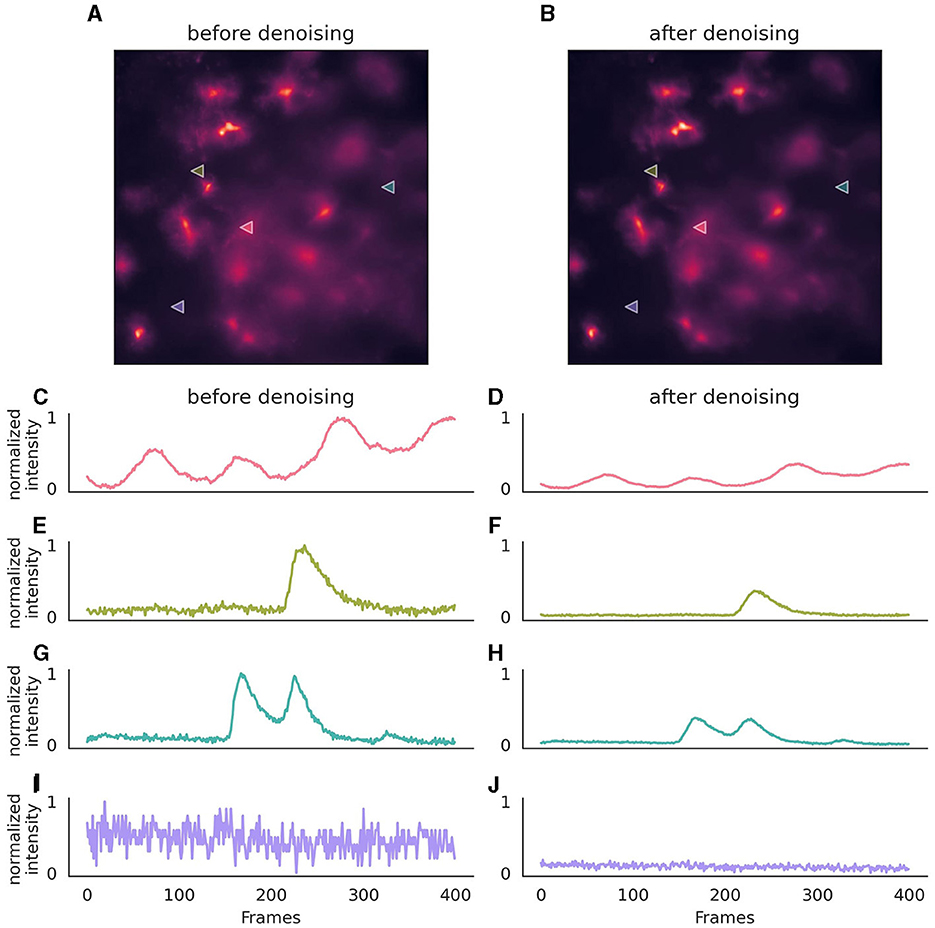

3.2.3 Denoising (optional)

Denoising is an optional but often beneficial preprocessing step in image analysis. The denoising module is based on the architecture suggested for DeepInterpolation (Lecoq et al., 2021) and employs a Convolutional Neural Network (CNN) designed to clean a target frame. The CNN uses adjacent frames as a reference without exposing it to the target frame itself. This approach enables the network to interpolate the desired signal from the contextual frames. Since the noise is stochastic, the network inherently learns to disregard it, effectively isolating and enhancing the signal in the process. We have extended the original approach to support videos of varying dimensions and facilitate straightforward retraining protocols.

Training the denoising model is typically a singular task; once the model is trained, it can be applied to multiple datasets with no further adjustments. The duration of this initial training varies from 1 to 12 h, contingent on data intricacy and available computational power. Subsequent application of the model to new data, known as inference, is considerably more expedient, usually requiring minutes for each file. For convenience, we offer a suite of pre-trained models tailored to different imaging modalities (Section 2.4).

3.2.3.1 Training (optional)

To ensure the robustness and reliability of the denoising process, it is crucial to carefully select the training dataset for the denoiser. The approach to training should aim to minimize the model's exposure to the data it will denoise, which can enhance the reliability of denoising and prevent overfitting.

One approach is to train the model on a separate data set that will not be used in subsequent analysis. This method is advantageous because it allows the model to learn from a diverse set of data, ideally encompassing all experimental conditions expected in the study. However, it requires having a separate dataset that is representative of the various modalities, which may not always be available.

For smaller datasets or when data is limited, a transformation-based approach can be applied. Training on all experimental data but using rotated frames (90°, 180°, and 270°) ensures that the denoiser is exposed to the inherent noise and variability in the data while mitigating direct exposure to the frames being denoised. This approach is particularly resource-efficient but may result in a less robust model due to the potential predictability of rotated frames.

Each of these methods has its merits and should be chosen based on the specific context and availability of data within a given study.
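The transformation-based approach can be sketched with NumPy as follows. The example only shows how rotated copies of a frame stack could be generated; how they are fed to the training routine is not covered, and `rotated_copies` is a hypothetical helper rather than an astroCaST function.

```python
import numpy as np


def rotated_copies(frames: np.ndarray) -> dict:
    """Return the stack rotated by 90, 180 and 270 degrees in the image plane.

    `frames` has shape (n_frames, height, width); rotation acts on the last
    two axes, so the temporal order of the frames is unchanged.
    """
    return {90 * k: np.rot90(frames, k=k, axes=(1, 2)) for k in (1, 2, 3)}


frames = np.arange(2 * 2 * 3).reshape(2, 2, 3)  # two frames of 2 x 3 pixels
augmented = rotated_copies(frames)
for angle, stack in augmented.items():
    print(angle, stack.shape)
```

Note that for non-square frames the 90° and 270° rotations swap height and width, which matters if the network expects a fixed input size.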

# explore the possible parameters

> astrocast train-denoiser --help

# train model from config file

> astrocast --config config.yaml train-denoiser

The intricacies of training a custom model are beyond this protocol, and we refer to the original DeepInterpolation publication (Lecoq et al., 2021) and our example notebook for users interested in training their own model.

3.2.3.2 Denoising data

Once the neural network has been trained for denoising, it can be employed on new data using the parameters that were set during its training (Figure 3). If utilizing a provided pre-trained model, these parameters are inferred from the model file.

Figure 3. Effect of denoising on astrocytic calcium imaging data. (A) A single 256 × 256 pixel frame prior to denoising, where background noise is evident. (B) The same frame following denoising with enhanced clarity. (C, E, G, I) Pixel intensity over 400 frames (50s) before the application of the denoising algorithm, showcasing the original signal variation, after normalization. (D, F, H, J) Corresponding traces after denoising, illustrating a stabilized intensity profile, with preserved signal characteristics. The same normalization parameters were applied to both original and denoised traces to ensure comparability of noise levels. Location of pixels are indicated with triangles in (A, B). The denoising algorithm was executed on a (128, 128) field of view, incorporating a context of five adjacent frames for each target frame, with no gap frames. Parameters for the denoising model included a training period of 50 epochs, a learning rate of 0.0001, momentum of 0.9, and a stack of three layers with 64 kernels of size three in the initial layer, omitting batch normalization. During inference, a strategy of a 10-pixel overlap in all directions, complemented by “edge” padding, was employed.

For trivial input parameters, such as the model path and output file location, the following flags are used: --model to specify the model, --output-file to define the output file, --loc-in and --loc-out to designate the input and output data locations within the HDF5 file structure.

Network architecture parameters are crucial for ensuring that the denoising process is compatible with the data's specific attributes. They must exactly match the parameters used during training. These include --input-size to set the dimensions of the input data, --pre-post-frames to determine the number of frames before and after the target frame used during denoising, --gap-frames to optionally skip frames close to the target frame, and --normalize to apply normalization techniques that aid the network in emphasizing important features over noise.
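The interplay of the number of context frames and gap frames can be made explicit by listing which frame indices serve as input for a given target frame. The sketch below assumes that the same number of frames is taken on each side of the target and that frames without full context are skipped; both are assumptions of this example, and `context_frames` is a hypothetical helper.

```python
def context_frames(target: int, pre_post_frames: int, gap_frames: int, n_frames: int):
    """Return indices of the frames used to predict `target`, or None near the edges.

    `pre_post_frames` frames are taken on each side of the target, skipping
    `gap_frames` frames directly adjacent to it. The target is never included.
    """
    first = target - gap_frames - pre_post_frames
    last = target + gap_frames + pre_post_frames
    if first < 0 or last >= n_frames:
        return None  # not enough context at the start or end of the video
    before = list(range(first, target - gap_frames))
    after = list(range(target + gap_frames + 1, last + 1))
    return before + after


print(context_frames(10, pre_post_frames=5, gap_frames=0, n_frames=400))
print(context_frames(10, pre_post_frames=2, gap_frames=1, n_frames=400))
print(context_frames(2, pre_post_frames=5, gap_frames=0, n_frames=400))
```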

Image parameters cater to the post-processing needs of the denoised data. The --rescale parameter is used to reverse the normalization applied during denoising, as subsequent analysis steps often expect the pixel values to be in their original scale. Furthermore, --padding adjusts the input data size to match the network's expected input dimensions, ensuring that no data is lost or distorted during the denoising process.

While denoising imaging data can reduce noise, it is optional and careful consideration of research needs beforehand ensures that important biological signals aren't inadvertently removed. It may not suit analyses of fast transient signals (<300 ms) as it might obscure crucial biological events (Héja et al., 2021; Cho et al., 2022; Georgiou et al., 2022). Notably, increasing acquisition speed can help preserve rapid events, as it provides the denoising model with more frames to accurately identify rapid changes.

# load a config file with parameters

> astrocast --config config.yaml denoise "/path/to/file"

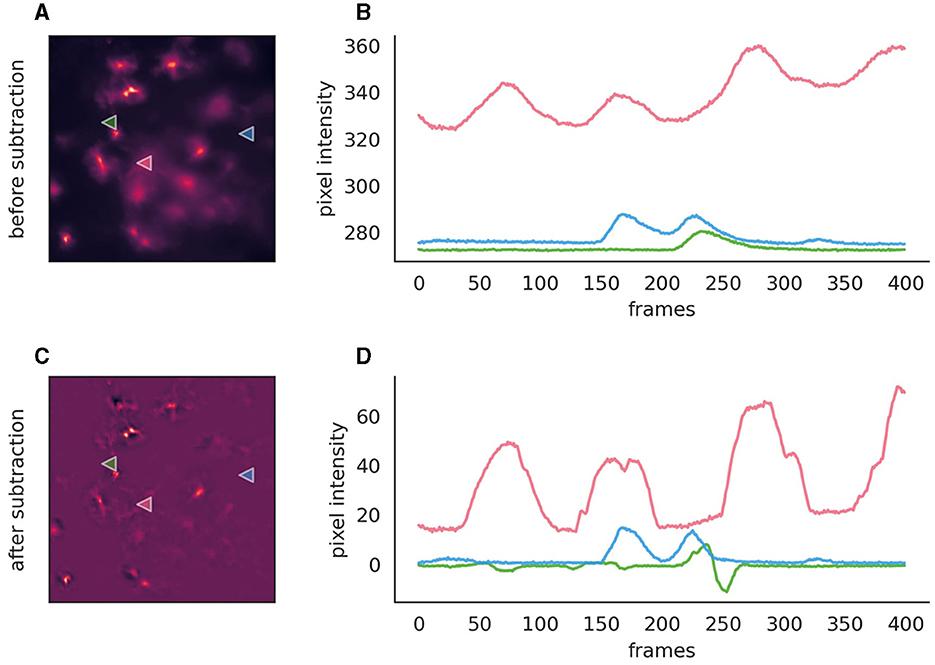

3.2.4 Background subtraction (optional)

Background subtraction, while optional, can significantly enhance event detection in fluorescence imaging by mitigating issues related to bright backgrounds, uneven lighting, or pronounced bleaching effects (Figure 4). AstroCAST leverages Radial Basis Function (RBF) interpolation for background approximation, ensuring accurate background modeling even in scenarios characterized by considerable signal fluctuations or missing data segments.

Figure 4. Comparison before and after the application of background subtraction to fluorescence imaging data. (A, C) Fluorescence images before and after background subtraction, respectively, illustrating the removal of extraneous noise. After subtraction, the background is close to zero and exhibits overall lower noise levels. (B, D) Intensity traces corresponding to the marked points on the images are plotted over time (400 frames, 50s). These traces highlight the efficacy of background subtraction, with the post-subtraction traces approaching zero on the y-axis, thereby indicating a substantial reduction of background, while signal amplitude is conserved. However, the signal shapes, especially in the red trace, exhibit slight alterations post-subtraction. Moreover, the green trace reveals the inadvertent introduction of spurious events, serving as a cautionary example of how background subtraction can inadvertently affect data integrity if parameters are not judiciously optimized.

To facilitate optimal subtraction outcomes without introducing artifacts such as false positives or negatives, default parameters are provided, but fine-tuning may be necessary. An exploratory Jupyter notebook is available for guidance on how parameter adjustments influence the results.

The process begins with downsizing the video to manage computational load while preserving essential features. Peaks within each pixel's time series are identified and marked as NaN to exclude them from influencing the background model. The RBFInterpolator, implemented in SciPy (Virtanen et al., 2020), then estimates the background by interpolating these NaN values across the XYZ dimensions of the video. After resizing back to its original dimensions, the interpolated background is subtracted.

For initial image preparation, parameters such as --scale-factor for adjusting video resolution, and --blur-sigma and --blur-radius for image blurring, are crucial. These adjustments are preparatory steps aimed at enhancing the effectiveness of the subsequent background subtraction process.

Peak detection is fine-tuned through parameters like --prominence, --wlen for window length, --distance between peaks, --width of peaks, and --rel-height defining the peak's cutoff height. These settings ensure precise identification of significant peaks, contributing to the accuracy of the background modeling.

The RBF interpolator constructs a smooth function from scattered data points by combining radial basis functions centered at data locations with a polynomial term (Fasshauer, 2007), adjusted by coefficients that solve linear equations to fit the data. Interpolation can be tuned with parameters such as --neighbors for the interpolation neighborhood, --rbf-smoothing for interpolation smoothness, --rbf-kernel to choose the kernel type, and --rbf-epsilon and --rbf-degree for kernel adjustment, facilitating a tailored approach to background modeling.
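The masking and interpolation steps can be sketched with SciPy on a small synthetic video. The example below omits the downsizing and blurring stages, uses `scipy.signal.find_peaks` for peak detection as an assumption of this sketch, and picks illustrative parameter values; `mask_peaks` and `estimate_background` are hypothetical helpers and not the astroCaST implementation.

```python
import numpy as np
from scipy.interpolate import RBFInterpolator
from scipy.signal import find_peaks


def mask_peaks(video, prominence=1.0, rel_height=0.9):
    """Return a float copy of `video` with detected peaks set to NaN."""
    masked = video.astype(float)  # astype returns a copy
    _, height, width = video.shape
    for y in range(height):
        for x in range(width):
            _, props = find_peaks(video[:, y, x], prominence=prominence,
                                  width=1, rel_height=rel_height)
            # left_ips/right_ips mark the peak borders at the chosen rel_height
            for left, right in zip(props["left_ips"], props["right_ips"]):
                masked[int(np.floor(left)): int(np.ceil(right)) + 1, y, x] = np.nan
    return masked


def estimate_background(masked, neighbors=30, smoothing=0.0):
    """Fill NaN voxels by RBF interpolation over (frame, y, x) coordinates."""
    known = ~np.isnan(masked)
    rbf = RBFInterpolator(np.argwhere(known).astype(float), masked[known],
                          neighbors=neighbors, smoothing=smoothing)
    background = masked.copy()
    missing = np.argwhere(~known).astype(float)
    if len(missing):
        background[~known] = rbf(missing)
    return background


# Synthetic data: smooth spatial gradient plus one transient event at pixel (2, 2).
t = np.arange(20)
video = np.broadcast_to(10 + 0.1 * np.arange(5), (20, 5, 5)).copy()
video[:, 2, 2] += 5 * np.exp(-0.5 * ((t - 10) / 1.5) ** 2)

masked = mask_peaks(video)
background = estimate_background(masked)
signal = video - background
print(round(float(signal[10, 2, 2]), 2))  # event amplitude after subtraction
```

Because the event frames are excluded before interpolation, the estimated background follows the surrounding baseline instead of the event itself, which is what preserves the event amplitude after subtraction.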

Background subtraction can be executed using either the Delta F (--method 'dF') method, which directly subtracts the background, or the Delta F over F (--method 'dFF') method, which divides the signal by the background post-subtraction. The choice between these methods depends on the specific requirements of the imaging data and the desired outcome of the subtraction process. However, it is important to consider that background subtraction may not be necessary for all datasets and could potentially introduce biases in event detection. Comparative analysis with and without this preprocessing step is recommended to assess its impact accurately (Supplementary material).

> astrocast subtract-background --h5-loc "mc/ch0" --method "dF" "/path/to/file"
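The difference between the two methods can be written out in a few lines of NumPy. The sketch below is illustrative only; the function name subtract_background and the decision to return NaN where the background is exactly zero are assumptions of this example.

```python
import numpy as np


def subtract_background(video, background, method="dF"):
    """Apply Delta F or Delta F over F given an estimated background.

    Hypothetical helper for illustration only.
    """
    video = np.asarray(video, dtype=float)
    background = np.asarray(background, dtype=float)
    delta = video - background
    if method == "dF":
        return delta
    if method == "dFF":
        # Assumption: voxels with zero background yield NaN instead of inf.
        with np.errstate(divide="ignore", invalid="ignore"):
            return np.where(background != 0, delta / background, np.nan)
    raise ValueError(f"unknown method: {method!r}")


signal = np.array([12.0, 10.0, 15.0])
background = np.array([10.0, 10.0, 10.0])
print(subtract_background(signal, background, method="dF"))
print(subtract_background(signal, background, method="dFF"))
```

Delta F keeps the original intensity units, whereas Delta F over F expresses changes relative to the local baseline, which makes regions with different resting brightness more comparable.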

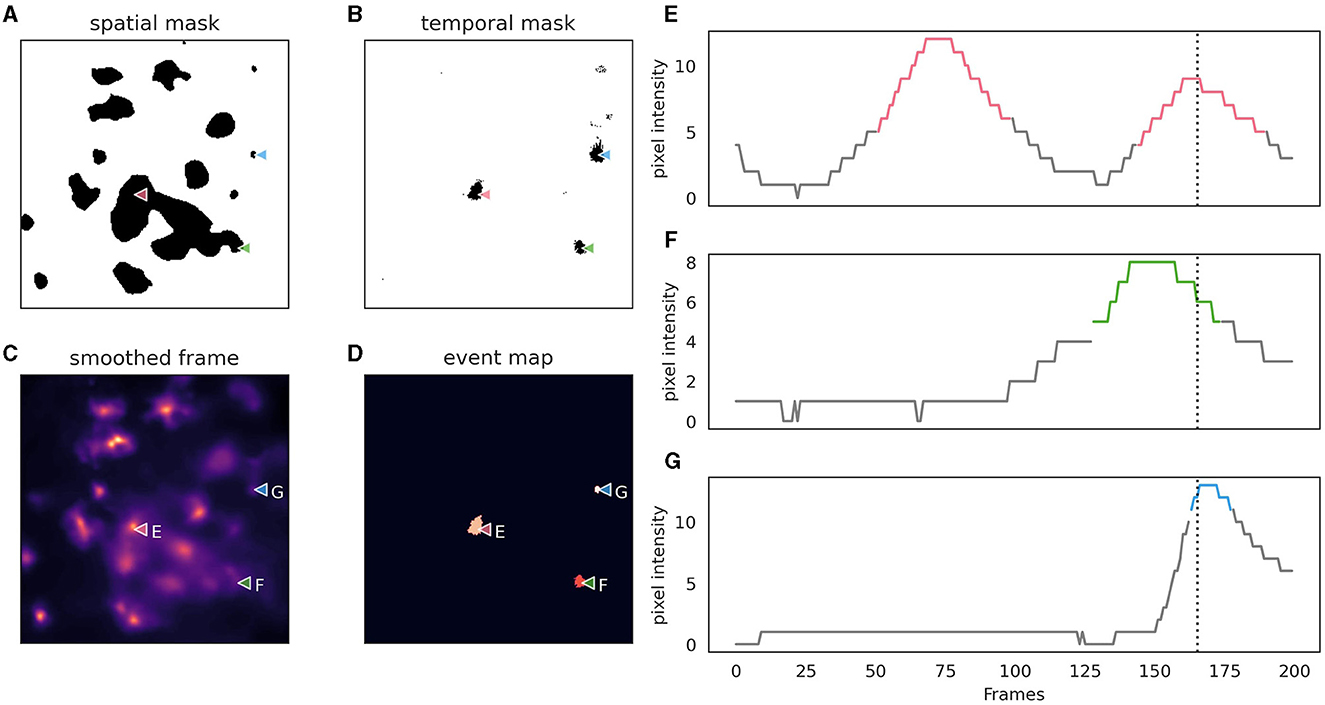

3.3 Event Detection

AstroCaST employs an event-centric approach to event detection, adapted from the AQuA package (Wang et al., 2018), and leverages the capabilities of scikit-image and dask for enhanced processing and analysis (van der Walt et al., 2014; Dask Development Team, 2016). Building on the AQuA algorithm, astroCaST incorporates both spatial and temporal thresholding techniques to identify and extract events effectively (Figure 5). Spatial thresholding is executed based on either a single frame or a small volume, facilitating the identification of significant patterns or features in space. Concurrently, temporal thresholding is implemented by scrutinizing individual pixels for peaks across the time dimension, essentially tracking fluorescence changes over time.

Figure 5. Depiction of astrocytic event detection employed by astroCaST using spatial and temporal thresholding. (A) Binary mask of frame after application of spatial threshold (min_ratio 1). (B) Binary mask of frame after application of temporal threshold (prominence 2, width 3, rel_height 0.9). (C) Frame used for thresholding after motion correction, denoising and smoothing. (D) Events detected as identified by both spatial and temporal thresholding. (E–G) Pixel intensity analysis for selected pixels [as indicated in (A–D)], with active frames color-coded in the plots. The frame shown in (A–D) is indicated as a vertical dotted line.

Following the thresholding process, a binary mask is generated, which is used to label connected pixels, considering the full 3D volume of the video. This allows for the integration of different frames in the z-dimension to constitute a single event in a 3D context, ensuring a comprehensive analysis of the events captured in the video. Upon successful event detection, our protocol generates a folder with a .roi extension, which houses all files related to the detected events, offering an organized and accessible repository for the extracted data.
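The labeling of connected pixels across frames can be illustrated with scipy.ndimage.label, used here as a stand-in for whichever labeling routine astroCaST calls internally. The function name label_events and the face-connected neighborhood are assumptions of this sketch.

```python
import numpy as np
from scipy import ndimage


def label_events(active):
    """Label connected active voxels in a (frames, height, width) mask.

    Hypothetical helper for illustration only.
    """
    active = np.asarray(active, dtype=bool)
    # Assumption: voxels are connected if they share a face in space or time.
    structure = ndimage.generate_binary_structure(3, 1)
    labels, n_events = ndimage.label(active, structure=structure)
    return labels, n_events


# Toy mask: one pixel active over three frames, plus a separate 2 x 2 patch.
active = np.zeros((4, 5, 5), dtype=bool)
active[0:3, 1, 1] = True
active[2, 3:5, 3:5] = True

labels, n_events = label_events(active)
# First and last frame occupied by each event.
spans = [(sl[0].start, sl[0].stop - 1) for sl in ndimage.find_objects(labels)]
print(n_events, spans)
```

Because the first axis is treated like a spatial axis, activity at the same location in consecutive frames receives one label and is therefore handled as a single event.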

The event detection step is the most critical aspect of astroCaST and is sensitive to the choice of parameters. We have therefore implemented an interactive interface to explore the performance of each step during event detection given the relevant options. AstroCaST will print a link to the browser-based interface. We recommend selecting a short range of frames in the video file to keep processing time short during exploration.

> astrocast explorer --input-path "/path/to/file" --h5-loc "/dataset/name"

Once users are satisfied with the chosen parameters, these can be exported to a YAML config file that can be provided to the astrocast CLI.

3.3.1 Detection module description

The detection module in astroCaST applies a comprehensive approach to identify astrocytic events by smoothing, thresholding, and applying morphological operations on fluorescence imaging data. This section outlines the key steps and parameters used in the process.

3.3.1.1 Smoothing

AstroCaST incorporates a Gaussian smoothing kernel to enhance event detection while preserving spatial features. Adjusting the --sigma and --radius parameters allows for the refinement of the smoothing effect, ensuring that events are emphasized without compromising the integrity of spatial characteristics. The smoothed data then serve as the basis for subsequent detection steps, although users can opt out of smoothing if desired.
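To give a feel for how sigma and radius shape the kernel, the following sketch applies scipy.ndimage.gaussian_filter to a single bright voxel. It is an assumption of this example that smoothing acts along all three axes and that the radius is enforced through SciPy's truncate argument; the helper name smooth_video is hypothetical.

```python
import numpy as np
from scipy import ndimage


def smooth_video(video, sigma=1.0, radius=2):
    """Gaussian-smooth a (frames, height, width) array.

    Hypothetical helper for illustration only.
    """
    video = np.asarray(video, dtype=float)
    # SciPy cuts the kernel at truncate * sigma, so this ratio limits the
    # kernel to `radius` samples on each side of the center.
    return ndimage.gaussian_filter(video, sigma=sigma, truncate=radius / sigma)


# A single bright voxel shows the footprint of the kernel.
impulse = np.zeros((9, 9, 9))
impulse[4, 4, 4] = 1.0
smoothed = smooth_video(impulse, sigma=1.0, radius=2)
print("peak after smoothing:", float(smoothed.max()))
print("value three pixels away:", float(smoothed[4, 4, 7]))
```

A larger sigma spreads each event over more neighboring voxels, while the radius caps how far that spread can reach.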

3.3.1.2 Identifying active pixels

Thresholding in astroCaST is executed in two phases, spatial and temporal, to classify pixels as active with high precision. Spatial thresholding evaluates the entire frame to distinguish active pixels, automatically determining a cutoff value. It incorporates the mean fluorescence ratio of active to inactive pixels to mitigate the incorrect identification of noise as events. The --min-ratio parameter sets the minimum ratio threshold, and the --z-depth parameter allows for the consideration of multiple frames to improve threshold accuracy. Temporal thresholding analyzes the video as a series of 1D time series, identifying peaks with a prominence above a user-defined threshold. Parameters such as --prominence, --width, --rel-height, and --wlen fine-tune the detection of events over time, enhancing the separation of true events from noise. Users can choose to merge or differentiate the active pixels identified by spatial and temporal thresholding, optimizing event detection based on specific dataset characteristics.
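The two thresholding phases can be sketched as follows. Several choices here are assumptions made for illustration and are not taken from the astroCaST source: the automatic cutoff is computed with Otsu's method, a frame is kept only if its active-to-inactive mean ratio reaches min_ratio, and the two masks are combined by intersection. All function names are hypothetical.

```python
import numpy as np
from scipy.signal import find_peaks


def otsu_threshold(values, bins=256):
    """Cutoff that maximizes the between-class variance of a histogram."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    weight_low = np.cumsum(hist)
    weight_high = weight_low[-1] - weight_low
    cumulative = np.cumsum(hist * centers)
    mean_low = cumulative / np.maximum(weight_low, 1)
    mean_high = (cumulative[-1] - cumulative) / np.maximum(weight_high, 1)
    between = weight_low * weight_high * (mean_low - mean_high) ** 2
    return centers[np.argmax(between)]


def spatial_mask(video, min_ratio=1.0):
    """Threshold each frame and keep it only if active pixels are bright enough."""
    mask = np.zeros(video.shape, dtype=bool)
    for t, frame in enumerate(video):
        if frame.min() == frame.max():
            continue  # constant frame, nothing to separate
        active = frame > otsu_threshold(frame.ravel())
        inactive_mean = frame[~active].mean()
        # Assumption: a non-positive inactive mean counts as an unbounded ratio.
        ratio = frame[active].mean() / inactive_mean if inactive_mean > 0 else np.inf
        if ratio >= min_ratio:
            mask[t] = active
    return mask


def temporal_mask(video, prominence=2.0, width=3, rel_height=0.9, wlen=None):
    """Mark the frames covered by a peak in each pixel's time series."""
    mask = np.zeros(video.shape, dtype=bool)
    for y in range(video.shape[1]):
        for x in range(video.shape[2]):
            _, props = find_peaks(video[:, y, x], prominence=prominence,
                                  width=width, rel_height=rel_height, wlen=wlen)
            for left, right in zip(props["left_ips"], props["right_ips"]):
                mask[int(np.floor(left)):int(np.ceil(right)) + 1, y, x] = True
    return mask


# Synthetic video: flat baseline with one transient in a 2 x 2 pixel patch.
frames = np.arange(60)
video = np.ones((60, 6, 6))
video[:, 2:4, 2:4] += 10.0 * np.exp(-((frames - 30) ** 2) / (2 * 4.0 ** 2))[:, None, None]

combined = spatial_mask(video, min_ratio=2.0) & temporal_mask(video)
print("active voxels:", int(combined.sum()))
```

Intersecting the masks is the stricter choice; taking their union instead would retain pixels flagged by either phase, at the cost of admitting more noise.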

3.3.1.3 Morphological operations

To address artifacts resulting from thresholding, such as holes (false negatives) and noise (false positives), astroCaST employs morphological operations. Parameters like --area-threshold and --min-size adjust the maximum hole size to fill and the minimum size of active pixel clusters, respectively. These operations, which also consider connectivity and the --z-depth parameter, refine the detection outcome by smoothing gaps and removing minor artifacts, enhancing the clarity and segmentation of detected events.
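Both operations reduce to measuring connected components, as the sketch below shows with scipy.ndimage. The helper names, the face-connected neighborhood, and the strict size comparisons are assumptions of this example; the routines astroCaST actually calls may treat connectivity and boundary sizes differently.

```python
import numpy as np
from scipy import ndimage


def remove_small_objects(mask, min_size):
    """Drop connected clusters of active pixels smaller than `min_size`."""
    mask = np.asarray(mask, dtype=bool)
    structure = ndimage.generate_binary_structure(mask.ndim, 1)
    labels, _ = ndimage.label(mask, structure=structure)
    sizes = np.bincount(labels.ravel())
    keep = sizes >= min_size
    keep[0] = False  # label 0 is the background
    return keep[labels]


def fill_small_holes(mask, area_threshold):
    """Fill holes smaller than `area_threshold`.

    A hole is treated as a small connected component of the inverted mask.
    """
    mask = np.asarray(mask, dtype=bool)
    return ~remove_small_objects(~mask, area_threshold)


# Toy frame: a 7 x 7 block with a one-pixel hole, plus an isolated noise pixel.
frame = np.zeros((10, 10), dtype=bool)
frame[1:8, 1:8] = True
frame[4, 4] = False
frame[9, 9] = True

cleaned = remove_small_objects(fill_small_holes(frame, area_threshold=5), min_size=4)
print("active pixels before and after:", int(frame.sum()), int(cleaned.sum()))
```

Filling holes before removing small clusters avoids discarding fragments that would have merged into a larger event once the gaps are closed.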

3.3.1.4 Additional options

Additionally, astroCaST provides the option to exclude the video border from active pixel detection (--exclude-border) to mitigate motion correction artifacts. An experimental feature (--split-events) is available for separating incorrectly connected events, further improving the accuracy of event detection.

3.3.2 Evaluating detection quality

AstroCaST offers a streamlined approach for assessing the quality of event detection through its command-line interface.

> astrocast view-detection-results "/path/to/roi"

Researchers can visually inspect the detection outcomes. During this evaluation phase, it is crucial to determine whether the detection process has identified all expected events, thereby minimizing false negatives, and to check that noise has not been misclassified as true events, which would indicate false positives. If the detection results are not satisfactory, revising the event detection parameters or adjusting settings in the preceding background subtraction step (Section 3.2.4) may be necessary to achieve improved accuracy. For a dynamic visualization that illustrates the successful identification of events, we included an example video (Supplementary material).

3.3.3 Comparison of astroCaST and AQuA

We evaluated the performance of astroCaST against the current state-of-the-art astrocytic calcium imaging toolkit, AQuA, utilizing a tailored synthetic dataset. Our findings reveal that astroCaST was approximately ten times faster and used only half as much memory as AQuA (Figures 6A, B). A notable feature of astroCaST is its peak memory usage, which approaches a maximal limit, indicative of its efficient memory management facilitated by lazy data loading techniques. Both toolkits, however, exhibited tendencies to overestimate the number of detected events (Figures 6C, D). Despite this, the estimations made by astroCaST were consistently closer to the true values. These tests were conducted using default parameters for both toolkits to ensure an unbiased comparison. However, adjusting parameters tailored to the dataset can improve the detection accuracy of both toolkits. For example, filtering the detected events from astroCaST using the signal-to-noise ratio column yielded nearly identical detection matches to the generated events.

Figure 6. Performance comparison of astroCaST and AQuA on synthetic calcium imaging datasets. (A, B) Evaluation of computational efficiency for astroCaST and AQuA across synthetic video datasets of varying dimensions (100–5,000 frames, 100 × 100–1,200 × 1,200 pixels). (A) Graphical representation of runtime for each algorithm. (B) Analysis of peak memory consumption during the event detection phase. A run was considered failed if it exceeded available memory (76.8 GB). (C, D) Assessment of the accuracy in detecting astrocytic calcium events by astroCaST (C) and AQuA (D) without initial preprocessing steps. Detected events smaller than 5 and larger than 1,000 frames were excluded for visualization purposes. The dotted line marks the ideal correlation between the synthetic events generated and those identified by the algorithm.

3.4 Embedding

3.4.1 Quality control and standardization

After successful detection of events, users need to check the quality of the result to ensure data integrity for downstream analysis. In astroCaST, this step optionally includes filtering and normalization. AstroCaST also features a dedicated GUI to guide the selection of filter and normalization parameters.

> astrocast exploratory-analysis --input-path "/path/to/roi/" --h5-loc "/dataset/name"

Here, we showcase the steps using the astroCaST Python package directly. Users are directed to our example notebook to follow along. Of note, large datasets might require significant computational time. Hence, we recommend using dynamic disk caching, via the cache_path parameter, to avoid redundant processing.

    from pathlib import Path

    from astrocast.analysis import Events, Video

    # load video data
    video_path = 'path/to/processed/video.h5'
    h5_loc = 'df/ch0'
    lazy = False  # flag to toggle on-demand loading; slower but less memory
    video = Video(data=video_path, loc=h5_loc, lazy=lazy)

    # load event data
    event_path = 'path/to/events.roi'
    cache_path = Path(event_path).joinpath('cache')
    eObj = Events(event_dir=event_path, data=video, cache_path=cache_path)

The Events object has an events attribute, which contains the extracted signals as well as their associated attributes (for example 'dz', 'x0') and metrics (for example 'v_area', 'v_signal_to_noise_ratio'). An explanation of all columns can be found in the class docstring via help(Events).

3.4.1.1 Filtering

Typically, datasets undergo filtering to adhere to experimental parameters or to eliminate outliers. Although a detailed discourse on filtering protocols is outside this document's scope, we address several prevalent scenarios. For astrocytic events, imposing a cutoff for event duration is beneficial, especially when distinguishing between rapid and protracted calcium events. Moreover, applying a threshold for the signal-to-noise ratio helps to discard events with negligible amplitude. Although it seems optimal to include as many events as possible, practical issues such as computational limits and the risk of mixing important events with irrelevant data require a selective approach. Additionally, filtering aids in removing ou
